What is the problem you are having with rclone?

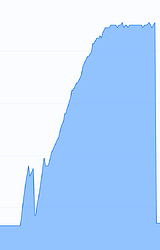

I’m seeing memory usage exceeding my available memory when using rclone, leading to OOM issues and my system killing the rclone process after 30 minutes to 2 hours.

Memory usage just creeps upwards, stays at 90%, and at some point the system kills the process.

I’ve used:

- --use-mmap

- --list-cutoff 10000

- --max-buffer-memory=1G

- export GOGC=20

I tried these to limit memory consumption, but nothing seems to help very much. The bucket in question contains millions of files in a single directory, which I believe might be the problem, but I was under the impression that --list-cutoff in particular should help with that.
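
Put together, the run looks roughly like the sketch below. It is assembled only from the options listed in this post. The one difference is that GOGC is set inline for the single rclone process instead of being exported, which should have the same effect for that process.

```shell
# Sketch: the copy described in this post, with the Go GC target set
# for this one rclone process only. All flags and paths come from the post.
GOGC=20 rclone copy GCS:data /volume1/gcs/data/ \
  --config /volume1/data/rclone.conf \
  --max-buffer-memory=1G \
  --use-mmap \
  --checkers 16 \
  --transfers 8 \
  --list-cutoff 10000 \
  --gcs-decompress -P \
  --log-file /volume1/data/log.txt
```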

Run the command 'rclone version' and share the full output of the command.

rclone v1.70.3

- os/version: unknown

- os/kernel: 4.4.302+ (x86_64)

- os/type: linux

- os/arch: amd64

- go/version: go1.24.4

- go/linking: static

- go/tags: none

Which cloud storage system are you using? (eg Google Drive)

Google Storage (GCS Buckets)

The command you were trying to run (eg rclone copy /tmp remote:tmp)

rclone copy GCS:data /volume1/gcs/data/

--config /volume1/data/rclone.conf

--max-buffer-memory=1G

--use-mmap

--checkers 16

--transfers 8

--list-cutoff 10000

--gcs-decompress -P

--log-file /volume1/data/log.txt

Please run 'rclone config redacted' and share the full output. If you get command not found, please make sure to update rclone.

[GCS]

type = google cloud storage

project_number = XXX

service_account_file = /volume1/data/service-acc.json

bucket_policy_only = true

location = XXX