What is the problem you are having with rclone?

Low throughput on NFS to S3 (Scality) copy/sync job.

Throughput is only about 70-110 MB/s.

Run the command 'rclone version' and share the full output of the command.

rclone version

rclone v1.64.0-beta.7128.22a14a8c9

- os/version: redhat 7.9 (64 bit)

- os/kernel: 3.10.0-1160.90.1.el7.x86_64 (x86_64)

- os/type: linux

- os/arch: amd64

- go/version: go1.20.5

- go/linking: static

- go/tags: none

Which cloud storage system are you using? (eg Google Drive)

Scality RING

The command you were trying to run (eg rclone copy /tmp remote:tmp)

rclone copy /mnt/regular_backup/ scality:migration-test/regular_backup/ --fast-list --transfers 32 --checkers 32 --progress -vv --no-traverse --no-check-dest

The transfers and checkers values came from a tip in another thread here. I also tested with and without --no-traverse and --no-check-dest, and with and without checksums.

The rclone config contents with secrets removed.

[SJscality]

type = s3

provider = Other

env_auth = false

access_key_id = xxx

secret_access_key = yyy

endpoint = s3.endpoint.com

acl = private

bucket_acl = private

chunk_size = 25Mi

list_version = 2

upload_cutoff = 200Mi

A log from the command with the -vv flag

2023-07-10 14:25:02 DEBUG : indices/JDRZv3L-RKKGXMdLI_YyTg/0/index-nPP43cwaT8Oy-P8ner2Bew: Need to transfer - File not found at Destination

2023-07-10 14:25:02 DEBUG : indices/JDRZv3L-RKKGXMdLI_YyTg/0/snap-OFhmgiwxQhql-D7lcUCu0Q.dat: Need to transfer - File not found at Destination

2023-07-10 14:25:02 DEBUG : indices/JDRZv3L-RKKGXMdLI_YyTg/0/snap-Y3crD1slSFaLfTT3AF98hQ.dat: Need to transfer - File not found at Destination

2023-07-10 14:25:02 DEBUG : indices/JDRZv3L-RKKGXMdLI_YyTg/0/snap-cIP2LKIdTuWgtSBKf_qy3A.dat: Need to transfer - File not found at Destination

2023-07-10 14:25:02 DEBUG : indices/JDRZv3L-RKKGXMdLI_YyTg/0/snap-qD3Lk6cTQsWuEUp1nAm1xg.dat: Need to transfer - File not found at Destination

2023-07-10 14:25:02 DEBUG : indices/JDRZv3L-RKKGXMdLI_YyTg/0/snap-rUwMOieSRp-BozQQicGQfQ.dat: Need to transfer - File not found at Destination

2023-07-10 14:25:02 DEBUG : indices/JDRZv3L-RKKGXMdLI_YyTg/0/snap-yaVYzBf-RSy4AEXFu8Rp3A.dat: Need to transfer - File not found at Destination

2023-07-10 14:25:02 DEBUG : indices/4E4rFLMdSACJHzlaV67IbA/0/__EtAfkBUqRVqTLiAW_t5mOA: md5 = 1236d10d3d56c0d767a900d8ce9bc094 OK

2023-07-10 14:25:02 INFO : indices/4E4rFLMdSACJHzlaV67IbA/0/__EtAfkBUqRVqTLiAW_t5mOA: Copied (new)

2023-07-10 14:25:02 DEBUG : indices/2rQ38jClTkauBgqUIdfMCg/0/__-tBxvGvbR_eYhSLSvKyxag: multipart upload starting chunk 4 size 25Mi offset 75Mi/278.286Mi

2023-07-10 14:25:02 DEBUG : indices/LgrIkFISRQS3Gu_q31cRig/meta-AEVpHIkBcjCN1-QRE4dj.dat: Need to transfer - File not found at Destination

2023-07-10 14:25:02 DEBUG : indices/0OJu_YynRh2C7csiVSjtVg/0/__p8gWFVjBR7O7jXSkCAiHsA: md5 = c23465a0ed5bcdf164dffbadd86b5d9c OK

2023-07-10 14:25:02 INFO : indices/0OJu_YynRh2C7csiVSjtVg/0/__p8gWFVjBR7O7jXSkCAiHsA: Copied (new)

2023-07-10 14:25:02 INFO : Signal received: interrupt

2023-07-10 14:25:02 DEBUG : indices/2rQ38jClTkauBgqUIdfMCg/0/__-tBxvGvbR_eYhSLSvKyxag: Cancelling multipart upload

2023-07-10 14:25:02 DEBUG : indices/2JO76VdZT_WtuaQY-R45cw/0/__3uwfC1tpSsqFFBl9wNQK8A: md5 = da84027050e51d03d0ede825c9a287d7 OK

2023-07-10 14:25:02 INFO : indices/2JO76VdZT_WtuaQY-R45cw/0/__3uwfC1tpSsqFFBl9wNQK8A: Copied (new)

2023-07-10 14:25:02 DEBUG : indices/1DMXJQjTSVSC3EdkBW5uQQ/0/snap-Y3crD1slSFaLfTT3AF98hQ.dat: md5 = b3d62c172c694a54f5328d4d8c49da2e OK

2023-07-10 14:25:02 INFO : indices/1DMXJQjTSVSC3EdkBW5uQQ/0/snap-Y3crD1slSFaLfTT3AF98hQ.dat: Copied (new)

2023-07-10 14:25:02 DEBUG : indices/3ZwG5ZnfSp68FXpfDtjHxg/0/__3mpis6lhTNq9Q2djFBesCw: Cancelling multipart upload

2023-07-10 14:25:02 DEBUG : indices/3UDRXQiOSjOY6MUXHyecuQ/0/__OydcXbRxQLSVjxrkwsP0kQ: md5 = 6b19a813c18be65f9f5e3c2878f68c5e OK

2023-07-10 14:25:02 INFO : indices/3UDRXQiOSjOY6MUXHyecuQ/0/__OydcXbRxQLSVjxrkwsP0kQ: Copied (new)

2023-07-10 14:25:02 INFO : Exiting...

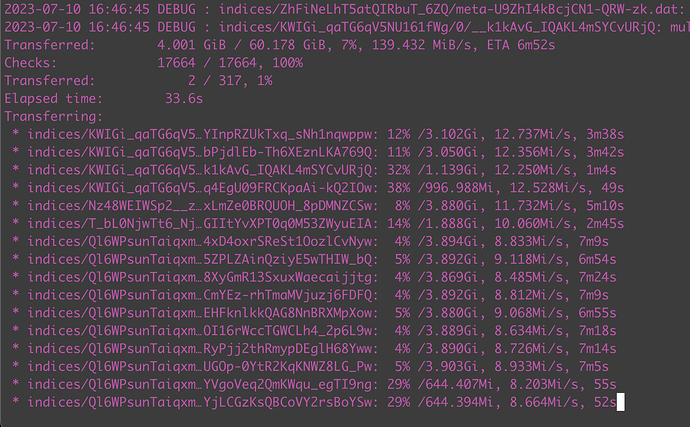

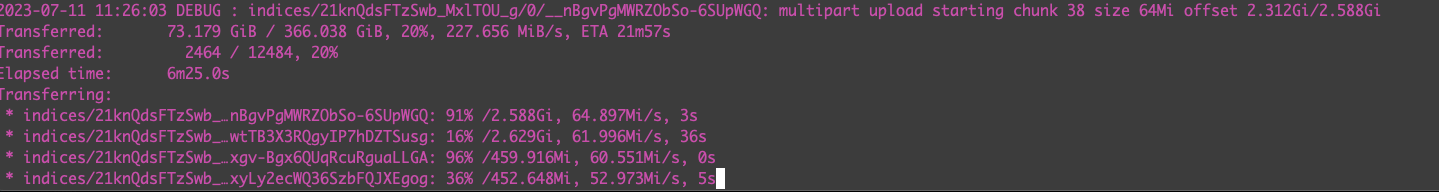

Transferred: 1.874 GiB / 219.115 GiB, 1%, 73.376 MiB/s, ETA 50m31s

Transferred: 1080 / 8306, 13%

Elapsed time: 25.9s

Transferring:

* indices/3_uqFIvzRIq1YL…-_Vlbzz_Tg-EH6tXRDztMQ: 87% /180.805Mi, 6.960Mi/s, 3s

* indices/2rQ38jClTkauBg…-tBxvGvbR_eYhSLSvKyxag: 36% /278.286Mi, 4.641Mi/s, 37s

* indices/3ZwG5ZnfSp68FX…1xgPM-urT3KMHhjY9S9lAA: 0% /654.942Mi, 0/s, -

* indices/2rQ38jClTkauBg…CIvRKHSsQtudCjYYS25jxg: 89% /155.614Mi, 7.054Mi/s, 2s

* indices/21knQdsFTzSwb_…-rnzRRzyTGaTfSQqr5bDeg: 0% /447.027Mi, 0/s, -

* indices/3ZwG5ZnfSp68FX…3mpis6lhTNq9Q2djFBesCw: 16% /208.697Mi, 1.930Mi/s, 1m30s

* indices/21knQdsFTzSwb_…0elJoFgDTZK1L_6HDtRFpw: 0% /2.623Gi, 0/s, -

* indices/0YnKHZqYTdmBsN…90CKOqKyQquJDb74wKGShQ: 0% /3.033Gi, 0/s, -

* indices/3_uqFIvzRIq1YL…6WALguR1T5yMjBjChsriOA: 67% /86.181Mi, 5.099Mi/s, 5s

* indices/0YnKHZqYTdmBsN…BwGogOwrTOW5SVpG6W9LUg: 6% /162.018Mi, 0/s, -

* indices/0YnKHZqYTdmBsN…DcJ6DngNRKGzGRLEJ69XDA: 13% /105.883Mi, 1.499Mi/s, 1m1s

* indices/21knQdsFTzSwb_…2WqdNhtfQXSy03S1-oYsQA: 92% /54.189Mi, 6.286Mi/s, 0s

* indices/2rQ38jClTkauBg…UacwJwZtQ825-nsPqW47lA: 91% /15.263Mi, 0/s, -

* indices/0YnKHZqYTdmBsN…F8tTQwbERruFCm8rkyV52A: 0% /161.804Mi, 0/s, -

* indices/2rQ38jClTkauBg…VQ9qEjXhQISc81aX34k4dw: 0% /32.992Mi, 0/s, -

* indices/0YnKHZqYTdmBsN…FhCTNMmKQn274in9yTlOAQ: 0% /39.768Mi, 0/s, -

* indices/2WBJ4AYEQgCpKb…xgT4WF9ZRKaxVHRbqPqqOA: 0% /1.318Ki, 0/s, -

* indices/2vESi_ysRHCYOV…6sILbAU7TbedTPYGpvzo_A: 0% /4.558Ki, 0/s, -

* indices/4VR9_Lm6R2CXZc…so3DjqoZSv6dljDHDyunjQ: 0% /646, 0/s, -

* indices/1ahaU2OQSbmIhi…_qHIxUF3T7iMEo5lft8zjQ: 0% /46.419Ki, 0/s, -

* indices/21knQdsFTzSwb_…4-wTolzVTSWLBtR1XPeRPA: 0% /2.247Mi, 0/s, -

* indices/-vfx6U4mSje38G…4NEMhPkjTd2dP46rjsWkAA: 0% /16.220Mi, 0/s, -

* indices/4DQ5sHCFQJ2D4l…OieSRp-BozQQicGQfQ.dat: 0% /492, 0/s, -

* indices/3ZwG5ZnfSp68FX…7FZAG13XQbuqKNGjMtHhzA: 0% /14.061Ki, 0/s, -

* indices/-rIaT6-zR8yKSv…tdTzrwwnTu-Vhw4yb1NmJQ: transferring

* indices/-aRPLjPBTsWVHV…ZqUDebVBRmSnE1CrhvkaAA: transferring

* indices/3wTBsd-BQgmMKS…DKGDxp6TTlGM-BHJuHNKvg: transferring

* indices/2rQ38jClTkauBg…Vyw-St7zSSO7zumZyhCowA: transferring

* indices/37Oxle3lTiGNrv…zQvwKcU6ScKlCLC_vdhekg: transferring

* indices/3_uqFIvzRIq1YL…DZAmXSdAS3OaAMDYiz1qBQ: transferring

* indices/47rCvLO1TMycsX…1xK09SHWTVii9Z59yRhwCg: transferring

* indices/18W6ZsYuRrqN-E…aqLt9PrnQzWMEuNF-_Bmag: transferring
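
As a sanity check on the summary line of the progress output above, here is a minimal Python sketch that recomputes the ETA from the reported totals and rate. The function name `eta_seconds` is only for illustration; the numbers are copied from the output above.

```python
# Recompute the ETA from the "Transferred:" summary line.
GIB = 1024 ** 3
MIB = 1024 ** 2

def eta_seconds(done_gib, total_gib, rate_mib_s):
    """Remaining bytes divided by the current transfer rate."""
    remaining_bytes = (total_gib - done_gib) * GIB
    return remaining_bytes / (rate_mib_s * MIB)

# Values taken from the progress output: 1.874 GiB / 219.115 GiB at 73.376 MiB/s
eta = eta_seconds(1.874, 219.115, 73.376)
minutes, seconds = divmod(int(eta), 60)
print(f"ETA: {minutes}m{seconds}s")
```

This agrees with the 50m31s that rclone reports, so the summary rate is consistent with the totals.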

The command is running on a VM with 16 GB of memory, 4 CPUs, and a vmxnet3 adapter dedicated to this job.

CPU consumption is 80-100%, but almost all of it is I/O wait ("wa" in top).

top - 14:32:49 up 3 days, 6:12, 3 users, load average: 21.78, 7.13, 7.74

Tasks: 198 total, 1 running, 197 sleeping, 0 stopped, 0 zombie

%Cpu(s): 15.3 us, 10.3 sy, 0.0 ni, 0.7 id, 72.0 wa, 0.0 hi, 1.8 si, 0.0 st

KiB Mem : 3861080 total, 443364 free, 786588 used, 2631128 buff/cache

KiB Swap: 2097148 total, 2096628 free, 520 used. 2771636 avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

71375 root 20 0 1165256 422684 17784 S 85.0 10.9 0:26.71 rclone

We have noticed that the NFS server and the S3 endpoint have different TCP window sizes: 65535 on NFS and 28960 on S3.
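
To get a feel for whether the window sizes could matter, here is a rough Python sketch of the usual bound that a single TCP connection can send at most one window per round trip. The 1 ms round-trip time is a hypothetical value, not something we measured, and the window sizes we observed may only be the initial advertised values, which window scaling can raise later, so treat this as a back-of-the-envelope estimate.

```python
def max_throughput_mb_s(window_bytes, rtt_seconds):
    """Upper bound for one TCP connection: one window per round trip, in MB/s."""
    return window_bytes / rtt_seconds / 1e6

# Hypothetical round-trip time of 1 ms; the real value should be measured (e.g. with ping).
ASSUMED_RTT = 0.001

for name, window in (("NFS", 65535), ("S3", 28960)):
    bound = max_throughput_mb_s(window, ASSUMED_RTT)
    print(f"{name}: {bound:.1f} MB/s per connection")
```

Under that assumed RTT the bound applies per connection, so with 32 parallel transfers the aggregate limit would be well above what we are seeing.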

Other than that, we can't see a reason for the low throughput. The VM is connected straight to the core network and should be able to make use of our 10G lines.

Please let me know if there is any flag, option, or other change that might help speed this up. We have 180 TB to move.
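
To show why this matters, here is a small Python sketch estimating how long 180 TB would take at the rates we currently see compared with the 10 Gbit/s line rate. It assumes decimal units (1 TB = 10^12 bytes, 1 MB = 10^6 bytes) and ignores protocol overhead; `days_to_transfer` is just an illustrative helper.

```python
def days_to_transfer(total_bytes, bytes_per_second):
    """Transfer duration in days at a constant rate."""
    return total_bytes / bytes_per_second / 86400

TOTAL_BYTES = 180e12  # 180 TB, assuming decimal terabytes

rates = {
    "70 MB/s": 70e6,
    "110 MB/s": 110e6,
    "10 Gbit/s line rate": 10e9 / 8,  # bits to bytes, no overhead accounted for
}

for label, rate in rates.items():
    print(f"{label}: {days_to_transfer(TOTAL_BYTES, rate):.1f} days")
```

At the current rates the migration would take roughly 19 to 30 days, against under 2 days at line rate.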