Locked account without warning

I did a cloud-to-cloud copy 3 times, so 40 TB.

But it would still be nice to get a warning, or even for them to say “hey, this is why you are blocked”… but nothing.

Yeah, they are super sensitive about bandwidth usage above the “norm”.

They should eventually unlock it; it happened to me 3 times.

But not lately; I use Google Drive unlimited for server-to-server copies.

I do daily backups to two ACD accounts, 3 G Suite for Business accounts, and 1 of those eBay shady G Suite for Education accounts.

I use the GDrive accounts for any new copies I need.

I mount ACD on two servers for Plex and mount GDrive on another.

I use the other two GDrive accounts for Plex Cloud.

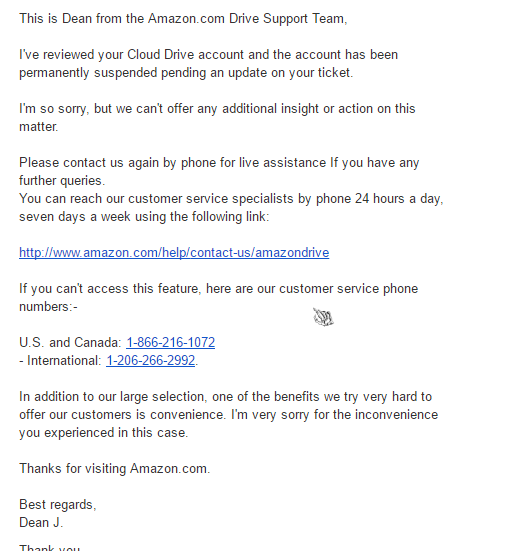

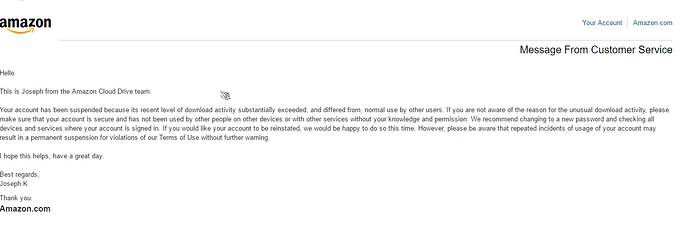

Yeah, normally they unlock it after I call them, but this time they seem unable to, or at least they don’t want to…

Hi @dtech4you,

Thanks for the sobering post.

Unfortunately, I think all “unlimited” cloud storage providers will sooner or later be forced to implement some kind of “limiting”, as more and more of their users really push the envelope of what “unlimited” means… since the means to provide such services (i.e., physical servers, disks, network connections, etc.) aren’t really “unlimited” in any way.

But I agree that banning (especially without any warning or explanation) is extreme; a much better approach would be for the service to throttle the operations that are giving it trouble (like GDrive does with new file creation).

Please keep us posted on how this evolves (i.e., when and on what basis Amazon reinstates your access).

Cheers,

Durval.

Hello @MartinBowling,

Wow! 5 remote backups?! You are my hero! And I was thinking that, with double local copies (to 100% “redundant” hard disks[1]) and a single cloud copy, I was being paranoid enough about my data’s preservation… but you really set a new standard for that.


Also, on the subject of “eBay shady G Suite for Education” accounts, you mean something like that, right? I’m wondering how long it will take until Google cracks down hard on that kind of stuff…

Cheers,

Durval.

[1] Actually, I use 4-disk raidz2 ZFS pools, which are then physically removed and split into two 2-disk sets stored in different locations.

Will do. I’d love to see how they respond, and how long it will take them to 1) do something and 2) tell me why.

And yes, I think that, as cool as the cloud is, lots of things will have to change.

I have Google Drive as a backup only because I have a .edu (free) account, but not all the new stuff is there; I only did one complete backup.

So I’m rebuilding the library from others I copied to. Got to get my stuff somehow lol

I need to figure out a way to have Plex scan files without making Google lock up…

I found that disabling automatic scanning helps. Also, I don’t have any issues with Emby library updates (12-hour interval).

A Plex library scan usually locks the account, so I ended up using Emby for my streaming needs…

@durval yes, I wonder whether we might see Google sending letters to the institutions hosting the parasite domains that people are selling accounts on. Or even just a public post on their blog urging institutions to check their domain admin panel, see which domains are associated with their account, and remove any non-educational domains.

Yeah, I’m pretty thorough about making sure I have my data in lots of places hehe

- local copy ACD
- local copy ACD2
- local copy DRIVE
- local copy DRIVE2
- DRIVE2 sync DRIVE3
- DRIVE3 sync DRIVE4
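The chain above maps naturally onto rclone’s `copy` and `sync` subcommands, which take a source and a destination. Here is a minimal sketch that builds and optionally runs the six legs in order, assuming the rclone remotes are literally named ACD, ACD2, DRIVE, DRIVE2, DRIVE3 and DRIVE4, and that `/data/media` and the remote folder `media` are hypothetical paths you would replace with your own.

```python
import shlex
import subprocess

# Hypothetical values: adjust to your own rclone remote names and paths.
LOCAL_SOURCE = "/data/media"
REMOTE_PATH = "media"

# (operation, source, destination) for each leg of the backup chain.
# "local" stands for the local library; every other name is an rclone remote.
CHAIN = [
    ("copy", "local", "ACD"),
    ("copy", "local", "ACD2"),
    ("copy", "local", "DRIVE"),
    ("copy", "local", "DRIVE2"),
    ("sync", "DRIVE2", "DRIVE3"),
    ("sync", "DRIVE3", "DRIVE4"),
]


def endpoint(name: str) -> str:
    """Turn a chain name into an rclone path argument."""
    if name == "local":
        return LOCAL_SOURCE
    return f"{name}:{REMOTE_PATH}"


def build_commands(chain):
    """Return one rclone argv list per leg, in chain order."""
    return [["rclone", op, endpoint(src), endpoint(dst)] for op, src, dst in chain]


def run_chain(chain, dry_run=True):
    """Print every command; execute them only when dry_run is False."""
    for argv in build_commands(chain):
        print(shlex.join(argv))
        if not dry_run:
            # check=True aborts the chain on the first failing leg, so a
            # later sync never runs on top of a copy that did not finish.
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    run_chain(CHAIN, dry_run=True)
```

Order matters here: `rclone sync` makes the destination match the source, deleting files that only exist on the destination, so DRIVE3 and DRIVE4 only ever mirror whatever DRIVE2 held at the time the sync ran.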

I also notice that the Emby scanner has a much “lighter” touch than Plex’s.

The Emby scanner just checks that the file exists; the Plex scanner opens every file and reads its header.
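To see why that difference matters on a cloud mount, here is a minimal sketch contrasting the two behaviours as described above: a listing-only scan versus one that opens every file and reads its first bytes. It assumes that on a cloud mount (for example an rclone mount) each open-and-read turns into a download request to the provider while listings are comparatively cheap; the 64 KiB `HEADER_BYTES` value is a hypothetical figure, not what Plex actually reads.

```python
import os
import tempfile
from pathlib import Path

HEADER_BYTES = 64 * 1024  # hypothetical header size; real scanners differ


def light_scan(root):
    """Listing-only scan: note which files exist, never open them."""
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            found.append(os.path.join(dirpath, name))
    return found, 0  # zero file opens


def heavy_scan(root):
    """Header scan: open every file and read its first bytes."""
    found, opens = [], 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            with open(path, "rb") as fh:
                fh.read(HEADER_BYTES)  # on a cloud mount, assumed to be a download
            opens += 1
            found.append(path)
    return found, opens


def demo(n_files=3):
    """Build a throwaway library of n_files and count opens for each scan."""
    with tempfile.TemporaryDirectory() as root:
        for i in range(n_files):
            Path(root, f"episode{i}.mkv").write_bytes(b"\0" * 1024)
        _, light_opens = light_scan(root)
        _, heavy_opens = heavy_scan(root)
        return light_opens, heavy_opens


if __name__ == "__main__":
    print(demo())
```

The listing scan’s cost stays flat as the library grows, while the header scan performs one open per file, which is exactly the request pattern a large library on a rate-sensitive provider cannot absorb.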

Hello Ajki,


Now I understand why you Plex-using folks are having trouble… in a cloud context and with a large library, this definitely can’t end well… :-/

Cheers,

Durval.

For your copy from Drive to Drive, are you sharing the folder from one drive to the other and then cloning it locally, or just copying straight from drive to drive?

Are you running it on the same server?

If you do, then simply having Plex detect changes will solve the problem for you (assuming your mounts are not on a NAS).

If you are downloading and uploading to the cloud on a different machine, then you need to set up a script that does it for you. I have such a setup: before I start rclone move, I store all new folders in a file, then upload that file after rclone move is complete. On my second server, I read from that file and trigger the Plex CLI scanner to refresh those new folders.
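The two-server handoff described above can be sketched as a small “manifest” of new folders. This is a minimal sketch, not the poster’s actual script: the staging path, manifest format (JSON), mount root, and especially the scanner invocation are assumptions. The `--scan --refresh --section N --directory PATH` form is how the Plex Media Scanner CLI is commonly called in scripts, but the binary path, section id, and flags should be checked against your own Plex install.

```python
import json
import os
from pathlib import Path

# Assumed scanner invocation; binary path, section id and flags depend on
# your Plex install, so verify before relying on it.
SCANNER = ["Plex Media Scanner", "--scan", "--refresh", "--section", "1", "--directory"]


def collect_new_folders(staging_dir):
    """Server A, step 1: record every top-level folder about to be moved."""
    with os.scandir(staging_dir) as entries:
        return sorted(entry.name for entry in entries if entry.is_dir())


def write_manifest(folders, manifest_path):
    """Server A, step 2: save the list so it can be uploaded after the move."""
    Path(manifest_path).write_text(json.dumps(folders))


def scanner_commands(manifest_path, mount_root):
    """Server B: one targeted scanner call per new folder on the cloud mount."""
    folders = json.loads(Path(manifest_path).read_text())
    return [SCANNER + [os.path.join(mount_root, name)] for name in folders]
```

On server A the order is: collect the folders, write the manifest, run `rclone move`, then upload the manifest (for example with `rclone copy`). Collecting before the move matters because the move empties the staging folder. Server B would pass each returned list to `subprocess.run(cmd, check=True)`, so Plex only touches the new folders instead of rescanning the whole library.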

I share the folder and then do a sync; that way I get a server-side move.

As of today, it is finally unlocked.

Well, unlocked just to sit there while they fix the big outage they caused…

https://status.aws.amazon.com/

Hahaha, we just need everyone to transfer hundreds of TB, and then nobody differs from the usual usage.

LOVE IT!!! Raise the fucking average to be off-the-charts crazy, maybe then the network will just grow… though I could see that ending in a failed cloud lol. If everyone does 100 TB/month, you’d need a PB-scale network setup XD

I just got locked yesterday; I thought maybe I had been “being good” lately. Guess not haha
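The “PB network” quip is easy to put numbers on. A minimal sketch, assuming decimal units (1 TB = 10^12 bytes) and a 30-day month: 100 TB a month works out to about 309 Mbit/s sustained around the clock, and just ten such users already move 1 PB a month.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def sustained_mbit_per_s(tb_per_month: float, days: int = 30) -> float:
    """Average line rate (Mbit/s) needed to move tb_per_month decimal TB in `days` days."""
    bits = tb_per_month * 1e12 * 8
    return bits / (days * SECONDS_PER_DAY) / 1e6


if __name__ == "__main__":
    per_user = sustained_mbit_per_s(100)
    print(f"100 TB/month is about {per_user:.0f} Mbit/s sustained")
    print(f"1,000 such users need about {per_user * 1000 / 1e3:.0f} Gbit/s")
```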