Hi,

I've been using rclone for more than a year now, with various settings. I'm running Cloudbox in a Feederbox/Mediabox setup on 2 different servers.

On the Mediabox (where Plex runs) I have a mergerfs mount in /mnt/unionfs/ that merges local files, the Feederbox's local files (through an rclone FTP remote) and Google Drive (through rclone).

That way, files that are not yet uploaded to Google Drive are still instantly accessible to Plex through the FTP remote.

On the Feederbox there is also a mergerfs mount in /mnt/unionfs, merging local files and Google Drive (through rclone).

I just renamed a lot of my TV/movie folders via Sonarr/Radarr to include the TMDB and TVDB IDs in the folder names, and restarted a full scan. For 4 days now (260 TB of data), the following message has popped up after a while every day, slowing the process down. Bazarr is rescanning too.

Both of them are making a lot of mediainfo (ffprobe) requests, which shouldn't generate much traffic, but I still get these messages:

"vfs cache: failed to download: vfs reader: failed to write to cache file: open file failed: googleapi: Error 403: The download quota for this file has been exceeded., downloadQuotaExceeded"

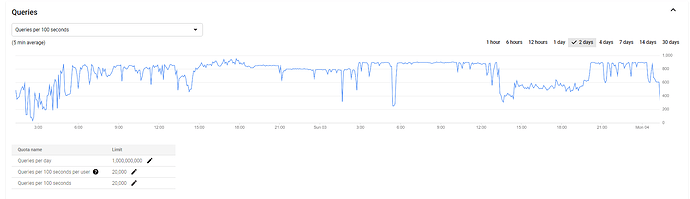

After the quota resets, everything is fine for about 10 hours, then I hit some kind of limit. The API calls all look fine on the Google dashboard. The two servers download about 1.5 TB combined before I get this message (the download limit is supposedly 10 TB, as I've read everywhere), while my Feederbox is currently uploading 750 GB every day.
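To put the 1.5 TB figure in perspective, here is a rough back-of-the-envelope sketch. It assumes decimal TB, that the commonly quoted 10 TB/day figure is the relevant cap (not confirmed), and that each file open fetches one full first chunk, matching the `ChunkedReader.openRange at 0 length 134217728` lines in the log at the end of this post. I don't know whether Google counts requested or actually transferred bytes, so treat the result as an order of magnitude, not a measurement.

```python
# Back-of-the-envelope only. Assumptions (not confirmed):
#  - 1 TB = 10**12 bytes
#  - every file open fetches one full 128 MiB chunk, as in the log's
#    "ChunkedReader.openRange at 0 length 134217728" lines
#  - the 10 TB/day figure is the cap that matters
CHUNK_BYTES = 134_217_728          # chunk size taken from the log (128 MiB)
OBSERVED_DOWNLOAD_BYTES = 1.5e12   # ~1.5 TB combined across both servers
ASSUMED_DAILY_CAP_BYTES = 10e12    # commonly quoted 10 TB/day figure


def full_chunk_opens(total_bytes: float, chunk_bytes: int = CHUNK_BYTES) -> int:
    """Number of complete chunk-sized reads that add up to total_bytes."""
    return int(total_bytes // chunk_bytes)


if __name__ == "__main__":
    print(f"chunk size: {CHUNK_BYTES / 2**20:.0f} MiB")
    print(f"full-chunk opens in ~1.5 TB: {full_chunk_opens(OBSERVED_DOWNLOAD_BYTES)}")
    print(f"share of assumed cap used: {OBSERVED_DOWNLOAD_BYTES / ASSUMED_DAILY_CAP_BYTES:.0%}")
```

In other words, roughly eleven thousand full-chunk opens would already add up to the 1.5 TB I see, which is not much for a library scan of this size.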

I even have a VFS priming service that runs every 167 hours.
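One way to do this kind of priming (an assumption, not necessarily how my service is implemented) is to call rclone's remote-control `vfs/refresh` command on the mount's rc server, which the ExecStart below enables with `--rc --rc-addr=localhost:5572`. A minimal Python sketch, assuming no rc authentication is configured, equivalent to `rclone rc vfs/refresh recursive=true`:

```python
import json
import urllib.request

# Assumption: the mount runs with --rc --rc-addr=localhost:5572 and the rc
# server has no authentication configured.
RC_ADDR = "localhost:5572"


def build_vfs_refresh_request(addr: str = RC_ADDR, recursive: bool = True) -> urllib.request.Request:
    """Build a POST to rclone's rc `vfs/refresh` endpoint.

    The rc API takes its parameters as a JSON object; this mirrors
    `rclone rc vfs/refresh recursive=true`.
    """
    body = json.dumps({"recursive": "true" if recursive else "false"}).encode()
    return urllib.request.Request(
        f"http://{addr}/vfs/refresh",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # A recursive refresh of a large tree can take a long time; the timeout
    # value here is illustrative only.
    with urllib.request.urlopen(build_vfs_refresh_request(), timeout=3600) as resp:
        print(json.loads(resp.read()))
```

Building the request separately from sending it keeps the sketch easy to inspect before pointing it at a live mount.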

I've read here multiple times that nobody runs into issues when doing a full scan, so I'm a bit confused about what is happening.

I have unticked all the non-recommended settings in all of these applications, but I'm still hitting something.

I'd like to add Emby in the next few weeks and run a scan with it too, but now I'm afraid it will also break everything for about a week, just like Plex is doing right now.

What is your rclone version (output from rclone version)

rclone v1.56.2

- os/version: ubuntu 18.04 (64 bit)
- os/kernel: 4.15.0-123-generic (x86_64)
- os/type: linux
- os/arch: amd64
- go/version: go1.16.8
- go/linking: static
- go/tags: none

Which cloud storage system are you using? (eg Google Drive)

Google Drive

The rclone config contents with secrets removed.

[gd]
type = drive
client_id = xxxxxxxxxxxxxxxxxxxxx
client_secret = xxxxxxxxxxxxxxxxxxxxxxxxxxx
token = xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx
root_folder_id = xxxxxxxxxxxxxxxxxxxxxxxxx

[google]
type = crypt
remote = gd:
filename_encryption = standard
directory_name_encryption = true
password = xxxxxxxxxxxxxxxxxxxxxxxx
password2 = xxxxxxxxxxxxxxxxxxxxx

rclone service ExecStart (the same on both servers, except that the Feederbox uses --tpslimit=4, so that the combined limit of the 2 servers doesn't go over 10):

ExecStart=/usr/bin/rclone mount \
--user-agent='Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.131 Safari/537.36' \
--config=/home/seed/.config/rclone/rclone.conf \
--allow-other \
--rc \
--rc-addr=localhost:5572 \
--umask 002 \
--dir-cache-time 5000h \
--attr-timeout=5000h \
--poll-interval 10s \
--cache-dir=/cache \
--vfs-cache-mode full \
--vfs-read-ahead 2G \
--vfs-cache-max-size 150G \
--vfs-cache-poll-interval 5m \
--vfs-cache-max-age 5000h \
--log-level=DEBUG \
--stats=1m \
--stats-log-level=NOTICE \
--syslog \
--tpslimit=6 \
google: /mnt/remote

For some reason, I also noticed a few moments ago that the /cache folder had shrunk back to a few MB. I'm not sure why, so I just added --vfs-cache-max-age 5000h.

A log from the command with the -vv flag

Oct 3 14:10:49 s165879 rclone[1502747]: Media/TV/South Park (1997) [tvdb-75897]/Season 08/: ReadDirAll:
Oct 3 14:10:49 s165879 rclone[1502747]: Media/Movies/Life Is a Long Quiet River (1988) {tmdb-4271}/Life.Is.a.Long.Quiet.River.1988.HDTV-1080p.x264.DTS-HD.MA[FR].[FR]-RADARR {imdb-tt0096386}.mkv: vfs cache: downloader: error count now 4: vfs reader: failed to write to cache file: open file failed: googleapi: Error 403: The download quota for this file has been exceeded., downloadQuotaExceeded
Oct 3 14:10:49 s165879 rclone[1502747]: Media/Movies/Life Is a Long Quiet River (1988) {tmdb-4271}/Life.Is.a.Long.Quiet.River.1988.HDTV-1080p.x264.DTS-HD.MA[FR].[FR]-RADARR {imdb-tt0096386}.mkv: vfs cache: failed to download: vfs reader: failed to write to cache file: open file failed: googleapi: Error 403: The download quota for this file has been exceeded., downloadQuotaExceeded
Oct 3 14:10:49 s165879 rclone[1502747]: Media/Movies/Life Is a Long Quiet River (1988) {tmdb-4271}/Life.Is.a.Long.Quiet.River.1988.HDTV-1080p.x264.DTS-HD.MA[FR].[FR]-RADARR {imdb-tt0096386}.mkv: ChunkedReader.RangeSeek from -1 to 0 length -1
Oct 3 14:10:49 s165879 rclone[1502747]: Media/Movies/Life Is a Long Quiet River (1988) {tmdb-4271}/Life.Is.a.Long.Quiet.River.1988.HDTV-1080p.x264.DTS-HD.MA[FR].[FR]-RADARR {imdb-tt0096386}.mkv: ChunkedReader.Read at -1 length 4096 chunkOffset 0 chunkSize 134217728
Oct 3 14:10:49 s165879 rclone[1502747]: Media/Movies/Life Is a Long Quiet River (1988) {tmdb-4271}/Life.Is.a.Long.Quiet.River.1988.HDTV-1080p.x264.DTS-HD.MA[FR].[FR]-RADARR {imdb-tt0096386}.mkv: ChunkedReader.openRange at 0 length 134217728
Oct 3 14:10:49 s165879 rclone[1502747]: Media/Movies/Wonders of the Sea 3D (2017) {tmdb-476070}/Wonders.of.the.Sea.2017.Bluray-1080p.x264.AC3[FR+EN].[FR+EN]-THREESOME {imdb-tt5495792}.mkv: vfs cache: downloader: error count now 4: vfs reader: failed to write to cache file: open file failed: googleapi: Error 403: The download quota for this file has been exceeded., downloadQuotaExceeded
Oct 3 14:10:49 s165879 rclone[1502747]: Media/Movies/Wonders of the Sea 3D (2017) {tmdb-476070}/Wonders.of.the.Sea.2017.Bluray-1080p.x264.AC3[FR+EN].[FR+EN]-THREESOME {imdb-tt5495792}.mkv: vfs cache: failed to download: vfs reader: failed to write to cache file: open file failed: googleapi: Error 403: The download quota for this file has been exceeded., downloadQuotaExceeded
Oct 3 14:10:49 s165879 rclone[1502747]: Media/Movies/Wonders of the Sea 3D (2017) {tmdb-476070}/Wonders.of.the.Sea.2017.Bluray-1080p.x264.AC3[FR+EN].[FR+EN]-THREESOME {imdb-tt5495792}.mkv: ChunkedReader.RangeSeek from -1 to 0 length -1
Oct 3 14:10:49 s165879 rclone[1502747]: Media/Movies/Wonders of the Sea 3D (2017) {tmdb-476070}/Wonders.of.the.Sea.2017.Bluray-1080p.x264.AC3[FR+EN].[FR+EN]-THREESOME {imdb-tt5495792}.mkv: ChunkedReader.Read at -1 length 4096 chunkOffset 0 chunkSize 134217728
Oct 3 14:10:49 s165879 rclone[1502747]: Media/Movies/Wonders of the Sea 3D (2017) {tmdb-476070}/Wonders.of.the.Sea.2017.Bluray-1080p.x264.AC3[FR+EN].[FR+EN]-THREESOME {imdb-tt5495792}.mkv: ChunkedReader.openRange at 0 length 134217728
Oct 3 14:10:49 s165879 rclone[273422]: Media/TV/A Haunting (2005) [tvdb-79535]/: >Lookup: node=Media/TV/A Haunting (2005) [tvdb-79535]/Season 10/, err=<nil>
Oct 3 14:10:49 s165879 rclone[273422]: Media/TV/A Haunting (2005) [tvdb-79535]/Season 10/: Attr:
Oct 3 14:10:49 s165879 rclone[273422]: Media/TV/A Haunting (2005) [tvdb-79535]/Season 10/: >Attr: attr=valid=1s ino=0 size=0 mode=drwxrwxr-x, err=<nil>
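To see which files hit the quota most often, a small sketch like this could tally the `failed to download ... downloadQuotaExceeded` lines per file. It assumes the syslog line format shown above and that rclone's `--syslog` output ends up in `/var/log/syslog` (Ubuntu's default rsyslog location; an assumption, adjust if needed). The `downloader: error count now N` lines are deliberately not matched, so each failure is counted once.

```python
import re
import sys
from collections import Counter
from typing import Iterable

# Matches syslog lines like:
#   Oct 3 14:10:49 host rclone[1502747]: <path>: vfs cache: failed to download: ... downloadQuotaExceeded
# The file path is the text between "rclone[pid]: " and ": vfs cache: failed to download:".
QUOTA_RE = re.compile(
    r"rclone\[\d+\]: (?P<path>.+?): vfs cache: failed to download: .*downloadQuotaExceeded"
)


def quota_errors_per_file(lines: Iterable[str]) -> Counter:
    """Count downloadQuotaExceeded download failures per file path."""
    counts: Counter = Counter()
    for line in lines:
        match = QUOTA_RE.search(line)
        if match:
            counts[match.group("path")] += 1
    return counts


if __name__ == "__main__":
    # Assumed default location; pass another log file as the first argument.
    log_path = sys.argv[1] if len(sys.argv) > 1 else "/var/log/syslog"
    with open(log_path, errors="replace") as fh:
        for path, count in quota_errors_per_file(fh).most_common(20):
            print(count, path)
```

If the same few files dominate the list, that would point to per-file quota rather than an account-wide limit, though this sketch alone can't prove either.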

Thanks in advance if you can help!