Hi, I’m hoping someone can lend a hand with my “fiasco” … I’ve spent time researching on my own and I’m just stumped.

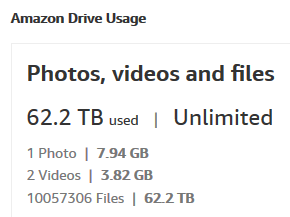

- Scope of issue: Trying to migrate 62.2 TB off ACD before it is deleted (I’ll toss some of it, but need to review it first)

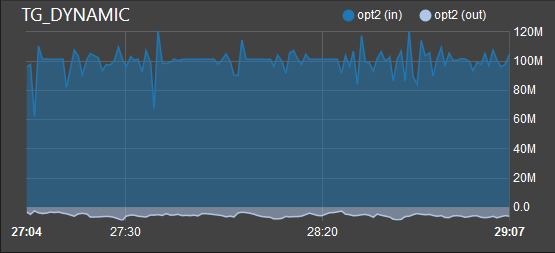

- ISP: 100/70 Mbps connection

- FreeNAS AIO: 96TB Raw HDD Capacity
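For a rough sense of scale, here is a back-of-the-envelope estimate of the best-case download time. It is only a sketch under stated assumptions: decimal units (1 TB = 10^12 bytes), the 100 Mbps figure being the downstream rate, and the link staying saturated with no protocol overhead or throttling. None of those is likely to hold in practice, so the real figure would be longer.

```python
# Back-of-the-envelope download time for the ACD migration.
# Assumptions (not measured): decimal units, 100 Mbps is the downstream
# rate, and the link is fully saturated with no overhead or throttling.

def transfer_days(size_tb: float, link_mbps: float) -> float:
    """Return the ideal transfer time in days."""
    size_bits = size_tb * 1e12 * 8           # TB -> bytes -> bits
    seconds = size_bits / (link_mbps * 1e6)  # Mbps -> bits per second
    return seconds / 86_400                  # 86,400 seconds per day


if __name__ == "__main__":
    days = transfer_days(62.2, 100)
    print(f"{days:.1f} days at line rate")
```

Under those assumptions the full 62.2 TB works out to a little under two months of continuous downloading, which is why any rate limiting matters so much here.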

I used Cloudberry Explorer to upload these Linux ISOs; however, when I recently tried to reverse the process (ACD => local), every file I attempted to move failed with an error along the lines of “Object or Element Not Found” (I can’t recall the exact wording).

As an aside, the Linux ISO joke is funny and I only learned of it recently. Before I learned how to do offsite backups properly, I 7z’ed my files with a password into 8 GB volumes and uploaded them. Hey, at least I was sorta encrypting. At any rate, it’s far from a best-case scenario.

Having read the news, I thought that rclone wouldn’t be a feasible option, until I came across this gem: Proxy for Amazon Cloud Drive

I managed to pull around 100 GB off ACD and then rclone just stopped (more on that below).

Log file (substituted more meaningful names where appropriate):

Version: rclone-v1.38-050-g4a1013f2-windows-amd64

rclone sync acd:directory\subdirectory \\freenas\pool\SMBShare\directory --acd-templink-threshold 0 --low-level-retries 1 --stats 10s --log-file=FILE -vv

rclone: Version "v1.38-050-g4a1013f2" starting with parameters ["rclone" "sync" "acd:Backups\Dir\SubDir" "\\sullynas\Pool\SMBShare\Dir" "--acd-templink-threshold" "0" "--stats" "10s" "--log-file=FILE" "-vv"]

2017/11/02 12:02:39 DEBUG : amazon drive root 'Backups/Dir/SubDir': Token expired but no uploads in progress - doing nothing

2017/11/02 12:02:39 DEBUG : Keeping previous permissions for config file: -rw-rw-rw-

2017/11/02 12:02:39 DEBUG : acd: Saved new token in config file

2017/11/02 12:02:40 INFO : amazon drive root 'Backups …': Modify window not supported

2017/11/02 12:02:41 DEBUG : FILENAME.7z.003: Downloading large object via tempLink

2017/11/02 12:02:41 DEBUG : FILENAME.002: Downloading large object via tempLink

2017/11/02 12:02:41 DEBUG : FILENAME.004: Downloading large object via tempLink

2017/11/02 12:02:41 DEBUG : FILENAME.7z.001: Downloading large object via tempLink

2017/11/02 12:02:41 DEBUG : pacer: Rate limited, sleeping for 593.167455ms (1 consecutive low level retries)

2017/11/02 12:02:41 DEBUG : pacer: low level retry 1/10 (error <nil>)

2017/11/02 12:02:41 DEBUG : pacer: Rate limited, sleeping for 1.424990005s (2 consecutive low level retries)

2017/11/02 12:02:41 DEBUG : pacer: low level retry 1/10 (error <nil>)

2017/11/02 12:02:41 DEBUG : pacer: Rate limited, sleeping for 1.081266286s (3 consecutive low level retries)

2017/11/02 12:02:41 DEBUG : pacer: low level retry 1/10 (error <nil>)

2017/11/02 12:02:41 DEBUG : pacer: Rate limited, sleeping for 4.003431565s (4 consecutive low level retries)

2017/11/02 12:02:41 DEBUG : pacer: low level retry 1/10 (error <nil>)

2017/11/02 12:02:41 DEBUG : pacer: Rate limited, sleeping for 1.016469993s (5 consecutive low level retries)

2017/11/02 12:02:41 DEBUG : pacer: low level retry 2/10 (error <nil>)

2017/11/02 12:02:41 DEBUG : pacer: Rate limited, sleeping for 23.634344457s (6 consecutive low level retries)

2017/11/02 12:02:41 DEBUG : pacer: low level retry 2/10 (error <nil>)

2017/11/02 12:02:42 DEBUG : pacer: Rate limited, sleeping for 14.698025498s (7 consecutive low level retries)

2017/11/02 12:02:42 DEBUG : pacer: low level retry 2/10 (error <nil>)

2017/11/02 13:00:39 DEBUG : pacer: Rate limited, sleeping for 3m25.513408922s (35 consecutive low level retries)

2017/11/02 13:00:39 DEBUG : pacer: low level retry 9/10 (error <nil>)

2017/11/02 13:00:43 INFO :

Transferred: 0 Bytes (0 Bytes/s)

Errors: 0

Checks: 0

Transferred: 0

Elapsed time: 55m41.1s

Transferring:

* FILENAME.7z.001

* FILENAME.7z.002

* FILENAME.7z.003

* FILENAME.7z.004
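To see how much of the elapsed time the pacer spent sleeping, the log can be summarized with a short script. This is only a sketch: it assumes the log lines have exactly the `pacer: Rate limited, sleeping for <duration>` shape shown above and that durations are printed in Go's style (e.g. `3m25.513408922s`). The function names are my own and not part of rclone.

```python
import re

# Seconds per unit for the suffixes Go uses when printing a duration.
_UNIT_SECONDS = {
    "h": 3600.0, "m": 60.0, "s": 1.0,
    "ms": 1e-3, "us": 1e-6, "µs": 1e-6, "ns": 1e-9,
}
# Two-letter units come first so "ms" is not read as "m" followed by "s".
_PART = re.compile(r"(\d+(?:\.\d+)?)(ns|us|µs|ms|h|m|s)")
# Assumed line shape, taken from the log excerpt in this post.
_SLEEP = re.compile(r"pacer: Rate limited, sleeping for (\S+)")


def go_duration_seconds(text: str) -> float:
    """Convert a Go-style duration such as '3m25.5s' to seconds."""
    parts = _PART.findall(text)
    if not parts or "".join(n + u for n, u in parts) != text:
        raise ValueError(f"unrecognised duration: {text!r}")
    return sum(float(n) * _UNIT_SECONDS[u] for n, u in parts)


def pacer_sleep_seconds(log_text: str) -> list:
    """Return every pacer sleep found in the log, in seconds."""
    return [go_duration_seconds(m.group(1)) for m in _SLEEP.finditer(log_text)]


if __name__ == "__main__":
    sample = (
        "2017/11/02 12:02:41 DEBUG : pacer: Rate limited, sleeping for "
        "593.167455ms (1 consecutive low level retries)\n"
        "2017/11/02 13:00:39 DEBUG : pacer: Rate limited, sleeping for "
        "3m25.513408922s (35 consecutive low level retries)\n"
    )
    sleeps = pacer_sleep_seconds(sample)
    print(f"{len(sleeps)} sleeps, {sum(sleeps):.1f} s in total")
```

Running it over the full log file instead of the two sample lines would show whether the 55 minutes of elapsed time was almost entirely back-off.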

I think one of my issues is that a subdirectory name on ACD is “Real Estate”, which shows up in the log as xxxReal" “Estate”, but that doesn’t come close to explaining the rate limiting. Help please! And thank you.
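The split “Real Estate” argument looks like a quoting problem: an unquoted space turns one path into two arguments. A minimal sketch of the effect, and of one way around it, is below. The path `acd:Backups/Real Estate` and the helper `build_rclone_args` are hypothetical stand-ins for illustration; the flags are the ones from my command above. Note that `shlex` follows POSIX shell rules, so it only approximates what the Windows command prompt does.

```python
import shlex
import subprocess

# Without quotes, the space splits the remote path into two arguments.
unquoted = shlex.split("rclone sync acd:Backups/Real Estate /mnt/dest")
# With quotes, the path survives as a single argument.
quoted = shlex.split('rclone sync "acd:Backups/Real Estate" /mnt/dest')


def build_rclone_args(source: str, dest: str) -> list:
    """Build the argument list directly, so no shell quoting is involved.

    Hypothetical helper; the flags mirror the command in this post.
    """
    return [
        "rclone", "sync", source, dest,
        "--acd-templink-threshold", "0",
        "--stats", "10s",
        "--log-file=FILE",
        "-vv",
    ]


if __name__ == "__main__":
    print(unquoted)  # the path is broken into two pieces here
    print(quoted)    # the path stays in one piece here
    args = build_rclone_args("acd:Backups/Real Estate",
                             r"\\freenas\pool\SMBShare\directory")
    print(args)
    # Passing a list avoids shell parsing entirely; uncomment to run rclone.
    # subprocess.run(args, check=True)
```

On the command line itself, the equivalent fix would be wrapping the whole remote path in double quotes.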