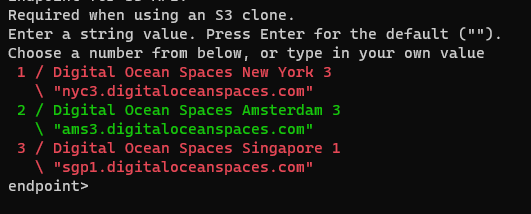

Screenshots from DOS & RC terminal

what should I do? Should I type fra1.digitaloceanspaces.com, or do I have to add it to the config manually for now, until it is added?

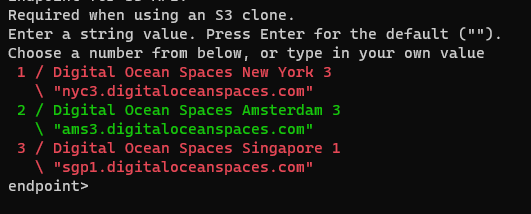


as per that screenshot, just enter the value

yeah, I pressed Enter!

not sure what you mean?

did you enter the endpoint value or not?

but how do I mark this as Solved?

just press Enter.

you have two choices.

Yes that is what you have to do. Just type the full endpoint address.
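For reference, the answer to that prompt ends up as the `endpoint` line of an s3 section in rclone's config file. Below is a minimal Python sketch, assuming a remote named `spaces` and placeholder keys (both hypothetical), that renders such a section with the full Frankfurt endpoint typed in. The field names follow rclone's s3 backend documentation, but compare them with the file your own rclone version writes.

```python
import configparser
import io


def spaces_remote_config(name: str, endpoint: str,
                         access_key_id: str, secret_access_key: str) -> str:
    """Render an rclone s3 remote section for DigitalOcean Spaces as INI text."""
    # interpolation=None so '%' characters in secrets are stored literally.
    config = configparser.ConfigParser(interpolation=None)
    config[name] = {
        "type": "s3",
        "provider": "DigitalOcean",
        "env_auth": "false",
        "access_key_id": access_key_id,
        "secret_access_key": secret_access_key,
        # The full endpoint address, as typed at the endpoint prompt.
        "endpoint": endpoint,
        "acl": "private",
    }
    buf = io.StringIO()
    config.write(buf)
    return buf.getvalue()


if __name__ == "__main__":
    # Placeholder credentials; never paste real keys into a forum post.
    print(spaces_remote_config("spaces", "fra1.digitaloceanspaces.com",
                               "YOUR_ACCESS_KEY", "YOUR_SECRET_KEY"))
```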

This would be easy to add if someone wants to make a pull request.

BTW, I just pressed Enter ("Press Enter for Default") and it's working fine! Thanks for making rclone!

if you just press Enter, i think that you will not be using fra1.digitaloceanspaces.com.

i could be wrong tho.
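Whether an endpoint was actually saved can be read straight from the config file (`rclone config file` prints its location). Below is a minimal Python sketch, assuming the file's contents are available as a string and the remote is called `spaces` (hypothetical). It reports the stored endpoint, or `None` when the key is empty or absent, which is what one would expect if the prompt was skipped.

```python
import configparser
from typing import Optional


def endpoint_for(config_text: str, remote: str) -> Optional[str]:
    """Return the endpoint stored for `remote`, or None if it is empty or missing."""
    config = configparser.ConfigParser(interpolation=None)
    config.read_string(config_text)
    if not config.has_section(remote):
        raise KeyError(f"no remote named {remote!r} in config")
    value = config.get(remote, "endpoint", fallback="").strip()
    return value or None


if __name__ == "__main__":
    # Hypothetical config where the endpoint prompt was left blank.
    sample = "[spaces]\ntype = s3\nprovider = DigitalOcean\nendpoint =\n"
    print(endpoint_for(sample, "spaces"))
```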

can you post the config file, with the secrets and IDs removed?
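Before posting a config, the ID and secret values need to be blanked out. Below is a minimal Python sketch that does this with the standard library's `configparser`. The set of keys treated as secret is an assumption (the s3 key pair plus crypt passwords and OAuth tokens) and may need extending for other backends. Note that `configparser` drops comments when it rewrites the file.

```python
import configparser
import io

# Keys treated as secret in this sketch (an assumption; extend for other backends).
SENSITIVE_KEYS = {"access_key_id", "secret_access_key", "password", "password2", "token"}


def redact_config(config_text: str) -> str:
    """Return the config text with the values of sensitive keys replaced by XXX."""
    config = configparser.ConfigParser(interpolation=None)
    config.read_string(config_text)
    for section in config.sections():
        for key in list(config[section]):
            if key in SENSITIVE_KEYS and config[section][key]:
                config[section][key] = "XXX"
    buf = io.StringIO()
    config.write(buf)
    return buf.getvalue()


if __name__ == "__main__":
    # Hypothetical sample config with fake credentials.
    sample = ("[spaces]\ntype = s3\naccess_key_id = AKEXAMPLE\n"
              "secret_access_key = s3cr3t\nendpoint = fra1.digitaloceanspaces.com\n")
    print(redact_config(sample))
```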

Let me set it up again, from the start!

No endpoint selected! But I'm facing an issue with constant ping drops while streaming.

It buffers every 2-3 seconds.

you are not supplying much information, which makes it hard to help you.

when you first posted, there was a template of questions; it would be helpful to post that now.

for testing, are you using any other cloud providers with rclone?

I don't think I need a deep dive into rclone sync! I found out I just need a backup solution! Buckets may be cheaper, but in the real world I need to invest in a CDN and actual infrastructure and hardware! So rclone does the job I'm looking for!

But I need somewhere to store data! I think AWS Deep Glacier might be good for this case, because I don't want to waste time and effort on over-priced buckets! I need to invest in a CDN!

Now I'm looking for a tutorial on how to connect it with Mountain Duck, because Cryptomator is awesome! rclone crypt is good too, but I really don't know how to decrypt only selected files, rather than syncing the whole folder when I need it in the future!

i use a combination of wasabi for hot storage and aws s3 deep glacier for cold storage.

i keep the most recent backups in wasabi, and then move them to deep glacier.
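That hot-to-cold flow can be expressed as a single `rclone move`. Below is a minimal sketch that only builds the command, which you can then run with `subprocess.run` or paste into a shell. The remote names `wasabi:` and `aws:`, the bucket names, and the 30-day cutoff are all hypothetical and are not the poster's actual setup.

```python
import shlex
from typing import List


def archive_command(hot: str, cold: str, min_age: str = "30d") -> List[str]:
    """Build an rclone command that moves older backups from hot to cold storage."""
    return [
        "rclone", "move", hot, cold,
        # Only move files older than min_age; newer backups stay in hot storage.
        "--min-age", min_age,
        # Store the uploaded objects in the S3 Glacier Deep Archive storage class.
        "--s3-storage-class", "DEEP_ARCHIVE",
    ]


if __name__ == "__main__":
    # Hypothetical remote and bucket names.
    print(shlex.join(archive_command("wasabi:backups", "aws:cold-backups")))
```

The `--s3-storage-class` flag should apply to every s3-type remote in the command. Setting `storage_class = DEEP_ARCHIVE` in the AWS remote's own config section is a narrower alternative.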

there is not an rclone command to encrypt and decrypt a file; the encryption and decryption are transparent.

when a folder/file is copied/moved/synced, it is encrypted/decrypted in transit.
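To illustrate what "transparent" means here: the wrapper encrypts data before anything reaches the backing store and decrypts it after it comes back, so callers only ever handle plaintext. The Python sketch below is a toy that rests on several assumptions. A dict stands in for the cloud remote, and the cipher is an HMAC-SHA256 keystream, chosen only because it is in the standard library. The sketch has no integrity check and does not encrypt file names. It is not rclone's format: rclone crypt uses NaCl secretbox for file contents and can also encrypt names.

```python
import hashlib
import hmac
import os
from typing import Dict

NONCE_SIZE = 16


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """TOY ONLY: concatenate HMAC-SHA256(key, nonce || counter) blocks."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256).digest()
        counter += 1
    return bytes(out[:length])


class CryptWrapper:
    """Wraps a backing store: encrypt before 'upload', decrypt after 'download'."""

    def __init__(self, backing: Dict[str, bytes], key: bytes) -> None:
        self.backing = backing  # stands in for the wrapped remote
        self.key = key

    def put(self, name: str, data: bytes) -> None:
        nonce = os.urandom(NONCE_SIZE)  # fresh nonce per object
        ks = _keystream(self.key, nonce, len(data))
        # Only ciphertext (prefixed by its nonce) is stored "at rest".
        self.backing[name] = nonce + bytes(a ^ b for a, b in zip(data, ks))

    def get(self, name: str) -> bytes:
        blob = self.backing[name]
        nonce, body = blob[:NONCE_SIZE], blob[NONCE_SIZE:]
        ks = _keystream(self.key, nonce, len(body))
        return bytes(a ^ b for a, b in zip(body, ks))


if __name__ == "__main__":
    store: Dict[str, bytes] = {}
    remote = CryptWrapper(store, b"k" * 32)
    remote.put("notes.txt", b"hello world")
    print(store["notes.txt"].hex())   # what the "remote" holds
    print(remote.get("notes.txt"))    # what the caller sees
```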

as per the documentation:

" When working against the crypt remote, rclone will automatically encrypt (before uploading) and decrypt (after downloading) on your local system as needed on the fly, leaving the data encrypted at rest in the wrapped remote"

if you use rclone mount, then you can see the files names as decrypted.

what would be the gain with that?

how much data will you be uploading per month?

what is the speed of your internet connection?
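Those two numbers together determine how long a month's uploads take. Below is a back-of-the-envelope sketch. The 500 GB volume is only an example and does not come from this thread. The calculation assumes decimal units and a link running flat out, ignoring protocol overhead and provider limits.

```python
def upload_hours(gigabytes: float, megabits_per_second: float) -> float:
    """Idealised upload time in hours.

    Assumes decimal units (1 GB = 8,000 megabits) and a link running flat out,
    ignoring protocol overhead, provider throttling and disk speed.
    """
    megabits = gigabytes * 8 * 1000
    seconds = megabits / megabits_per_second
    return seconds / 3600


if __name__ == "__main__":
    # Example volume only; 150 Mbit/s and 25 Gbit/s are speeds mentioned in this thread.
    for mbps in (150, 25_000):
        print(f"500 GB at {mbps} Mbit/s: {upload_hours(500, mbps):.2f} h")
```

Under these assumptions, 500 GB takes about 7.4 hours at 150 Mbit/s and about 160 seconds at 25 Gbit/s.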

BTW, I'm probably not using my home Internet connection for uploads/downloads at all! Everything is in the cloud, on connections ranging from 25 Gbit down to 150 Mbps (150 Mbps at my home). I regularly work in the Xfce GUI environment of Ubuntu 18/20 on the server, with an NVMe SSD and AMD Z2! That's why it's so fast!

if you run rclone mount on an encrypted (crypt) remote, you can view the folder/file names using whatever file manager you choose.
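Mounting is one way to pick out individual files. Copying just the paths you need from the crypt remote is another, and in both cases only those files are decrypted. Below is a minimal sketch that builds both commands, assuming a crypt remote named `secret` and the paths shown (all hypothetical).

```python
import shlex
from typing import List


def mount_command(crypt_remote: str, mountpoint: str, read_only: bool = True) -> List[str]:
    """Mount a crypt remote so any file manager shows decrypted names."""
    cmd = ["rclone", "mount", f"{crypt_remote}:", mountpoint]
    if read_only:
        # Browsing only: refuse writes through the mount.
        cmd.append("--read-only")
    return cmd


def restore_command(crypt_remote: str, remote_path: str, local_dir: str) -> List[str]:
    """Copy (and thereby decrypt) a single file or folder instead of syncing everything."""
    # remote_path is the decrypted name, as shown in the mount or by `rclone ls`.
    return ["rclone", "copy", f"{crypt_remote}:{remote_path}", local_dir]


if __name__ == "__main__":
    # Hypothetical crypt remote "secret" and paths.
    print(shlex.join(mount_command("secret", "/mnt/secret")))
    print(shlex.join(restore_command("secret", "photos/2020/img.jpg", "./restore")))
```

On Windows, rclone mount relies on WinFsp and can mount to a drive letter such as `X:`. On Linux it needs FUSE.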

OK, thanks! Which keys do I really need to access my bucket with rclone? Does AWS S3 create the same kind of API key as DigitalOcean Spaces, or do I have to generate it via IAM? I really just want to know!
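For comparison with the Spaces setup: rclone's s3 backend asks for the same two fields for AWS, `access_key_id` and `secret_access_key`. On AWS that pair is normally an access key created through IAM, for example for a dedicated IAM user. Below is a minimal Python sketch of the resulting remote section. The remote name `aws`, the region `eu-central-1`, and the `DEEP_ARCHIVE` storage class are assumptions chosen for illustration.

```python
import configparser
import io


def aws_remote_config(name: str, region: str, access_key_id: str,
                      secret_access_key: str, storage_class: str = "DEEP_ARCHIVE") -> str:
    """Render an rclone s3 remote section for AWS S3 as INI text."""
    config = configparser.ConfigParser(interpolation=None)
    config[name] = {
        "type": "s3",
        "provider": "AWS",
        "env_auth": "false",
        # The IAM access key pair: same field names as for DigitalOcean Spaces.
        "access_key_id": access_key_id,
        "secret_access_key": secret_access_key,
        "region": region,
        # Objects uploaded through this remote go to this storage class.
        "storage_class": storage_class,
    }
    buf = io.StringIO()
    config.write(buf)
    return buf.getvalue()


if __name__ == "__main__":
    # Placeholder credentials and a hypothetical region.
    print(aws_remote_config("aws", "eu-central-1", "YOUR_ACCESS_KEY", "YOUR_SECRET_KEY"))
```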