What is the problem you are having with rclone?

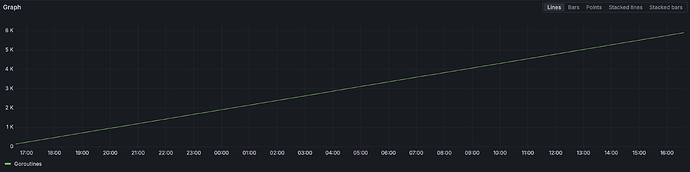

Monitoring my S3 rclone mount showed that the goroutine count keeps increasing without bound.

This can be seen by plotting the go_goroutines metric, here, roughly 24 hours after starting the mount:

Profiling using go tool pprof -dot http://localhost:5574/debug/pprof/goroutine gave me this diagram.

From the diagram, we can clearly see that averageLoop is the one goroutine that is kept from being deleted.

Running the tool several times over a period of time shows that the number of currently running goroutines keeps increasing.
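For anyone who wants to reproduce the comparison without the graph, here is a minimal sketch of how two snapshots could be compared. It assumes the text form of the profile (the same endpoint with `?debug=1` appended), whose first line is `goroutine profile: total N`. The function name `parseGoroutineTotal` and the sample numbers are made up for illustration and are not taken from my mount.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// parseGoroutineTotal extracts N from the "goroutine profile: total N" header
// found at the top of the text output of /debug/pprof/goroutine?debug=1.
// The function name is hypothetical, chosen for this sketch.
func parseGoroutineTotal(profile string) (int, error) {
	sc := bufio.NewScanner(strings.NewReader(profile))
	if !sc.Scan() {
		return 0, fmt.Errorf("empty profile")
	}
	var total int
	if _, err := fmt.Sscanf(sc.Text(), "goroutine profile: total %d", &total); err != nil {
		return 0, fmt.Errorf("unexpected header %q: %w", sc.Text(), err)
	}
	return total, nil
}

func main() {
	// Placeholder text standing in for two snapshots taken some time apart.
	// The numbers are invented, not measurements.
	before := "goroutine profile: total 42\n"
	after := "goroutine profile: total 57\n"

	b, err := parseGoroutineTotal(before)
	if err != nil {
		panic(err)
	}
	a, err := parseGoroutineTotal(after)
	if err != nil {
		panic(err)
	}
	fmt.Printf("goroutines grew by %d\n", a-b)
}
```

In practice the two strings would come from fetching the endpoint twice, for example a few hours apart.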

Reading the code, I suspect that the a.stop channel is sometimes not closed. This would explain why the goroutines are never freed.
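To illustrate the kind of leak I have in mind, here is a minimal sketch. It is not rclone's actual code: the `averager` type, its `loop` method and the `leaked` helper are hypothetical stand-ins that only mimic the general pattern of a background loop waiting on a stop channel.

```go
package main

import (
	"fmt"
	"runtime"
	"time"
)

// averager is a hypothetical stand-in, NOT rclone's real type.
type averager struct {
	stop chan struct{}
}

// newAverager starts the background loop, like a constructor would.
func newAverager() *averager {
	a := &averager{stop: make(chan struct{})}
	go a.loop()
	return a
}

// loop runs until the stop channel is closed.
func (a *averager) loop() {
	ticker := time.NewTicker(10 * time.Millisecond)
	defer ticker.Stop()
	for {
		select {
		case <-ticker.C:
			// periodic work would happen here
		case <-a.stop:
			return
		}
	}
}

// Close signals the loop to exit.
func (a *averager) Close() { close(a.stop) }

// leaked starts n averagers, closes them only if closeAll is true, and
// returns how many goroutines are still running above the starting count.
func leaked(n int, closeAll bool) int {
	base := runtime.NumGoroutine()
	for i := 0; i < n; i++ {
		a := newAverager()
		if closeAll {
			a.Close()
		}
	}
	// Give closed loops time to observe the stop signal and return.
	time.Sleep(200 * time.Millisecond)
	return runtime.NumGoroutine() - base
}

func main() {
	fmt.Println("leaked when closed:    ", leaked(10, true))
	fmt.Println("leaked when not closed:", leaked(10, false))
}
```

If any code path drops the object without closing the channel, the loop goroutine stays alive forever, which would match a steadily growing go_goroutines value.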

Run the command 'rclone version' and share the full output of the command.

rclone v1.69.3
- os/version: alpine 3.21.3 (64 bit)
- os/kernel: 5.15.0-140-generic (x86_64)
- os/type: linux
- os/arch: amd64
- go/version: go1.24.3
- go/linking: static
- go/tags: none

Which cloud storage system are you using? (eg Google Drive)

Amazon S3 Object Storage

The command you were trying to run (eg rclone copy /tmp remote:tmp)

rclone mount config-name:/my-bucket /data \
--config=/config/rclone.conf \
--uid=1000 \
--gid=1000 \
--cache-dir=/cache \
--use-mmap \
--allow-other \
--umask=002 \
--rc \
--rc-no-auth \
--rc-addr=:5574 \
--rc-enable-metrics \
--vfs-cache-mode writes \
--allow-non-empty

The rclone config contents with secrets removed.

[config-name]
type = s3
provider =
access_key_id =
secret_access_key =
region = fr-par
endpoint =
storage_class = STANDARD