@ladude626 Make sure you disable Scheduled Tasks in Plex, as they will scan the whole library and also perform deep analysis of all videos.

I tried google-drive-ocamlfuse a while ago; sadly, it didn’t have a fraction of the performance necessary. Perhaps it has been updated or further optimized for streaming since. Also, Google seems to have no issue with you using a lot of download bandwidth or accessing every file; that is, you can analyze every file several times a day without getting banned. The problem is that rclone asks Google for the contents of each folder in a separate request. Each request counts as a query, and if you use too many you seem to get a 24-hour ban.
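To get a feel for why a full library scan eats into the query quota, here’s a rough Python sketch (my own illustration, not how rclone actually counts anything). It assumes one listing request per folder, as described above, and simply counts the folders in a tree. Paged results for very large folders would add more requests, so treat the result as a lower bound. Run it against a local directory with the same layout rather than the mount itself, since walking the mount would trigger exactly the requests you’re trying to estimate.

```python
import os


def count_list_requests(root: str) -> int:
    """Estimate listing requests for one full traversal of `root`.

    Assumption: one listing request per directory (root included).
    Paging of large folders would add more, so this is a lower bound.
    """
    requests = 0
    for _dirpath, _dirnames, _filenames in os.walk(root):
        requests += 1  # one listing call for this directory
    return requests


def daily_requests(root: str, scans_per_day: int) -> int:
    """Listing requests per day if the whole tree is rescanned `scans_per_day` times."""
    return count_list_requests(root) * scans_per_day
```

For example, `daily_requests("/path/to/local/copy", 4)` would estimate the listing requests for four full scans a day (the path is a placeholder).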

You may find that you refresh your library one too many times, without analyzing any new or old files at all, and still get your account locked.

@Ajki Darn, too late: I got banned after a daily library update. I changed it under “Library” but forgot about the “Scheduled Tasks” section. It’s strange that you don’t get any notice of the ban. The drive is still mounted, but I get a 403 error on download. Uploads still work fine.

I seem to have a strange issue with rclone and Google Drive: it disconnects from Google and gives a “transport endpoint is not connected” error. It’s easy to fix; I just have to unmount and then remount via rclone. I need to figure out how to run a check via cron to see if it’s broken and, if it is, run the remount procedure.
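A minimal sketch of that cron check in Python, assuming a remote called `gdrive:` mounted at `/mnt/gdrive` (both placeholders, adjust to your setup). “Transport endpoint is not connected” is the text of the ENOTCONN error, which a FUSE mount returns once the process behind it has died, so the script lists the mount point and only remounts when it sees that specific error. `fusermount -u -z` does a lazy unmount; rclone’s `--allow-other` needs `user_allow_other` in `/etc/fuse.conf` if you don’t mount as root.

```python
import errno
import os
import subprocess

# Placeholders: replace with your own remote name and mount point.
REMOTE = "gdrive:"
MOUNTPOINT = "/mnt/gdrive"


def mount_is_stale(path, listdir=os.listdir):
    """Return True if listing `path` fails with ENOTCONN.

    ENOTCONN ("Transport endpoint is not connected") is what a FUSE
    mount reports after the process serving it has gone away.
    """
    try:
        listdir(path)
    except OSError as exc:
        return exc.errno == errno.ENOTCONN
    return False


def remount_commands(remote, mountpoint):
    """The manual fix as commands: lazy-unmount the dead mount, then mount again."""
    return [
        ["fusermount", "-u", "-z", mountpoint],
        ["rclone", "mount", remote, mountpoint, "--allow-other"],
    ]


def main():
    if not mount_is_stale(MOUNTPOINT):
        return
    unmount, mount = remount_commands(REMOTE, MOUNTPOINT)
    subprocess.run(unmount, check=False)
    # rclone mount stays in the foreground, so start it detached.
    subprocess.Popen(mount, start_new_session=True)


if __name__ == "__main__":
    main()
```

You could then run it every few minutes from cron, e.g. `*/5 * * * * python3 /path/to/check_mount.py` (script path hypothetical).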

Yeah, using node-gdrive-fuse I scan my library (about 55.6 TB) several times and it works fine. Google doesn’t seem to care at all about download usage or file access (unlike Amazon), but rclone’s queries for the same operations will definitely get you banned. Still, rclone is definitely better performance-wise; I will switch to rclone once/if the ban issue is fixed.

I’m getting banned repeatedly with rclone and Google Drive. I couldn’t get google-drive-ocamlfuse to work on a seedbox. I’ll definitely give node-gdrive-fuse a try.

EDIT: It sure looks like a daily API access limit issue. Look at “403: Daily Limit Exceeded” here: https://developers.google.com/drive/v3/web/handle-errors. Any workaround, @ncw?
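My reading of that page is that the 403s carry different `reason` values: `userRateLimitExceeded` and `rateLimitExceeded` are short-term limits the page suggests handling with exponential backoff, whereas `dailyLimitExceeded` won’t clear by retrying sooner. A hypothetical client-side sketch (not rclone code) that tells them apart from the JSON error body and computes a capped backoff delay:

```python
import json
import random

# Reasons as listed on the Drive "handle errors" page (my reading of it).
BACKOFF_REASONS = {"userRateLimitExceeded", "rateLimitExceeded"}
DAILY_REASONS = {"dailyLimitExceeded"}


def error_reasons(body: str) -> set:
    """Extract the `reason` strings from a Drive API JSON error body."""
    data = json.loads(body)
    return {e.get("reason", "") for e in data.get("error", {}).get("errors", [])}


def classify_403(body: str) -> str:
    reasons = error_reasons(body)
    if reasons & DAILY_REASONS:
        return "daily"    # retrying soon won't help; wait for the quota to reset
    if reasons & BACKOFF_REASONS:
        return "backoff"  # short-term limit; retry with exponential backoff
    return "other"


def backoff_delay(attempt: int, cap: float = 64.0) -> float:
    """Exponential backoff with jitter: 2**attempt seconds plus up to 1 s, capped."""
    return min(cap, 2 ** attempt + random.random())
```

If what we’re hitting really is `dailyLimitExceeded`, backoff alone won’t avoid the ban; the only lever would be making fewer requests in the first place.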

To make ocamlfuse visible to Plex, you have to use -o allow_other.

This is working for me.

I got google-drive-ocamlfuse working now, but performance is slow compared to rclone. I couldn’t get node-gdrive-fuse working at all, but I don’t have much experience running JavaScript code on Linux.

Rclone still seems to give the best performance with Google Drive, but I’m still getting banned daily. I’m still using ACD for everyday use.

Maybe a solution could be to run ocamlfuse for Plex maintenance and then rclone for watching.

I’m trying to see if I can use rclone for watching while I have the ban. If this works, I will try mounting with ocamlfuse during the maintenance period and then remounting with rclone during the day.
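The decision part of that plan could look like this Python sketch, assuming a maintenance window of 02:00 to 05:00 (placeholder values; match them to the scheduled-task window you set in Plex). It only picks which tool should hold the mount; the actual unmount/remount is left out.

```python
from datetime import time

# Placeholder window; set it to match Plex's scheduled-task hours.
MAINT_START = time(2, 0)
MAINT_END = time(5, 0)


def in_window(now: time, start: time, end: time) -> bool:
    """True if `now` falls in [start, end); handles windows that cross midnight."""
    if start <= end:
        return start <= now < end
    return now >= start or now < end


def backend_for(now: time, start: time = MAINT_START, end: time = MAINT_END) -> str:
    """ocamlfuse while Plex maintenance scans run, rclone for playback otherwise."""
    return "google-drive-ocamlfuse" if in_window(now, start, end) else "rclone"
```

Handling a window that wraps past midnight matters if you schedule maintenance to start late in the evening.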

Let’s see if it works.

Hi, could those of you who got banned by Google or ACD because of Plex scanning try Emby instead? Try it with only the “Download artwork and metadata from the internet” option enabled in the folder settings, and disable the “Scan media library” scheduled task. I tried it with about 200 movies on ACD and it seems fine. I would really like to know the results from people who have thousands of movies on ACD.

Last night I disabled all scheduled tasks in Plex, but today I found my daily ban again.

I really don’t know how I can use Google with Plex.

Any other idea?

I’m also a little bit lost here.

With ACD I get approx. 5 MB/s (Google: 60 MB/s), but Google, on the other hand, gets banned every day.

Also, ACD has a file size limit of 50 GB, which some 4K movies exceed.

I don’t really know why ACD is so slow for me… maybe the data center is too far away from the VPS location (Frankfurt). I’m using the mount command discussed here in combination with encfs.

So if we could avoid the Google ban (caching, throttling, etc.), this would be the perfect solution for me.

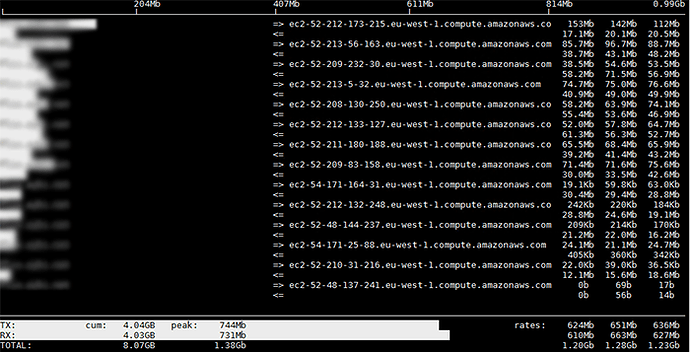

Make sure you use amazon.de and not amazon.com. My current speed (30 transfers):

2017/01/13 07:03:02 Transferred: 5077.410 GBytes (89.425 MBytes/s) Errors: 5 Checks: 29644 Transferred: 2997 Elapsed time: 16h9m1.1s
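Quick sanity check on that log line: 16h9m1.1s is 58141.1 s, and 5077.410 GBytes over that time only works out to 89.425 MBytes/s if rclone counts 1 GByte as 1024 MBytes (with 1000 it would be about 87.3). A small Python sketch that does the arithmetic; the parsing only targets this one line format:

```python
import re

LOG = ("2017/01/13 07:03:02 Transferred: 5077.410 GBytes (89.425 MBytes/s) "
       "Errors: 5 Checks: 29644 Transferred: 2997 Elapsed time: 16h9m1.1s")


def elapsed_seconds(text: str) -> float:
    """Convert an elapsed time such as '16h9m1.1s' to seconds."""
    m = re.fullmatch(r"(?:(\d+)h)?(?:(\d+)m)?(?:([\d.]+)s)?", text)
    if m is None:
        raise ValueError(f"unrecognised duration: {text!r}")
    h, mins, s = (float(g) if g else 0.0 for g in m.groups())
    return h * 3600 + mins * 60 + s


def average_rate_mbytes(log_line: str) -> float:
    """Average speed in MBytes/s, assuming 1 GByte = 1024 MBytes."""
    gbytes = float(re.search(r"Transferred: ([\d.]+) GBytes", log_line).group(1))
    elapsed = elapsed_seconds(re.search(r"Elapsed time: (\S+)", log_line).group(1))
    return gbytes * 1024 / elapsed
```

Split over the 30 parallel transfers, that’s roughly 3 MBytes/s per transfer.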

How do I see whether I’m using .de or .com, and how do I switch? On the web page, the URL is https://www.amazon.de/clouddrive

That’s it, you have a DE drive. It could be that your ISP’s peering with Amazon is just bad.

Try speedtest-cli to check what your bandwidth is.

Here’s my speedtest-cli result from my VPS:

Retrieving speedtest.net configuration...

Testing from DigitalOcean...

Retrieving speedtest.net server list...

Selecting best server based on ping...

Hosted by Tele2 (Frankfurt) [0.00 km]: 4.765 ms

Testing download speed................................................................................

Download: 1965.89 Mbit/s

Testing upload speed....................................................................................................

Upload: 941.63 Mbit/s

Do you reach the same speed when you mount your ACD drive and copy one file from the ACD mount to the local filesystem?

No, with one file it’s less; it can drop to as low as 5 MB/s.

Check where you are connecting to. Here is my iftop list of Amazon connections:

Ah OK, I thought you would get the same speed when accessing the mount. 5 MB/s would be sufficient for most media files, but when good 4K movies with a 60 Mbit/s bitrate show up one day (currently I see no difference from 1080p, to be honest), 5 MB/s won’t be enough. On the other hand, ACD doesn’t allow 50+ GB files anyway.
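Putting numbers on that (decimal units, video bitrate only, and assuming a 2-hour film for the size estimate):

```latex
\begin{aligned}
60\ \text{Mbit/s} \div 8 &= 7.5\ \text{MB/s} > 5\ \text{MB/s} \\
7.5\ \text{MB/s} \times 7200\ \text{s} &= 54\,000\ \text{MB} = 54\ \text{GB} > 50\ \text{GB}
\end{aligned}
```

So streaming at that bitrate needs at least 7.5 MB/s sustained, and a two-hour file at that bitrate would already be over ACD’s 50 GB limit.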

iftop also shows eu-west for me.

Is there maybe a maximum speed per account? I have another ISP connection running that has ACD mounted, encrypts everything with encfs and re-uploads it. That has been running constantly at about 15 MB/s for the last week.

If I access ACD from different locations in parallel, should I reuse the same rclone.conf file or should I generate a new token?

I’m using the same .rclone.conf file on all my machines.

Btw, why are you switching to encfs? The main reasons I’m switching to crypt are:

- ability to use filters

- heavily reduced disk I/O, since with encfs you need to encrypt all files on disk

- a much cleaner setup, without the need for an ACD mount, an encrypted folder, an unencrypted folder, etc.


@ncw is really active on the rclone project and always improving and optimizing it… I can’t wait for the day when we also get a local cache and a fully working mount with the ability to copy/edit files directly.

P.S. Re-encrypting my whole library is a real pain in the ass: 25 TB encrypted, a bit less than 15 TB to go.

I’ve read in several posts here that crypt is slower than encfs; isn’t that the case?

Also, I want to be independent of rclone in case another tool pops up that fits my use case better.

I don’t need to encrypt all files on disk, since I use encfs --reverse.

And I’m also looking forward to local caches… they would hopefully eliminate the Google Drive bans.