24-hour bans don't happen for 'no good reason'; something always causes them.

I can't speak to Drive File Stream because I don't use it.

If you played a file with a version of rclone from before June 2018 and didn't use the cache backend, that would cause this issue with rclone in particular.

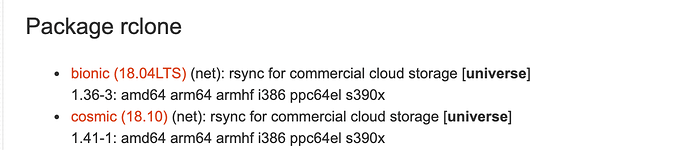

There are many ways to get the old package by not downloading the latest version from the site. For example, Ubuntu has versions in their PPA that are ancient:

If you have Drive File Stream questions, I'm sure they can help out with those. We're happy to answer any rclone questions here, and I can especially help with Plex, since that's my use case.

I've got ~60 TB of encrypted data and switched over to rclone back in June 2018, once the new release hit. That's almost 10 months now, and I have never seen a 24-hour ban or any major API hits.