What is the problem you are having with rclone?

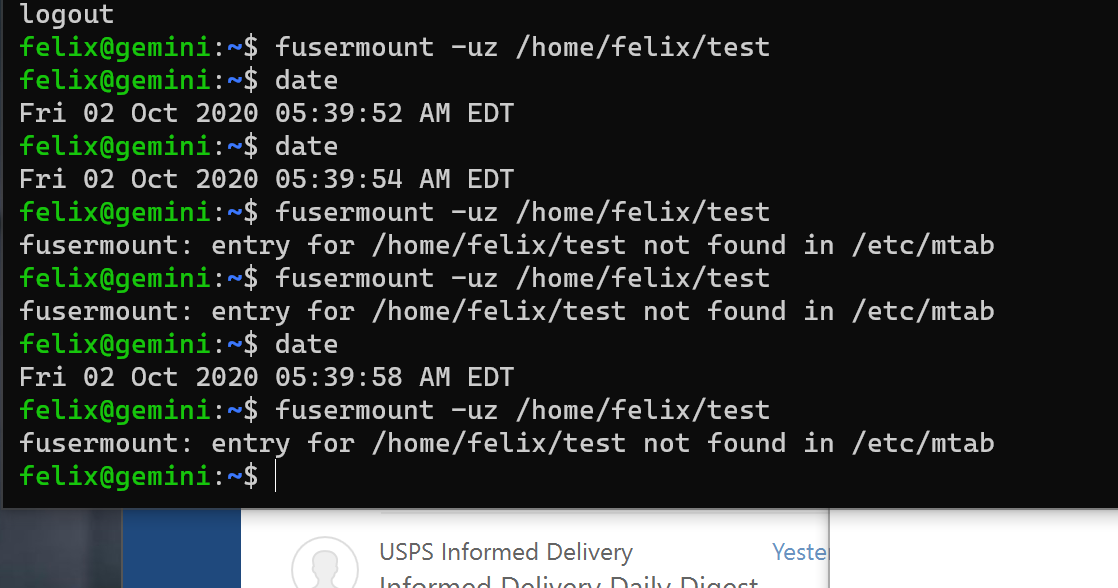

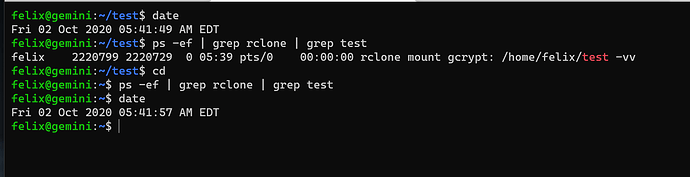

When I run systemctl restart or stop on the service, rclone does not stop properly: the process is left running in a stale state on the machine, and the mounted file system is not unmounted. After that, I cannot restart the systemd service because it is in a broken state.

My belief is that rclone is not stopping properly, and that either fusermount cannot forcefully unmount the file system/remote or the remote control port is not being closed, which leaves the process hung. From the looks of it, this happens when a daemon process keeps respawning, or when rclone gets into a weird state.
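Both suspicions can be checked right after a stop. The following is only a minimal sketch: the helper names `is_mounted` and `is_running` are made up for illustration, and the mount point and PID are the ones that appear in the status output further down.

```shell
#!/bin/sh
# Sketch only: helper names are hypothetical, not part of rclone or systemd.

# Succeeds if $1 is listed as a mount point in the mounts table $2
# (defaults to /proc/self/mounts on Linux).
is_mounted() {
    awk -v mp="$1" '$2 == mp { found = 1 } END { exit !found }' "${2:-/proc/self/mounts}"
}

# Succeeds if a process with PID $1 still exists (its /proc entry is present).
is_running() {
    [ -d "/proc/$1" ]
}

MOUNT=/mnt/rclone/gebooks/books   # mount point from the unit file
PID=2191                          # Main PID reported by systemctl status

if is_mounted "$MOUNT"; then
    echo "still mounted: $MOUNT"
else
    echo "not mounted: $MOUNT"
fi

if is_running "$PID"; then
    echo "PID $PID is still running"
else
    echo "PID $PID is gone"
fi
```

If the mount is reported as gone while the PID is still running, that would be consistent with a lazy unmount having detached the path without the rclone process exiting.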

What is your rclone version (output from rclone version)

rclone version

rclone v1.53.1

- os/arch: linux/amd64

- go version: go1.15

Which OS you are using and how many bits (eg Windows 7, 64 bit)

Ubuntu 20.04.1

Which cloud storage system are you using? (eg Google Drive)

Google Drive File Stream - Shared Drives

The command you were trying to run (eg rclone copy /tmp remote:tmp)

The rclone config contents with secrets removed.

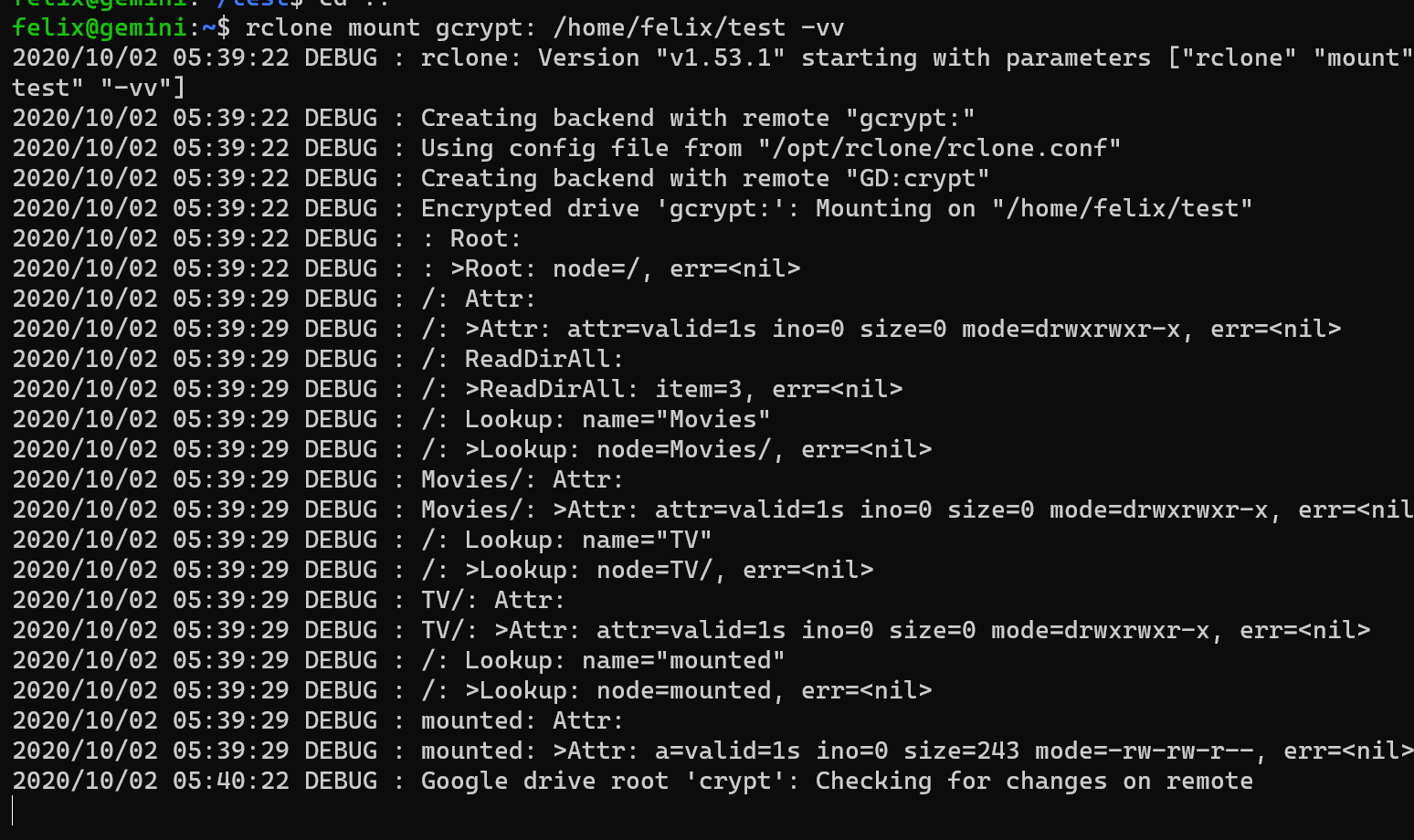

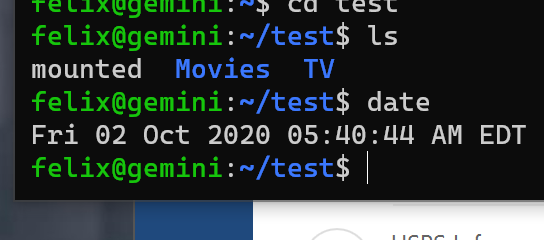

A log from the command with the -vv flag

andrew@nas:/etc/systemd/system$ sudo systemctl status rclone-ebooks-crypt.service

● rclone-ebooks-crypt.service - RClone Service

Loaded: loaded (/etc/systemd/system/rclone-ebooks-crypt.service; disabled; vendor preset: enabled)

Active: active (running) since Thu 2020-10-01 15:40:00 PDT; 2min 32s ago

Main PID: 2191 (rclone)

Tasks: 12 (limit: 57733)

Memory: 42.0M

CGroup: /system.slice/rclone-ebooks-crypt.service

└─2191 /usr/bin/rclone mount gdriveebooks-crypt: /mnt/rclone/gebooks/books --allow-other --buffer-size 256M --dir-cache-time 1000h --log-level INFO --log-file /var/log/rclone/books-mount.log --poll-interval 15s --timeout 1>

Oct 01 15:39:58 nas systemd[1]: Starting RClone Service...

Oct 01 15:40:00 nas systemd[1]: Started RClone Service.

andrew@nas:/etc/systemd/system$ sudo ps aux | grep 2191

andrew 2191 0.1 0.1 742824 58880 ? Ssl 15:39 0:00 /usr/bin/rclone mount gdriveebooks-crypt: /mnt/rclone/gebooks/books --allow-other --buffer-size 256M --dir-cache-time 1000h --log-level INFO --log-file /var/log/rclone/books-mount.log --poll-interval 15s --timeout 1h --umask 002 --rc --rc-addr 127.0.0.1:5584

andrew 32976 0.0 0.0 6432 2612 pts/0 S+ 15:42 0:00 grep --color=auto 2191

andrew@nas:/etc/systemd/system$ sudo systemctl stop rclone-ebooks-crypt

andrew@nas:/etc/systemd/system$ sudo ps aux | grep 2191

andrew 2191 0.1 0.1 742824 58880 ? Ssl 15:39 0:00 /usr/bin/rclone mount gdriveebooks-crypt: /mnt/rclone/gebooks/books --allow-other --buffer-size 256M --dir-cache-time 1000h --log-level INFO --log-file /var/log/rclone/books-mount.log --poll-interval 15s --timeout 1h --umask 002 --rc --rc-addr 127.0.0.1:5584

andrew 36470 0.0 0.0 6432 2500 pts/0 S+ 15:42 0:00 grep --color=auto 2191

andrew@nas:/etc/systemd/system$ sudo journalctl -u rclone-ebooks-crypt.service

-- Logs begin at Sun 2020-09-27 11:31:42 PDT, end at Thu 2020-10-01 15:54:31 PDT. --

Sep 27 11:32:17 nas systemd[1]: Stopping RClone Service...

Sep 27 11:32:17 nas systemd[1]: rclone-ebooks-crypt.service: Succeeded.

Sep 27 11:32:17 nas systemd[1]: Stopped RClone Service.

-- Reboot --

Sep 27 11:32:36 nas systemd[1]: Starting RClone Service...

Sep 27 11:32:37 nas systemd[1]: Started RClone Service.

Oct 01 15:33:45 nas systemd[1]: Stopping RClone Service...

Oct 01 15:33:45 nas systemd[1]: rclone-ebooks-crypt.service: Succeeded.

Oct 01 15:33:45 nas systemd[1]: Stopped RClone Service.

Oct 01 15:33:45 nas systemd[1]: rclone-ebooks-crypt.service: Found left-over process 2036 (rclone) in control group while starting unit. Ignoring.

Oct 01 15:33:45 nas systemd[1]: This usually indicates unclean termination of a previous run, or service implementation deficiencies.

Oct 01 15:33:45 nas systemd[1]: Starting RClone Service...

Oct 01 15:33:45 nas systemd[1]: rclone-ebooks-crypt.service: Main process exited, code=exited, status=1/FAILURE

Oct 01 15:33:45 nas systemd[1]: rclone-ebooks-crypt.service: Failed with result 'exit-code'.

Oct 01 15:33:45 nas systemd[1]: Failed to start RClone Service.

Oct 01 15:33:50 nas systemd[1]: rclone-ebooks-crypt.service: Scheduled restart job, restart counter is at 1.

Oct 01 15:33:50 nas systemd[1]: Stopped RClone Service.

Oct 01 15:33:50 nas systemd[1]: rclone-ebooks-crypt.service: Found left-over process 2036 (rclone) in control group while starting unit. Ignoring.

Oct 01 15:33:50 nas systemd[1]: This usually indicates unclean termination of a previous run, or service implementation deficiencies.

Oct 01 15:33:50 nas systemd[1]: Starting RClone Service...

Oct 01 15:33:50 nas systemd[1]: rclone-ebooks-crypt.service: Main process exited, code=exited, status=1/FAILURE

Oct 01 15:33:50 nas systemd[1]: rclone-ebooks-crypt.service: Failed with result 'exit-code'.

Oct 01 15:33:50 nas systemd[1]: Failed to start RClone Service.

Oct 01 15:33:55 nas systemd[1]: rclone-ebooks-crypt.service: Scheduled restart job, restart counter is at 2.

Oct 01 15:33:55 nas systemd[1]: Stopped RClone Service.

Oct 01 15:33:55 nas systemd[1]: rclone-ebooks-crypt.service: Found left-over process 2036 (rclone) in control group while starting unit. Ignoring.

Oct 01 15:33:55 nas systemd[1]: This usually indicates unclean termination of a previous run, or service implementation deficiencies.

Oct 01 15:33:55 nas systemd[1]: Starting RClone Service...

Oct 01 15:33:56 nas systemd[1]: rclone-ebooks-crypt.service: Main process exited, code=exited, status=1/FAILURE

Oct 01 15:33:56 nas systemd[1]: rclone-ebooks-crypt.service: Failed with result 'exit-code'.

Oct 01 15:33:56 nas systemd[1]: Failed to start RClone Service.

Oct 01 15:34:01 nas systemd[1]: rclone-ebooks-crypt.service: Scheduled restart job, restart counter is at 3.

Oct 01 15:34:01 nas systemd[1]: Stopped RClone Service.

Oct 01 15:34:01 nas systemd[1]: rclone-ebooks-crypt.service: Found left-over process 2036 (rclone) in control group while starting unit. Ignoring.

Oct 01 15:34:01 nas systemd[1]: This usually indicates unclean termination of a previous run, or service implementation deficiencies.

Oct 01 15:34:01 nas systemd[1]: Starting RClone Service...

Oct 01 15:34:01 nas systemd[1]: rclone-ebooks-crypt.service: Main process exited, code=exited, status=1/FAILURE

Oct 01 15:34:01 nas systemd[1]: rclone-ebooks-crypt.service: Failed with result 'exit-code'.

Oct 01 15:34:01 nas systemd[1]: Failed to start RClone Service.

Oct 01 15:34:06 nas systemd[1]: rclone-ebooks-crypt.service: Scheduled restart job, restart counter is at 4.

Oct 01 15:34:06 nas systemd[1]: Stopped RClone Service.

Oct 01 15:34:06 nas systemd[1]: rclone-ebooks-crypt.service: Found left-over process 2036 (rclone) in control group while starting unit. Ignoring.

Oct 01 15:34:06 nas systemd[1]: This usually indicates unclean termination of a previous run, or service implementation deficiencies.

Oct 01 15:34:06 nas systemd[1]: Starting RClone Service...

Oct 01 15:34:06 nas systemd[1]: rclone-ebooks-crypt.service: Main process exited, code=exited, status=1/FAILURE

Oct 01 15:34:06 nas systemd[1]: rclone-ebooks-crypt.service: Failed with result 'exit-code'.

Oct 01 15:34:06 nas systemd[1]: Failed to start RClone Service.

Oct 01 15:34:11 nas systemd[1]: rclone-ebooks-crypt.service: Scheduled restart job, restart counter is at 5.

Oct 01 15:34:11 nas systemd[1]: Stopped RClone Service.

Oct 01 15:34:11 nas systemd[1]: rclone-ebooks-crypt.service: Found left-over process 2036 (rclone) in control group while starting unit. Ignoring.

Oct 01 15:34:11 nas systemd[1]: This usually indicates unclean termination of a previous run, or service implementation deficiencies.

Oct 01 15:34:11 nas systemd[1]: Starting RClone Service...

Oct 01 15:34:11 nas systemd[1]: rclone-ebooks-crypt.service: Main process exited, code=exited, status=1/FAILURE

Oct 01 15:34:11 nas systemd[1]: rclone-ebooks-crypt.service: Failed with result 'exit-code'.

Oct 01 15:34:11 nas systemd[1]: Failed to start RClone Service.

Oct 01 15:34:16 nas systemd[1]: rclone-ebooks-crypt.service: Scheduled restart job, restart counter is at 6.

Oct 01 15:34:16 nas systemd[1]: Stopped RClone Service.

Oct 01 15:34:16 nas systemd[1]: rclone-ebooks-crypt.service: Found left-over process 2036 (rclone) in control group while starting unit. Ignoring.

Oct 01 15:34:16 nas systemd[1]: This usually indicates unclean termination of a previous run, or service implementation deficiencies.

Oct 01 15:34:16 nas systemd[1]: Starting RClone Service...

Oct 01 15:34:17 nas systemd[1]: rclone-ebooks-crypt.service: Main process exited, code=exited, status=1/FAILURE

Oct 01 15:34:17 nas systemd[1]: rclone-ebooks-crypt.service: Failed with result 'exit-code'.

Oct 01 15:34:17 nas systemd[1]: Failed to start RClone Service.

(Repeating lines)

Oct 01 15:39:06 nas systemd[1]: Starting RClone Service...

Oct 01 15:39:06 nas systemd[1]: rclone-ebooks-crypt.service: Main process exited, code=exited, status=1/FAILURE

Oct 01 15:39:06 nas systemd[1]: rclone-ebooks-crypt.service: Failed with result 'exit-code'.

Oct 01 15:39:06 nas systemd[1]: Failed to start RClone Service.

Oct 01 15:39:11 nas systemd[1]: rclone-ebooks-crypt.service: Scheduled restart job, restart counter is at 63.

Oct 01 15:39:11 nas systemd[1]: Stopped RClone Service.

Oct 01 15:39:11 nas systemd[1]: rclone-ebooks-crypt.service: Found left-over process 2036 (rclone) in control group while starting unit. Ignoring.

Oct 01 15:39:11 nas systemd[1]: This usually indicates unclean termination of a previous run, or service implementation deficiencies.

Oct 01 15:39:11 nas systemd[1]: Starting RClone Service...

Oct 01 15:39:11 nas systemd[1]: rclone-ebooks-crypt.service: Main process exited, code=exited, status=1/FAILURE

Oct 01 15:39:11 nas systemd[1]: rclone-ebooks-crypt.service: Failed with result 'exit-code'.

Oct 01 15:39:11 nas systemd[1]: Failed to start RClone Service.

Oct 01 15:39:16 nas systemd[1]: rclone-ebooks-crypt.service: Scheduled restart job, restart counter is at 64.

Oct 01 15:39:16 nas systemd[1]: Stopped RClone Service.

Oct 01 15:39:16 nas systemd[1]: rclone-ebooks-crypt.service: Found left-over process 2036 (rclone) in control group while starting unit. Ignoring.

Oct 01 15:39:16 nas systemd[1]: This usually indicates unclean termination of a previous run, or service implementation deficiencies.

Oct 01 15:39:16 nas systemd[1]: Starting RClone Service...

Oct 01 15:39:16 nas systemd[1]: rclone-ebooks-crypt.service: Main process exited, code=exited, status=1/FAILURE

Oct 01 15:39:16 nas systemd[1]: rclone-ebooks-crypt.service: Failed with result 'exit-code'.

Oct 01 15:39:16 nas systemd[1]: Failed to start RClone Service.

Oct 01 15:39:21 nas systemd[1]: rclone-ebooks-crypt.service: Scheduled restart job, restart counter is at 65.

Oct 01 15:39:21 nas systemd[1]: Stopped RClone Service.

Oct 01 15:39:21 nas systemd[1]: rclone-ebooks-crypt.service: Found left-over process 2036 (rclone) in control group while starting unit. Ignoring.

Oct 01 15:39:21 nas systemd[1]: This usually indicates unclean termination of a previous run, or service implementation deficiencies.

Oct 01 15:39:21 nas systemd[1]: Starting RClone Service...

Oct 01 15:39:21 nas systemd[1]: rclone-ebooks-crypt.service: Main process exited, code=exited, status=1/FAILURE

Oct 01 15:39:21 nas systemd[1]: rclone-ebooks-crypt.service: Failed with result 'exit-code'.

Oct 01 15:39:21 nas systemd[1]: Failed to start RClone Service.

Oct 01 15:39:27 nas systemd[1]: rclone-ebooks-crypt.service: Scheduled restart job, restart counter is at 66.

Oct 01 15:39:27 nas systemd[1]: Stopped RClone Service.

Oct 01 15:39:27 nas systemd[1]: rclone-ebooks-crypt.service: Found left-over process 2036 (rclone) in control group while starting unit. Ignoring.

Oct 01 15:39:27 nas systemd[1]: This usually indicates unclean termination of a previous run, or service implementation deficiencies.

Oct 01 15:39:27 nas systemd[1]: Starting RClone Service...

Oct 01 15:39:27 nas systemd[1]: rclone-ebooks-crypt.service: Main process exited, code=exited, status=1/FAILURE

Oct 01 15:39:27 nas systemd[1]: rclone-ebooks-crypt.service: Failed with result 'exit-code'.

Oct 01 15:39:27 nas systemd[1]: Failed to start RClone Service.

Oct 01 15:39:32 nas systemd[1]: rclone-ebooks-crypt.service: Stop job pending for unit, delaying automatic restart.

Oct 01 15:39:37 nas systemd[1]: rclone-ebooks-crypt.service: Stop job pending for unit, delaying automatic restart.

Oct 01 15:39:37 nas systemd[1]: rclone-ebooks-crypt.service: Got notification message from PID 2036, but reception only permitted for main PID which is currently not known

Oct 01 15:39:38 nas systemd[1]: Stopped RClone Service.

-- Reboot --

Oct 01 15:39:58 nas systemd[1]: Starting RClone Service...

Oct 01 15:40:00 nas systemd[1]: Started RClone Service.

Oct 01 15:42:51 nas systemd[1]: Stopping RClone Service...

Oct 01 15:42:51 nas systemd[1]: rclone-ebooks-crypt.service: Succeeded.

Oct 01 15:42:51 nas systemd[1]: Stopped RClone Service.

rclone-ebooks-crypt.service

[Unit]

Description=RClone Service

Wants=network-online.target

Before=docker.service

After=network-online.target

[Service]

Type=notify

Environment=RCLONE_CONFIG=/home/andrew/.config/rclone/rclone.conf

KillMode=none

RestartSec=5

ExecStart=/usr/bin/rclone mount gdriveebooks-crypt: /mnt/rclone/gebooks/books \

--allow-other \

--buffer-size 256M \

--dir-cache-time 1000h \

--log-level INFO \

--log-file /var/log/rclone/books-mount.log \

--poll-interval 15s \

--timeout 1h \

--umask 002 \

--rc \

--rc-addr 127.0.0.1:5584

ExecStop=/bin/fusermount -uz /mnt/rclone/gebooks/books

Restart=on-failure

User=andrew

Group=andrew

[Install]

WantedBy=multi-user.target
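One thing that may be worth testing against this unit: with `KillMode=none`, systemd runs only the `ExecStop` command and does not kill any remaining processes, and `fusermount -uz` is a lazy unmount, so the rclone process can outlive the stop. The sketch below writes a drop-in that switches back to `KillMode=control-group`. It rests on the unconfirmed assumption that `KillMode=none` is what leaves the process behind; the function name `write_dropin` and the 30 second timeout are illustrative choices, not recommendations from the rclone or systemd projects.

```shell
#!/bin/sh
# Sketch only: write_dropin is a hypothetical helper, and the values below
# are assumptions to experiment with, not a confirmed fix.

# Writes an override.conf drop-in into directory $1.
write_dropin() {
    dir="$1"
    mkdir -p "$dir" || return 1
    cat > "$dir/override.conf" <<'EOF'
[Service]
# Let systemd signal whatever is left in the unit's control group
# once ExecStop has finished.
KillMode=control-group
# Upper bound on how long a stop may take before systemd escalates.
TimeoutStopSec=30
EOF
}

# Example (as root), followed by a reload so systemd picks up the change:
#   write_dropin /etc/systemd/system/rclone-ebooks-crypt.service.d
#   systemctl daemon-reload
```

A drop-in is used rather than editing the unit in place so the experiment can be undone by deleting a single file.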

Log file: /var/log/rclone/books-mount.log

2020/10/01 15:39:59 NOTICE: Serving remote control on http://127.0.0.1:5563/

2020/10/01 15:39:59 NOTICE: Serving remote control on http://127.0.0.1:5584/

2020/10/01 15:40:00 NOTICE: Serving remote control on http://127.0.0.1:5563/

2020/10/01 15:40:05 Failed to start remote control: start server failed: listen tcp 127.0.0.1:5563: bind: address already in use

2020/10/01 15:40:10 Failed to start remote control: start server failed: listen tcp 127.0.0.1:5563: bind: address already in use

2020/10/01 15:40:15 Failed to start remote control: start server failed: listen tcp 127.0.0.1:5563: bind: address already in use

2020/10/01 15:40:20 Failed to start remote control: start server failed: listen tcp 127.0.0.1:5563: bind: address already in use

2020/10/01 15:40:26 Failed to start remote control: start server failed: listen tcp 127.0.0.1:5563: bind: address already in use

2020/10/01 15:40:31 Failed to start remote control: start server failed: listen tcp 127.0.0.1:5563: bind: address already in use

2020/10/01 15:40:36 Failed to start remote control: start server failed: listen tcp 127.0.0.1:5563: bind: address already in use

2020/10/01 15:40:41 Failed to start remote control: start server failed: listen tcp 127.0.0.1:5563: bind: address already in use

2020/10/01 15:40:47 Failed to start remote control: start server failed: listen tcp 127.0.0.1:5563: bind: address already in use

2020/10/01 15:40:52 Failed to start remote control: start server failed: listen tcp 127.0.0.1:5563: bind: address already in use

2020/10/01 15:40:57 Failed to start remote control: start server failed: listen tcp 127.0.0.1:5563: bind: address already in use

2020/10/01 15:41:02 Failed to start remote control: start server failed: listen tcp 127.0.0.1:5563: bind: address already in use

2020/10/01 15:41:08 Failed to start remote control: start server failed: listen tcp 127.0.0.1:5563: bind: address already in use

2020/10/01 15:41:13 Failed to start remote control: start server failed: listen tcp 127.0.0.1:5563: bind: address already in use

2020/10/01 15:41:18 Failed to start remote control: start server failed: listen tcp 127.0.0.1:5563: bind: address already in use

2020/10/01 15:41:23 Failed to start remote control: start server failed: listen tcp 127.0.0.1:5563: bind: address already in use

2020/10/01 15:41:29 Failed to start remote control: start server failed: listen tcp 127.0.0.1:5563: bind: address already in use

2020/10/01 15:41:34 Failed to start remote control: start server failed: listen tcp 127.0.0.1:5563: bind: address already in use

2020/10/01 15:41:39 Failed to start remote control: start server failed: listen tcp 127.0.0.1:5563: bind: address already in use

2020/10/01 15:41:44 Failed to start remote control: start server failed: listen tcp 127.0.0.1:5563: bind: address already in use

2020/10/01 15:41:49 Failed to start remote control: start server failed: listen tcp 127.0.0.1:5563: bind: address already in use

2020/10/01 15:41:55 Failed to start remote control: start server failed: listen tcp 127.0.0.1:5563: bind: address already in use

2020/10/01 15:42:00 Failed to start remote control: start server failed: listen tcp 127.0.0.1:5563: bind: address already in use

2020/10/01 15:42:05 Failed to start remote control: start server failed: listen tcp 127.0.0.1:5563: bind: address already in use
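Note that the bind failures above are for 127.0.0.1:5563, while this unit passes `--rc-addr 127.0.0.1:5584`, so the failing lines may come from a different rclone instance writing to the same log file. To see which addresses are affected, the failures can be tallied per address. This is a small sketch; `count_bind_failures` is a made-up helper name, and it only relies on the log line format shown above.

```shell
#!/bin/sh
# Sketch only: count_bind_failures is a hypothetical helper.

# Prints "<count> <address:port>" for every address that failed to bind
# in the rclone log file $1.
count_bind_failures() {
    grep 'bind: address already in use' "$1" \
        | sed -n 's/.*listen tcp \([^ ]*\): bind: address already in use.*/\1/p' \
        | sort | uniq -c | awk '{ print $1, $2 }'
}

LOG=/var/log/rclone/books-mount.log   # log file from the unit above
if [ -r "$LOG" ]; then
    count_bind_failures "$LOG"
fi
```

Any address in the output that does not match the unit's own `--rc-addr` would point at a second service sharing the log.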

rclone.conf (secrets removed)

[gdriveebooks]

type = drive

client_id = OMIT.apps.googleusercontent.com

client_secret = OMIT

token = {"access_token":"","token_type":"Bearer","refresh_token":"","expiry":"2020-10-01T16:42:29.277101958-07:00"}

team_drive = DriveFolderID

[gdriveebooks-crypt]

type = crypt

remote = gdriveebooks:/eBooks/

password = OMIT

password2 = OMIT1