HeadStew

May 24, 2021, 1:31am

1

vfs cache: failed to download: vfs reader: failed to write to cache file: unexpected end of JSON input

Followed by "too many open files" shortly after then not responding until restarted

rclone version)

root@pepe:/home/chris# rclone version

os/version: debian 10.9 (64 bit)

os/kernel: 4.19.0-16-amd64 (x86_64)

os/type: linux

os/arch: amd64

go/version: go1.16.4

go/linking: static

go/tags: none

Debian 10 64-Bit

Dropbox, through a Crypt remote

rclone copy /tmp remote:tmp)

[Unit]

Description=Dropbox Mount

Wants=network-online.target

After=network-online.target

[Service]

Type=notify

Environment=RCLONE_CONFIG=/opt/rclone/rclone.conf

KillMode=none

RestartSec=5

ExecStart=/usr/bin/rclone mount media: /mnt/remote/media \

--allow-other \

--dir-cache-time 1000h \

--log-level INFO \

--log-file /mnt/remote/media.log \

--poll-interval 15s \

--umask 002 \

--uid 1001 \

--gid 1001 \

--dir-perms=0777 \

--file-perms=0777 \

--user-agent RandomString \

--rc \

--rc-addr :5572 \

--rc-no-auth \

--cache-dir=/mnt/remote/cache/media \

--vfs-cache-mode full \

--vfs-cache-max-size 16G \

--vfs-cache-max-age 336h \

--bwlimit-file 16M

ExecStop=/bin/fusermount -uz /mnt/remote/media

#ExecStartPost=/usr/bin/rclone rc vfs/refresh recursive=true --rc-addr 127.0.0.1:5572 _async=true --timeout=10m

Restart=on-failure

User=plex

Group=plex

[Install]

WantedBy=multi-user.target

[dropbox]

type = dropbox

token = {"access_token":"REDACTED","token_type":"bearer","refresh_token":"REDACTED","expiry":"2021-04-18T22:43:07.295335765-04:00"}

client_id = REDACTED

client_secret = REDACTED

[media]

type = crypt

remote = dropbox:/User Name/

filename_encryption = standard

directory_name_encryption = true

password = REDACTED

password2 = REDACTED

-vv flag

2021/05/23 18:52:25 INFO : Media/Television/Married at First Sight - Unmatchables (2021)/Season 01/S01E06 - Episode 6 [WEBDL-720p] [h264 AAC 2.0].mkv: vfs cache: downloader: error count now 3: vfs reader: failed to write to cache file: unexpected end of JSON input

2021/05/23 18:52:25 ERROR : Media/Television/Married at First Sight - Unmatchables (2021)/Season 01/S01E06 - Episode 6 [WEBDL-720p] [h264 AAC 2.0].mkv: vfs cache: failed to download: vfs reader: failed to write to cache file: unexpected end of JSON input

Link to full log with debug on: media.log.txt - Google Drive

That one however doesn't have the Too many files open error.

File Limits on system are below:

root@pepe:/opt/rclone# cat /proc/sys/fs/file-max

9223372036854775807

root@pepe:/opt/rclone# ulimit -n

1024000

ncw

May 24, 2021, 2:10pm

2

I think that is caused by dropbox returning an unexpected response - I'm not sure why though...

That sounds as though there is a file descriptor leak or maybe there were a huge number of files open?

When it next happens can you get a goroutine dump from the --rc and post that? That should give a hint.

If you could also run lsof -c rclone and post the output of that, that would be super helpful - thanks.

HeadStew

May 24, 2021, 5:14pm

3

HeadStew

May 25, 2021, 4:16am

4

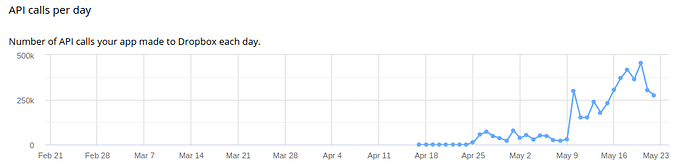

Also something interesting, not sure if related but my API calls took a jump recently:

Nothing really has changed in usage on my end to prompt that.

ncw

May 25, 2021, 10:46am

5

Yes there is definitely a socket leak going on there!

1010 @ 0x43b205 0x44c477 0x78b247 0x4725c1

# 0x78b246 net/http.(*persistConn).readLoop+0x966 net/http/transport.go:2203

1010 @ 0x43b205 0x44c477 0x78c657 0x4725c1

# 0x78c656 net/http.(*persistConn).writeLoop+0xf6 net/http/transport.go:2382```

So there are 1010 http connections open...

The lsof agrees.

This is most likely caused by something forgetting to close the HTTP connection.

This could be rclone or it could be the dropbox SDK (it wouldn't be the first bug I've found in the dropbox SDK)!

I suspect the dropbox SDK because I haven't had reports of this with any other backends

To make progress on this, I really need a way of replicating the problem.

If you could watch rclone with lsof and see what operations cause the number of protocol: TCP to increase that would be great. If you can pin it down to something specific appearing in the log that would be perfect.

Wolveix

June 30, 2021, 5:01pm

6

Hey @ncw , I've been working with @HeadStew on this to try and figure the issue out. It could be a coincidence, but the leak seems to happen when the following line is logged:

commit()

}

}

// Shutdown finishes any pending batches then shuts everything down

//

// Can be called from atexit handler

func (b *batcher) Shutdown() {

b.shutOnce.Do(func() {

atexit.Unregister(b.atexit)

fs.Infof(b.f, "Commiting uploads - please wait...")

// show that batcher is shutting down

close(b.closed)

// quit the commitLoop by sending a quitRequest message

//

// Note that we don't close b.in because that will

// cause write to closed channel in Commit when we are

// exiting due to a signal.

b.in <- quitRequest

b.wg.Wait()

})

I'm not too familiar with what this function does, but I'm currently reading over it to try and get an idea. I'd appreciate any input! We'll continue to try and pin down the issue.

1 Like

ncw

July 1, 2021, 9:12am

7

Thats shuts the batcher down.

It should only be called once when the backend is being shut down, just before rclone exits. Are you seeing it called more than once?

So likely the leaking has happened before then.

1 Like

Wolveix

July 1, 2021, 7:01pm

8

ncw:

Thats shuts the batcher down.

It should only be called once when the backend is being shut down, just before rclone exits. Are you seeing it called more than once?

So likely the leaking has happened before then.

That makes sense. No, it's only being called once. I'll investigate the functions that occur before it and get back to you.

1 Like

system

August 31, 2021, 3:02pm

9

This topic was automatically closed 60 days after the last reply. New replies are no longer allowed.