Google seems to take a harder stance on 'shared' things: a large video file on a shared drive that is downloaded in full a number of times (for analysis, thumbnails, etc.) hits a download quota much faster than the same file on a personal (non-shared) drive does.

That doesn't make a lot of sense to me, because a shared drive would need a higher quota to accommodate the extra users that come with it. I'm the only user on the shared drive, although I do use a few service accounts for uploading (I slightly modified your upload script).

Unfortunately, I did not have vnstat installed for most of the week I was hitting the download quota. Honestly, I suspect Emby and Jellyfin kept rescanning the entire library. I had to run a scan in both at least twice that week, with about a day between the 403 errors.

I used to have buffer-size 64M, but I can't remember whether it was set during the heavy scanning week. Let's assume it was.

Let's do the math:

66,000 x 64M = 4,224,000M = 4.224T x 2 (Jellyfin and Emby) ≈ 8.45T

If it was 16M, the default buffer size:

66,000 x 16M = 1,056,000M = 1.056T x 2 (Jellyfin and Emby) ≈ 2.11T
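Both full-library estimates follow the same formula: files × buffer size × chunks per ffprobe call × number of media servers. Here is a minimal Python sketch of it, assuming every ffprobe call reads whole buffers and using the post's decimal units (1T = 1,000,000M). `scan_tb` is a hypothetical helper, not part of rclone, Emby, or Jellyfin.

```python
def scan_tb(files: int, buffer_mb: int, servers: int = 2, chunks: int = 1) -> float:
    """Decimal terabytes read when `servers` media servers each read
    `chunks` buffers of `buffer_mb` megabytes from every one of `files` files.

    Assumption: one buffer-sized read per chunk, 1T = 1,000,000M.
    """
    megabytes = files * buffer_mb * chunks * servers
    return megabytes / 1_000_000


if __name__ == "__main__":
    # The two full-library cases: a 64M buffer and the 16M default.
    for buffer_mb in (64, 16):
        print(f"66,000 files, {buffer_mb}M buffer: {scan_tb(66_000, buffer_mb):.2f}T")
```

Running it prints 8.45T for the 64M buffer and 2.11T for the 16M default; the `chunks` parameter covers the multi-chunk cases as well.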

And this doesn't even consider ffprobe reading more than one chunk per call. So, assuming all 66,000 files could be scanned in 24 hours, I was either within the 10TB daily limit or over it, depending on how many chunks each call used. However, it takes about 1.5-2 days to scan my library from scratch, so I don't think I did all 66,000 in 24 hours.
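The scan time gives a rough daily file count: spreading 66,000 files over a 1.5-2 day scan yields the average number of files probed per 24 hours. This is a small sketch that assumes the scan rate stays constant for the whole run. `files_per_day` is a hypothetical helper.

```python
LIBRARY_FILES = 66_000  # library size from the post


def files_per_day(total_files: int, scan_days: float) -> int:
    """Average files scanned per 24 hours if a full scan takes `scan_days` days.

    Assumes a constant scan rate, which a real media server scan won't have.
    """
    return round(total_files / scan_days)


if __name__ == "__main__":
    for days in (1.5, 2.0):
        print(f"{days} days per scan -> ~{files_per_day(LIBRARY_FILES, days):,} files/day")
```

The 2-day end of the range works out to 33,000 files per day and the 1.5-day end to 44,000.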

Let's assume 33,000 files instead, but with 2 chunks per ffprobe call (64M buffer):

33,000 x 64M = 2,112,000M = 2.112T x 2 (Jellyfin and Emby) = 4.224T x 2 chunks ≈ 8.45TB

4 chunks per ffprobe:

33,000 x 64M = 2,112,000M = 2.112T x 2 (Jellyfin and Emby) = 4.224T x 4 chunks ≈ 16.90TB

Now the same 33,000 files with the default 16M buffer and 2 chunks per ffprobe call:

33,000 x 16M = 528,000M = 0.528T x 2 (Jellyfin and Emby) = 1.056T x 2 chunks ≈ 2.11TB

4 chunks per ffprobe:

33,000 x 16M = 528,000M = 0.528T x 2 (Jellyfin and Emby) = 1.056T x 4 chunks ≈ 4.22TB
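The six cases can be lined up against the 10TB daily figure in one place. This is a minimal sketch that assumes each ffprobe call costs exactly `chunks` full buffers per file and that the limit is a flat 10TB per day. `scan_tb`, `SCENARIOS`, and `DAILY_LIMIT_TB` are hypothetical names.

```python
DAILY_LIMIT_TB = 10  # the 10TB daily download limit mentioned above


def scan_tb(files: int, buffer_mb: int, servers: int = 2, chunks: int = 1) -> float:
    """Decimal terabytes read when `servers` media servers each read
    `chunks` buffers of `buffer_mb` megabytes from every file."""
    return files * buffer_mb * chunks * servers / 1_000_000


# (files scanned in the day, buffer size in M, chunks per ffprobe call)
SCENARIOS = [
    (66_000, 64, 1),
    (66_000, 16, 1),
    (33_000, 64, 2),
    (33_000, 64, 4),
    (33_000, 16, 2),
    (33_000, 16, 4),
]

# Scenarios whose estimated daily download exceeds the limit.
over_limit = [s for s in SCENARIOS if scan_tb(s[0], s[1], chunks=s[2]) > DAILY_LIMIT_TB]

if __name__ == "__main__":
    for files, buffer_mb, chunks in SCENARIOS:
        tb = scan_tb(files, buffer_mb, chunks=chunks)
        verdict = "OVER" if tb > DAILY_LIMIT_TB else "under"
        print(f"{files:>6,} files, {buffer_mb}M x {chunks}: {tb:6.2f}T ({verdict})")
```

Computed without intermediate rounding, only the 64M buffer with 4 chunks per call (about 16.9TB) ends up over the limit.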

So either I went over (only in the worst case, a 64M buffer with 4 chunks per call), or I was fine.

It's been 4 days without any API errors. If it happens again, I'll turn on debug mode.

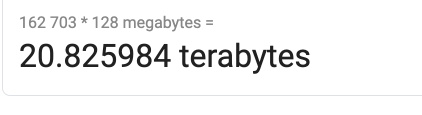

Right now I have a 128M read chunk size with no limit, and buffer-size 0. I have a 4-hour scheduled task window. I decided to try my luck and turn on chapter and thumbnail extraction (it's been on for 24 hours, and so far it's fine). I delay uploading local files for 8 hours, which gives Plex plenty of time to process them while they are still local. I've stopped using Emby and Jellyfin. Maybe one day I'll go back to Jellyfin and run into this again, but now I know to turn the read chunk size down to 8M and turn off the buffer.
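Why a smaller read chunk helps can be illustrated with the chunk growth rclone documents for `--vfs-read-chunk-size` and `--vfs-read-chunk-size-limit`: the chunk doubles after each chunk read until the limit is reached, and "off" means no limit. This is a rough sketch under loud assumptions: the probe reads sequentially from the start of the file, seeks and `--buffer-size` read-ahead are ignored, and Drive is assumed to count every requested range in full against the quota, which the post does not establish. `requested_bytes` and the probe and file sizes are hypothetical illustration values.

```python
from typing import Optional

MiB = 1024 * 1024  # rclone size suffixes are binary, so "8M" means 8 MiB


def requested_bytes(
    bytes_read: int, file_size: int, chunk_size: int, limit: Optional[int] = None
) -> int:
    """Total size of the ranged requests needed to cover a sequential read of
    `bytes_read` bytes from the start of a file.

    The chunk doubles after each chunk read, capped at `limit`; `limit=None`
    stands for "off" (unlimited doubling). Seeks and read-ahead buffering
    are deliberately not modelled.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    requested = 0
    chunk = chunk_size
    while requested < bytes_read:
        requested += chunk
        if limit is None:
            chunk *= 2
        elif limit > chunk:
            chunk = min(chunk * 2, limit)
    # Requests never extend past the end of the file.
    return min(requested, file_size)


if __name__ == "__main__":
    probe = 20 * MiB        # hypothetical: bytes a single probe actually reads
    video = 4 * 1024 * MiB  # hypothetical: a 4 GiB video file
    for chunk_mib in (128, 8):
        total = requested_bytes(probe, video, chunk_mib * MiB)
        print(f"{chunk_mib}M read chunk, no limit: {total // MiB} MiB requested")
```

For a 20 MiB probe, a 128M first chunk requests 128 MiB, while an 8M chunk stops at 24 MiB (8 + 16). Under the stated assumption, that difference is multiplied across every file in a library scan.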