Oh, but I've been using it for ages with no problems so far.

Well, here are the details:

```
[Scripts]
type = crypt
remote = Google:Scripts
filename_encryption = standard
directory_name_encryption = true
password = xxxxx
```

And here is the output of `rclone copy -vv Scripts: /home/plex/scripts/`:

```
rclone copy -vv Scripts: /home/plex/scripts/
2021/04/10 21:17:58 DEBUG : Using config file from "/home/plex/.config/rclone/rclone.conf"
2021/04/10 21:17:58 DEBUG : rclone: Version "v1.55.0" starting with parameters ["rclone" "copy" "-vv" "Scripts:" "/home/plex/scripts/"]
2021/04/10 21:17:58 DEBUG : Creating backend with remote "Scripts:"
2021/04/10 21:17:58 DEBUG : Creating backend with remote "Google:Scripts"
2021/04/10 21:17:58 DEBUG : Google drive root 'Scripts': root_folder_id = "XXXXXXXX" - save this in the config to speed up startup
2021/04/10 21:17:59 DEBUG : Creating backend with remote "/home/plex/scripts/"
```

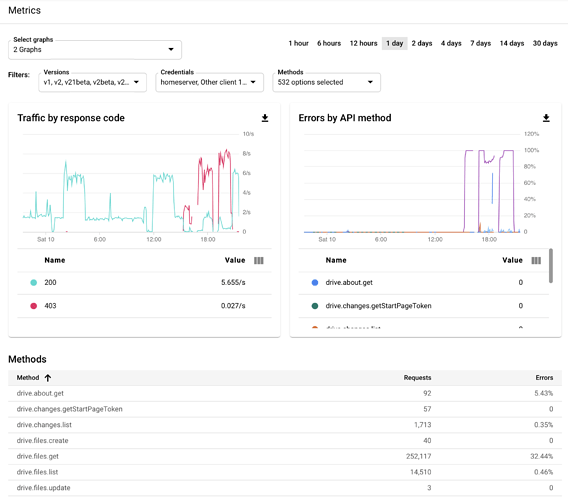

```
2021/04/10 21:18:02 DEBUG : pacer: low level retry 1/10 (error googleapi: Error 403: User Rate Limit Exceeded. Rate of requests for user exceed configured project quota. You may consider re-evaluating expected per-user traffic to the API and adjust project quota limits accordingly. You may monitor aggregate quota usage and adjust limits in the API Console: https://console.developers.google.com/apis/api/drive.googleapis.com/quotas?project=XXXXXXX, userRateLimitExceeded)
2021/04/10 21:18:02 DEBUG : pacer: Rate limited, increasing sleep to 1.239365217s
2021/04/10 21:18:02 DEBUG : pacer: low level retry 1/10 (error googleapi: Error 403: User Rate Limit Exceeded. Rate of requests for user exceed configured project quota. You may consider re-evaluating expected per-user traffic to the API and adjust project quota limits accordingly. You may monitor aggregate quota usage and adjust limits in the API Console: https://console.developers.google.com/apis/api/drive.googleapis.com/quotas?project=XXXXXXXX, userRateLimitExceeded)
2021/04/10 21:18:02 DEBUG : pacer: Rate limited, increasing sleep to 2.793718262s
2021/04/10 21:18:02 DEBUG : pacer: Reducing sleep to 0s
2021/04/10 21:18:02 DEBUG : pacer: low level retry 1/10 (error googleapi: Error 403: User Rate Limit Exceeded. Rate of requests for user exceed configured project quota. You may consider re-evaluating expected per-user traffic to the API and adjust project quota limits accordingly. You may monitor aggregate quota usage and adjust limits in the API Console: https://console.developers.google.com/apis/api/drive.googleapis.com/quotas?project=XXXXXXXX, userRateLimitExceeded)
2021/04/10 21:18:02 DEBUG : pacer: Rate limited, increasing sleep to 1.71281199s
2021/04/10 21:18:02 DEBUG : pacer: Reducing sleep to 0s
2021/04/10 21:18:02 DEBUG : pacer: low level retry 2/10 (error googleapi: Error 403: User Rate Limit Exceeded. Rate of requests for user exceed configured project quota. You may consider re-evaluating expected per-user traffic to the API and adjust project quota limits accordingly. You may monitor aggregate quota usage and adjust limits in the API Console: https://console.developers.google.com/apis/api/drive.googleapis.com/quotas?project=XXXXXXXX, userRateLimitExceeded)
2021/04/10 21:18:02 DEBUG : pacer: Rate limited, increasing sleep to 1.62468263s
2021/04/10 21:18:03 DEBUG : pacer: Reducing sleep to 0s
2021/04/10 21:18:03 INFO : exclude-file.txt: Copied (new)
2021/04/10 21:18:03 INFO : .plexignore: Copied (new)
2021/04/10 21:18:04 INFO : mount.sh: Copied (new)
2021/04/10 21:18:04 INFO : kill_stream.py: Copied (new)
2021/04/10 21:18:04 INFO : demount.sh: Copied (new)
2021/04/10 21:18:04 INFO : gdrive2synology.sh: Copied (new)
2021/04/10 21:18:04 DEBUG : pacer: low level retry 1/10 (error googleapi: Error 403: User Rate Limit Exceeded. Rate of requests for user exceed configured project quota. You may consider re-evaluating expected per-user traffic to the API and adjust project quota limits accordingly. You may monitor aggregate quota usage and adjust limits in the API Console: https://console.developers.google.com/apis/api/drive.googleapis.com/quotas?project=XXXXXXX, userRateLimitExceeded)
2021/04/10 21:18:04 DEBUG : pacer: Rate limited, increasing sleep to 1.028830292s
2021/04/10 21:18:04 DEBUG : pacer: low level retry 2/10 (error googleapi: Error 403: User Rate Limit Exceeded. Rate of requests for user exceed configured project quota. You may consider re-evaluating expected per-user traffic to the API and adjust project quota limits accordingly. You may monitor aggregate quota usage and adjust limits in the API Console: https://console.developers.google.com/apis/api/drive.googleapis.com/quotas?project=XXXXXXXXXX, userRateLimitExceeded)
2021/04/10 21:18:04 DEBUG : pacer: Rate limited, increasing sleep to 2.422275949s
2021/04/10 21:18:05 DEBUG : pacer: Reducing sleep to 0s
2021/04/10 21:18:05 INFO : rclone-sync.sh: Copied (new)
2021/04/10 21:18:05 INFO : plexrestore.sh: Copied (new)
2021/04/10 21:18:05 INFO : pihole.sh: Copied (new)
2021/04/10 21:18:05 INFO : purge-old.kernels-2.sh: Copied (new)
```