chchia

July 30, 2020, 9:09am

What is the problem you are having with rclone?

I'm not sure if this is a bug (I doubt it), but I'm asking for help with how to server-side copy files under the conditions below.

gDriveShared is a Google Drive remote with a shared link.

I can see the encrypted content of the shared folder in gDriveShared with the following command:

Now I would like to copy the content of gDriveShared: --drive-shared-with-me to the folder gDriveShared:Crypted.

I'm wondering whether this is possible, for example:

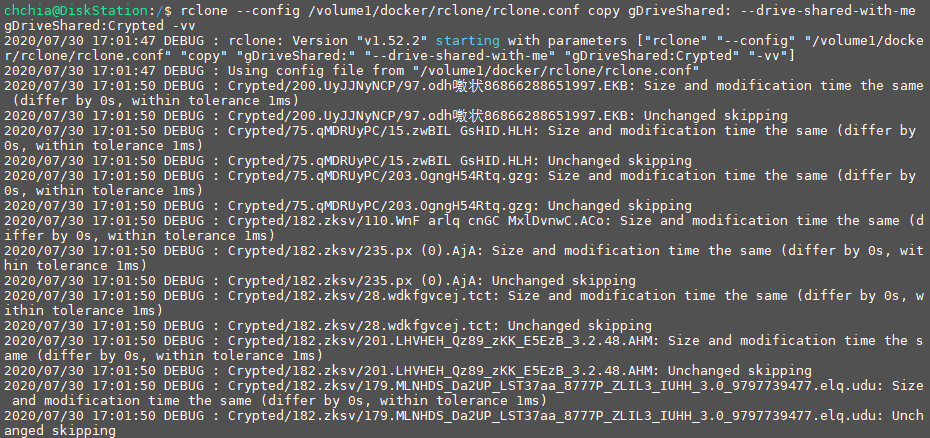

rclone copy gDriveShared: --drive-shared-with-me gDriveShared:Crypted -vv

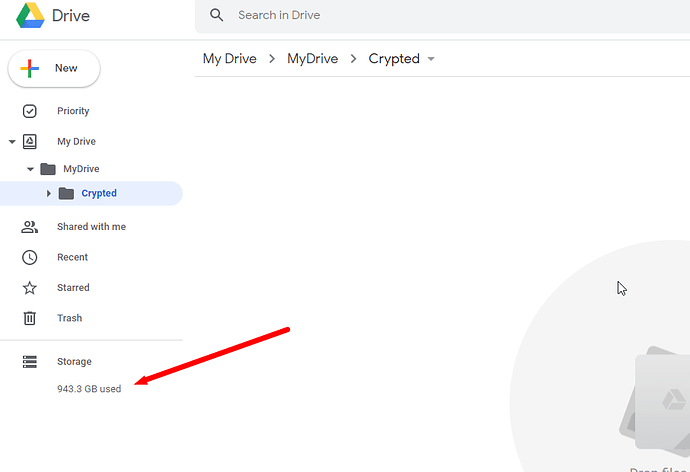

It reports either that the files are already there or that they were copied OK. However, the Google Drive website shows the folder as empty, yet I have used over 900 GB. Where did the files go? I'm pulling out all my hair...

What is your rclone version (output from rclone version)

latest stable 1.52.2

Which OS you are using and how many bits (eg Windows 7, 64 bit)

Synology Linux

Which cloud storage system are you using? (eg Google Drive)

Google Drive

Please let me know if there are more log files I can provide.

chchia

July 30, 2020, 9:58am

I think I found where the files went: they went into the shared folder...

So when copying files, does the --drive-shared-with-me flag actually apply to both the source and the destination remote?

Can I make --drive-shared-with-me apply to the source folder only?

You'd make another remote if you want to keep the shared-with-me content separate.
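A minimal sketch of such a second remote, written to a scratch config file so it can be inspected before merging it into the real rclone.conf (`rclone config file` prints where that file lives). The remote name `gDriveSharedWithMe`, the `CONF_FILE` default and the token placeholder are hypothetical; the assumption is that you copy the `token = ...` line (plus any `client_id`, `client_secret` or `scope` lines) from your existing `[gDriveShared]` section. Only this new remote sets `shared_with_me = true`, the config-file form of `--drive-shared-with-me`, so `gDriveShared:` itself keeps pointing at your own files.

```shell
#!/bin/sh
# Hypothetical scratch file; rclone itself reads the path printed by `rclone config file`.
CONF_FILE="${CONF_FILE:-./rclone-extra.conf}"

# Append a second Google Drive remote that only sees "Shared with me" items.
# PASTE_TOKEN_FROM_gDriveShared is a placeholder: replace it with the token
# value from the existing [gDriveShared] section before using this remote.
cat >> "$CONF_FILE" <<'EOF'

[gDriveSharedWithMe]
type = drive
token = PASTE_TOKEN_FROM_gDriveShared
shared_with_me = true
EOF

echo "appended [gDriveSharedWithMe] to $CONF_FILE"
```

With that section in place (and a real token), the shared-with-me remote becomes the source and the flag disappears from the command line, e.g. `rclone copy gDriveSharedWithMe: gDriveShared:Crypted -vv`.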

chchia

July 30, 2020, 2:02pm

Does making another remote mean the copy will no longer be server-side?

If you want it to be server-side, you'd just add:

server_side_across_configs = true

in your rclone.conf
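For reference, a sketch of where the option sits inside each remote's section, again written to a hypothetical scratch file rather than the live config. The remote names follow this thread (`gDriveSharedWithMe` being the assumed second remote), the token values are placeholders, and the option is added to both sections, as suggested in this thread. A command can be pointed at a file like this for testing with rclone's `--config` flag.

```shell
#!/bin/sh
# Hypothetical scratch config holding both remotes; tokens are placeholders.
CONF_FILE="${CONF_FILE:-./rclone-sscopy.conf}"

# server_side_across_configs = true lets rclone attempt a server-side copy
# between two different drive remotes instead of downloading and re-uploading.
cat > "$CONF_FILE" <<'EOF'
[gDriveShared]
type = drive
token = PASTE_YOUR_TOKEN
server_side_across_configs = true

[gDriveSharedWithMe]
type = drive
token = PASTE_YOUR_TOKEN
shared_with_me = true
server_side_across_configs = true
EOF

# Count the sections that now carry the option (expected: 2).
grep -c '^server_side_across_configs = true$' "$CONF_FILE"
```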

chchia

July 30, 2020, 3:11pm

If you don't mind, could you show me the command for using server_side_across_configs = true?

Thanks

You can add what I shared to each remote in your rclone.conf, which applies it to all commands, or you can use the flag

--drive-server-side-across-configs

Just put that at the end of your copy or sync command.
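Put together, the flag-based version of the copy from the first post might look like the sketch below. It assumes the hypothetical `gDriveSharedWithMe` remote (with `shared_with_me = true`) from the separate-remote suggestion earlier in the thread, adds rclone's `--dry-run` so the first run only reports what would be copied, and falls back to printing the command when rclone isn't on the PATH.

```shell
#!/bin/sh
# Source: the hypothetical shared-with-me remote; destination: the Crypted folder.
SRC="gDriveSharedWithMe:"
DST="gDriveShared:Crypted"

# Build the argument list once; rclone accepts flags after the paths.
set -- copy "$SRC" "$DST" -vv --drive-server-side-across-configs

if command -v rclone >/dev/null 2>&1; then
  # --dry-run: report what would be transferred without copying anything.
  rclone "$@" --dry-run
else
  echo "rclone not found; would run: rclone $*"
fi
```

Once the dry run lists the expected files, the same command without `--dry-run` does the real copy.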

chchia

July 31, 2020, 2:24am

Thanks, it works!

Somehow I'm getting a lot of "User rate limit exceeded" errors; I wasn't aware that rate limits also apply to server-side copies.

You can only upload 750 GB per day, regardless of how you do it.
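As a rough worked example, assuming the data to copy is about 900 GB (the figure mentioned in the first post) and the 750 GB daily quota stated here, an integer ceiling division gives the minimum number of days' worth of quota the copy needs. Treat it as a lower bound, not a schedule.

```shell
#!/bin/sh
# Figures taken from this thread; adjust to your own data size.
TOTAL_GB=900          # roughly the amount mentioned in the first post
DAILY_QUOTA_GB=750    # daily upload limit stated in this reply

# Integer ceiling division: (a + b - 1) / b rounds up instead of down.
days=$(( (TOTAL_GB + DAILY_QUOTA_GB - 1) / DAILY_QUOTA_GB ))
echo "needs at least $days days of upload quota"
```

So even as a server-side copy, roughly 900 GB cannot fit in a single day's quota, which is consistent with the rate-limit errors reported above.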

system

September 29, 2020, 10:36pm

This topic was automatically closed 60 days after the last reply. New replies are no longer allowed.