What is the problem you are having with rclone?

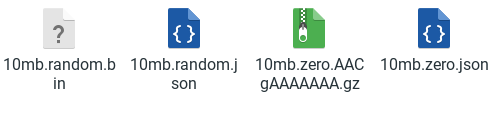

When I create a compress remote and, during config, point it at an existing remote to wrap, every subsequent copy or sync to that compress remote creates a .bin file and a .json file for each source file, rather than a gzip-compressed .gz file as described in the documentation. The .bin file is exactly the same size as the original instead of smaller, which leads me to believe that compression is not working.

For example, "largefile.pdf" (500 KB), when copied to the compress remote, becomes "largefile.pdf.bin", which is still 500 KB, plus a much smaller "largefile.pdf.json".
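Because the .bin file is the same size as the input, one thing worth ruling out is whether gzip can shrink this particular file at all, since PDFs often contain internally compressed streams. The sketch below is a quick check outside rclone, written in POSIX shell: it gzips the file to stdout without modifying it and reports the sizes. The function name `gzip_ratio` is a hypothetical helper, not an rclone command, and the result is only a data point; it does not by itself show how the compress backend decides what to store.

```shell
#!/bin/sh
# gzip_ratio is a hypothetical helper for this check, not an rclone command.
# It gzips a file to stdout (the file itself is left untouched) and compares sizes.
gzip_ratio() {
    f=$1
    # Original size in bytes; tr strips the padding some wc implementations add.
    orig=$(wc -c < "$f" | tr -d ' ')
    if [ "$orig" -eq 0 ]; then
        echo "$f is empty" >&2
        return 1
    fi
    # Size of the gzip stream at gzip's default compression level.
    comp=$(gzip -c < "$f" | wc -c | tr -d ' ')
    # Integer percentage of the original size that the gzip output occupies.
    echo "$f original=$orig gzip=$comp percent=$((comp * 100 / orig))"
}

# Run it on the file from this report, if it exists.
if [ -f "$HOME/Documents/largefile.pdf" ]; then
    gzip_ratio "$HOME/Documents/largefile.pdf"
fi
```

A result near 100% means gzip gains essentially nothing on this file; a much lower percentage would make the uncompressed, same-size .bin output harder to explain.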

Run the command 'rclone version' and share the full output of the command.

```
rclone v1.62.2
- os/version: arch 21.0.1 (64 bit)
- os/kernel: 5.4.195-1-MANJARO (x86_64)
- os/type: linux
- os/arch: amd64
- go/version: go1.20.4
- go/linking: dynamic
- go/tags: none
```

Which cloud storage system are you using? (eg Google Drive)

For this test, an alias remote pointing at a directory on my local filesystem.

The command you were trying to run (eg rclone copy /tmp remote:tmp)

```
rclone copy ~/Documents/largefile.pdf compressedremote1:
```

The rclone config contents with secrets removed.

```
[localremote1]
type = alias
remote = /home/manjaro/LRemote

[compressedremote1]
type = compress
remote = localremote1:
```

A log from the command with the -vv flag

```
2023/06/21 15:12:47 DEBUG : rclone: Version "v1.62.2" starting with parameters ["rclone" "copy" "-vv" "/home/manjaro/Documents/largefile.pdf" "compressedremote1:"]
2023/06/21 15:12:47 DEBUG : Creating backend with remote "/home/manjaro/Documents/largefile.pdf"
2023/06/21 15:12:47 DEBUG : Using config file from "/home/manjaro/.config/rclone/rclone.conf"
2023/06/21 15:12:47 DEBUG : fs cache: adding new entry for parent of "/home/manjaro/Documents/largefile.pdf", "/home/manjaro/Documents"
2023/06/21 15:12:47 DEBUG : Creating backend with remote "compressedremote1:"
2023/06/21 15:12:47 DEBUG : Creating backend with remote "/home/manjaro/LRemote/.json"
2023/06/21 15:12:47 DEBUG : Creating backend with remote "/home/manjaro/LRemote"
2023/06/21 15:12:47 DEBUG : largefile.pdf: Need to transfer - File not found at Destination
2023/06/21 15:12:47 DEBUG : largefile.pdf: md5 = 17f06d633743b8574126aceed17d3069 OK
2023/06/21 15:12:47 INFO : largefile.pdf: Copied (new)
2023/06/21 15:12:47 INFO :
Transferred: 370.985 KiB / 370.985 KiB, 100%, 0 B/s, ETA -
Transferred: 1 / 1, 100%
Elapsed time: 0.0s
2023/06/21 15:12:47 DEBUG : 4 go routines active
```