Please be more specific. Where are you downloading from?

And what is the mount command?

I am downloading from IBM Cloud Object Storage to a virtual machine on IBM Cloud.

[root@localhost ~]# cat /root/.config/rclone/rclone.conf

[icos]

type = s3

provider = IBMCOS

env_auth = false

access_key_id = 88zxxxxxxxxxxxxxxxxx

secret_access_key = RvjYIxzzzzzzzzzzzzzzzzzzz

endpoint = s3.tok-ap-geo.objectstorage.service.networklayer.com

location_constraint = ap-flex

acl = private

[root@localhost home]# rclone copy icos:khayama-test2/temp_300GB_file . -vv

2018/09/28 13:50:42 DEBUG : rclone: Version "v1.43-116-g22ac80e8-beta" starting with parameters ["rclone" "copy" "icos:khayama-test2/temp_300GB_file" "." "-vv"]

2018/09/28 13:50:42 DEBUG : Using config file from "/root/.config/rclone/rclone.conf"

2018/09/28 13:50:42 DEBUG : pacer: Reducing sleep to 0s

2018/09/28 13:50:43 DEBUG : temp_300GB_file: Couldn't find file - need to transfer

2018/09/28 13:51:42 INFO :

Transferred: 3.334G / 300 GBytes, 1%, 56.166 MBytes/s, ETA 1h30m8s

Errors: 0

Checks: 0 / 0, -

Transferred: 0 / 1, 0%

Elapsed time: 1m0.7s

Transferring:

temp_300GB_file: 1% /300G, 58.113M/s, 1h27m7s

…

2018/09/28 14:01:42 INFO :

Transferred: 38.756G / 300 GBytes, 13%, 60.059 MBytes/s, ETA 1h14m14s

Errors: 0

Checks: 0 / 0, -

Transferred: 0 / 1, 0%

Elapsed time: 11m0.7s

Transferring:

temp_300GB_file: 12% /300G, 61.036M/s, 1h13m2s

2018/09/28 14:02:42 INFO :

Transferred: 42.320G / 300 GBytes, 14%, 60.123 MBytes/s, ETA 1h13m8s

Errors: 0

Checks: 0 / 0, -

Transferred: 0 / 1, 0%

Elapsed time: 12m0.7s

Transferring:

temp_300GB_file: 14% /300G, 60.976M/s, 1h12m7s

2018/09/28 14:03:42 INFO :

Transferred: 44.000G / 300 GBytes, 15%, 57.706 MBytes/s, ETA 1h15m42s

Errors: 0

Checks: 0 / 0, -

Transferred: 0 / 1, 0%

Elapsed time: 13m0.7s

Transferring:

temp_300GB_file: 14% /300G, 7.885M/s, 9h14m5s

2018/09/28 14:04:42 INFO :

Transferred: 44.000G / 300 GBytes, 15%, 53.588 MBytes/s, ETA 1h21m31s

Errors: 0

Checks: 0 / 0, -

Transferred: 0 / 1, 0%

Elapsed time: 14m0.7s

Transferring:

temp_300GB_file: 14% /300G, 168.026k/s, 443h46m18s

Do you have any idea or do you need more specific information?

2018/09/28 14:23:40 INFO :

Transferred: 44.000G / 300 GBytes, 15%, 44.144 MBytes/s, ETA 1h38m58s

Errors: 0

Checks: 0 / 0, -

Transferred: 0 / 1, 0%

Elapsed time: 17m0.6s

Transferring:

temp_300GB_file: 14% /300G, 3/s, 0s

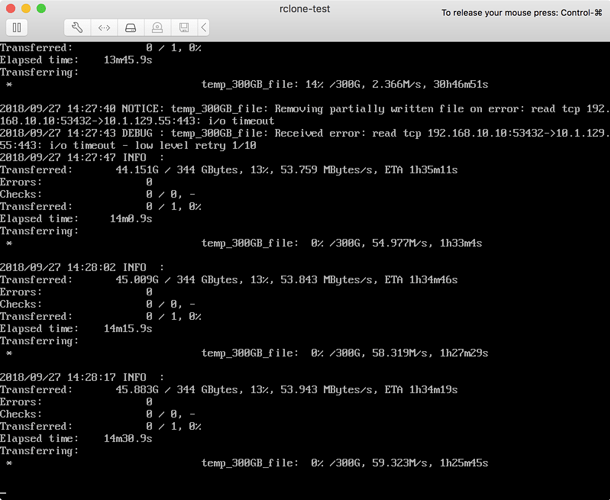

2018/09/28 14:24:18 NOTICE: temp_300GB_file: Removing partially written file on error: read tcp 192.168.10.10:53532->10.1.129.55:443: i/o timeout

2018/09/28 14:24:20 DEBUG : temp_300GB_file: Received error: read tcp 192.168.10.10:53532->10.1.129.55:443: i/o timeout - low level retry 1/10

2018/09/28 14:24:40 INFO :

Transferred: 45.093G / 344 GBytes, 13%, 42.729 MBytes/s, ETA 1h59m23s

Errors: 0

Checks: 0 / 0, -

Transferred: 0 / 1, 0%

Elapsed time: 18m0.6s

Transferring:

temp_300GB_file: 0% /300G, 60.383M/s, 1h24m28s

2018/09/28 14:25:40 INFO :

Transferred: 48.724G / 344 GBytes, 14%, 43.740 MBytes/s, ETA 1h55m12s

Errors: 0

Checks: 0 / 0, -

Transferred: 0 / 1, 0%

Elapsed time: 19m0.6s

Transferring:

temp_300GB_file: 1% /300G, 61.873M/s, 1h21m26s

An I/O timeout means there was a pause in receiving data that lasted longer than the duration set by --timeout.

The default is

--timeout duration IO idle timeout (default 5m0s)

So it means there was a pause for more than 5 minutes. Do you see the bytes downloaded pause in the stats before the timeout?

It looks like it transferred 38GB of the file then had the pause...

You could try increasing --timeout to see if that helps.

(This issue will help once it is implemented: "Resume downloads if the reader fails", rclone/rclone issue #2108 on GitHub.)
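To make the idle-timeout idea concrete, here is a minimal Python sketch of when such a timeout would fire. It is only a model of the behaviour described above, not rclone's actual implementation; the function name `first_idle_timeout` and the made-up arrival times are assumptions for illustration.

```python
from typing import Optional, Sequence


def first_idle_timeout(
    arrival_times: Sequence[float],
    idle_timeout: float,
    observed_until: float,
) -> Optional[float]:
    """Return the time (in seconds) at which an idle timeout would fire, or None.

    arrival_times:  ascending times, in seconds since the start of the
                    transfer, at which some data arrived.
    idle_timeout:   longest gap without any data that is tolerated.
    observed_until: time up to which the connection was watched.
    """
    last = 0.0
    for t in arrival_times:
        # The timer restarts every time data arrives, so only the gap
        # since the previous arrival matters, not the total elapsed time.
        if t - last > idle_timeout:
            return last + idle_timeout
        last = t
    # The stream may also go silent after the last arrival.
    if observed_until - last > idle_timeout:
        return last + idle_timeout
    return None


# Hypothetical transfer: data arrives once per second for 13 minutes,
# then the connection goes silent.
arrivals = [float(s) for s in range(1, 781)]
for timeout in (60.0, 300.0, 3600.0):
    print(timeout, first_idle_timeout(arrivals, timeout, observed_until=5000.0))
```

In this model the timeout fires at 840 s, 1080 s, and 4380 s respectively, so if the connection never recovers, a larger --timeout only delays the error rather than preventing it.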

Not resolved…

rclone copy icos:khayama-test2/temp_300GB_file . -vvv --timeout 60s

2018/09/28 19:06:53 DEBUG : rclone: Version "v1.43-116-g22ac80e8-beta" starting with parameters ["rclone" "copy" "icos:khayama-test2/temp_300GB_file" "." "-vvv" "--timeout" "60s"]

2018/09/28 19:06:53 DEBUG : Using config file from "/root/.config/rclone/rclone.conf"

2018/09/28 19:06:54 DEBUG : pacer: Reducing sleep to 0s

2018/09/28 19:06:55 DEBUG : temp_300GB_file: Couldn't find file - need to transfer

2018/09/28 19:07:54 INFO :

…

2018/09/28 19:18:54 INFO :

Transferred: 41.309G / 300 GBytes, 14%, 58.685 MBytes/s, ETA 1h15m13s

Errors: 0

Checks: 0 / 0, -

Transferred: 0 / 1, 0%

Elapsed time: 12m0.7s

Transferring:

temp_300GB_file: 13% /300G, 60.189M/s, 1h13m21s

2018/09/28 19:19:54 INFO :

Transferred: 44.000G / 300 GBytes, 15%, 57.705 MBytes/s, ETA 1h15m42s

Errors: 0

Checks: 0 / 0, -

Transferred: 0 / 1, 0%

Elapsed time: 13m0.7s

Transferring:

temp_300GB_file: 14% /300G, 25.885M/s, 2h48m47s

2018/09/28 19:20:41 NOTICE: temp_300GB_file: Removing partially written file on error: read tcp 192.168.10.10:53550->10.1.129.55:443: i/o timeout

2018/09/28 19:20:43 DEBUG : temp_300GB_file: Received error: read tcp 192.168.10.10:53550->10.1.129.55:443: i/o timeout - low level retry 1/10

2018/09/28 19:20:54 INFO :

Transferred: 44.596G / 344 GBytes, 13%, 54.313 MBytes/s, ETA 1h34m4s

Errors: 0

Checks: 0 / 0, -

Transferred: 0 / 1, 0%

Elapsed time: 14m0.7s

Transferring:

temp_300GB_file: 0% /300G, 59.997M/s, 1h25m10s

2018/09/28 19:21:54 INFO :

Transferred: 48.154G / 344 GBytes, 14%, 54.740 MBytes/s, ETA 1h32m14s

Errors: 0

Checks: 0 / 0, -

Transferred: 0 / 1, 0%

Elapsed time: 15m0.7s

Transferring:

temp_300GB_file: 1% /300G, 60.715M/s, 1h23m9s

Try setting the timeout much bigger than the default, say --timeout 1h

Let me check with 1h. I’ll be back later.

No change…

rclone copy icos:khayama-test2/temp_300GB_file . -vvv --timeout 1h

2018/09/28 19:37:51 DEBUG : rclone: Version "v1.43-116-g22ac80e8-beta" starting with parameters ["rclone" "copy" "icos:khayama-test2/temp_300GB_file" "." "-vvv" "--timeout" "1h"]

2018/09/28 19:37:51 DEBUG : Using config file from "/root/.config/rclone/rclone.conf"

2018/09/28 19:37:52 DEBUG : pacer: Reducing sleep to 0s

2018/09/28 19:37:53 DEBUG : temp_300GB_file: Couldn't find file - need to transfer

2018/09/28 19:38:52 INFO :

Transferred: 3.414G / 300 GBytes, 1%, 57.610 MBytes/s, ETA 1h27m51s

Errors: 0

Checks: 0 / 0, -

Transferred: 0 / 1, 0%

Elapsed time: 1m0.6s

Transferring:

temp_300GB_file: 1% /300G, 59.684M/s, 1h24m48s

…

2018/09/28 20:49:52 INFO :

Transferred: 44.000G / 300 GBytes, 15%, 10.428 MBytes/s, ETA 6h58m58s

Errors: 0

Checks: 0 / 0, -

Transferred: 0 / 1, 0%

Elapsed time: 1h12m0.6s

Transferring:

temp_300GB_file: 14% /300G, 0/s, 0s

2018/09/28 20:50:22 NOTICE: temp_300GB_file: Removing partially written file on error: read tcp 192.168.10.10:53558->10.1.129.55:443: i/o timeout

2018/09/28 20:50:24 DEBUG : temp_300GB_file: Received error: read tcp 192.168.10.10:53558->10.1.129.55:443: i/o timeout - low level retry 1/10

2018/09/28 20:50:52 INFO :

Transferred: 45.602G / 344 GBytes, 13%, 10.660 MBytes/s, ETA 7h57m45s

Errors: 0

Checks: 0 / 0, -

Transferred: 0 / 1, 0%

Elapsed time: 1h13m0.6s

Transferring:

temp_300GB_file: 0% /300G, 60.260M/s, 1h24m30s

…

What should I try next? Is this a bug?

I would say something is going on at the network layer.

My hunch is that this isn't an rclone problem but a problem lower in the network stack (e.g. Ethernet drivers).

Can you try the download on a different machine on the same network?

Maybe you could use Wireshark to get some idea of what is happening?

I’m sorry to say that this is already the second machine I have tried.

The same behavior can be observed in another IBM Cloud account's environment.

So I think it’s caused by rclone.

My download with s3fs completed successfully…

Maybe it is an IBM Cloud restriction on large files.

It sounds like it is some interaction between rclone and the IBM cloud environment then.

When you use s3fs do you see it do a retry, or does it download everything in one go?

I used s3cmd with the --continue option.

Without the --continue option, it failed.

Does rclone have an equivalent option?

Ah, OK. So it sounds like s3cmd is failing the same way but retrying.

The issue "Resume downloads if the reader fails" (rclone/rclone issue #2108 on GitHub) is about adding a similar feature to rclone, but it isn't implemented yet.
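For anyone wondering what "resuming" means at the HTTP level, here is a rough Python sketch. It is not how rclone or s3cmd implement it, and it assumes a plain, unauthenticated HTTP endpoint (a real S3 or IBM COS request would also need to be signed). The helper names and the URL passed in are hypothetical.

```python
import os
import urllib.request


def build_resume_request(url: str, partial_path: str) -> urllib.request.Request:
    """Build a GET request that continues after the bytes already on disk."""
    already = os.path.getsize(partial_path) if os.path.exists(partial_path) else 0
    req = urllib.request.Request(url)
    if already > 0:
        # Ask the server for everything from byte offset `already` onwards.
        req.add_header("Range", f"bytes={already}-")
    return req


def resume_download(url: str, partial_path: str, chunk_size: int = 1 << 20) -> None:
    """Append the missing tail of `url` to `partial_path` (illustrative only)."""
    req = build_resume_request(url, partial_path)
    # If the local file is already complete, the server is expected to
    # answer 416 and urlopen raises HTTPError; that case is not handled here.
    with urllib.request.urlopen(req, timeout=60) as resp:
        # 206 means the server honoured the Range header, so append.
        # 200 means it is sending the whole object again, so start over.
        mode = "ab" if resp.status == 206 else "wb"
        with open(partial_path, mode) as out:
            while True:
                chunk = resp.read(chunk_size)
                if not chunk:
                    break
                out.write(chunk)
```

The key point is that the partially written file is kept and only the missing tail is requested, whereas the logs above show the partial file being removed and the transfer starting again from 0%.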

Okay, I’m looking forward to your implementation.