I’m a bit of an S3 newbie, so this may well be user error, but is it possible for simple `rclone lsf` commands on an Amazon S3 bucket to generate enough API calls to make my AWS bill spike significantly?

I have about 11 TB of personal photos and videos in an S3 bucket (Glacier Deep Archive storage class). I was using rclone to generate a CSV listing of all the files in that bucket, with their folder paths, so that I could import the listing into a media cataloguing system on my Mac.

There are 230,000 files in the S3 bucket, and I was using the following rclone command to dump the file list:

`rclone lsf -R --csv --absolute --format ps --files-only --fast-list --exclude .DS_Store mys3remote:/photolibrary.bucket`
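Because the listing was being driven from a Python script, here is a minimal sketch of how that wrapper might look. It assumes Python 3.7+ and that `rclone` is on the PATH. The names `RCLONE_ARGS`, `parse_listing` and `list_bucket` are hypothetical; the flags and the remote path `mys3remote:/photolibrary.bucket` are exactly the ones from the command above, and `--format ps` should yield one `path,size` CSV row per file.

```python
import csv
import io
import subprocess

# Same flags as the rclone command above (hypothetical constant name).
RCLONE_ARGS = [
    "rclone", "lsf", "-R", "--csv", "--absolute",
    "--format", "ps", "--files-only", "--fast-list",
    "--exclude", ".DS_Store",
    "mys3remote:/photolibrary.bucket",
]


def parse_listing(csv_text):
    """Parse `rclone lsf --csv --format ps` output into (path, size) tuples."""
    rows = []
    for row in csv.reader(io.StringIO(csv_text)):
        if not row:
            continue  # skip blank lines
        path, size = row  # "ps" means path first, then size in bytes
        rows.append((path, int(size)))
    return rows


def list_bucket(args=RCLONE_ARGS):
    """Run rclone once and return the parsed listing; raises if rclone fails."""
    result = subprocess.run(args, capture_output=True, text=True, check=True)
    return parse_listing(result.stdout)
```

One design choice worth considering in a script like this is to save the CSV output once and parse the saved copy while developing, rather than re-listing the bucket on every test run.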

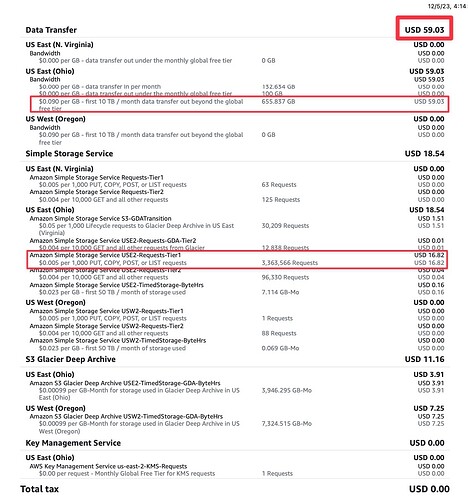

To my shock, at the end of the month my AWS bill showed nearly $60 for “data transfer out”, even though I did not actually download any files from the bucket. I checked my bucket security settings, and all public access to the bucket was disabled.

I was under the impression that `--fast-list` minimizes the number of transactions when performing directory listings. But even without `--fast-list`, is it really possible that a command that only lists files could download over half a terabyte of data? (I did about 5 to 10 test runs of the command while integrating it into a Python script, so the “data transfer out” figure is probably cumulative across all of those runs.)
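A rough estimate of how much listing traffic the command should generate, as a sketch under stated assumptions: S3's ListObjectsV2 returns at most 1,000 keys per page, and with `--fast-list` rclone lists the bucket recursively instead of one directory at a time (without it, expect at least one extra request per directory). The 500 bytes per key figure is a deliberately generous guess, not a measurement.

```python
import math

# Figures from the post.
NUM_OBJECTS = 230_000
TEST_RUNS = 10  # upper end of "about 5 to 10 test runs"

# S3 ListObjectsV2 returns at most 1,000 keys per response page.
KEYS_PER_PAGE = 1_000

# ASSUMPTION: approximate size of one key's entry in the XML listing
# response (key name, size, ETag, timestamp, storage class). Real entries
# vary with key length; this is a guess, not a measured value.
BYTES_PER_KEY = 500

pages_per_run = math.ceil(NUM_OBJECTS / KEYS_PER_PAGE)
total_requests = pages_per_run * TEST_RUNS
total_gb = NUM_OBJECTS * BYTES_PER_KEY * TEST_RUNS / 1e9

print(f"LIST pages per run: {pages_per_run}")
print(f"LIST requests over {TEST_RUNS} runs: {total_requests}")
print(f"Approx. listing data over {TEST_RUNS} runs: {total_gb:.2f} GB")
```

Under these assumptions it works out to 230 pages per run, about 2,300 LIST requests and roughly 1.15 GB across ten runs, which is several hundred times smaller than 655 GB.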

Unfortunately, I forgot to enable logging on the bucket in question, so I can’t tell why I’m being billed for 655 GB of Data Transfer Out when all I was doing was listing the bucket contents with `--fast-list` in rclone.

I contacted AWS support about this, and they just replied with links to their “Understanding data transfer charges” documentation page.

My AWS bill shows a charge of $59.03 for Data Transfer Out and an additional $16.82 for "USE2-Requests-Tier1". I know AWS charges for API requests, but this seems excessive for just pulling down file listings (not the files themselves). That said, I'm willing to be schooled on this if it's entirely my fault!
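Working backwards from the two line items gives a sense of scale. This is a sketch: it assumes "USE2-Requests-Tier1" is billed at the S3 Standard Tier 1 rate (PUT, COPY, POST, LIST) of $0.005 per 1,000 requests in us-east-2, which should be checked against the current S3 pricing page.

```python
# Figures from the AWS bill quoted above.
TRANSFER_OUT_USD = 59.03
TRANSFER_OUT_GB = 655
TIER1_USD = 16.82

# ASSUMPTION: S3 Standard Tier 1 request price (PUT, COPY, POST, LIST) in
# us-east-2 of $0.005 per 1,000 requests. Other storage classes are billed
# at different request rates, so treat the result as order-of-magnitude.
TIER1_USD_PER_1000 = 0.005

usd_per_gb = TRANSFER_OUT_USD / TRANSFER_OUT_GB
implied_requests = TIER1_USD / TIER1_USD_PER_1000 * 1000

print(f"Implied transfer rate: ${usd_per_gb:.3f}/GB")
print(f"Implied Tier 1 requests: {implied_requests:,.0f}")
```

Under that assumption, the $16.82 corresponds to roughly 3.4 million Tier 1 requests, far more than a handful of listings of 230,000 objects at up to 1,000 keys per page should need, and the transfer charge works out to about $0.09 per GB.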

My rclone version:

rclone v1.62.2
- os/version: darwin 14.2 (64 bit)
- os/kernel: 23.2.0 (x86_64)
- os/type: darwin
- os/arch: amd64
- go/version: go1.20.2
- go/linking: dynamic
- go/tags: none