If the files are on the same filesystem, would it be possible to rename them instead of copying them? I’ve also seen some applications (like Sonarr and Radarr) try to hardlink instead of renaming, so supporting that would be great too.

Pinging @remus.bunduc

Is it a FUSE limitation that a cp is executed instead of a mv when moving a file from the local disk to an rclone-mounted path that’s using --cache-tmp-upload-path?

I ask because this creates two copies of the file (taking up disk space), but more importantly, it creates unneeded I/O.

If you’re doing a straight-up mv file.mkv /path/to/rclone/mount, it’d be nice to have it happen instantly like a normal filesystem mv does, instead of what is obviously a cp.

But this may be a limitation of FUSE (or maybe if you’re using crypt, it has to encrypt it as well?)

So you’re asking about the moment when cache uploads the file to the cloud provider (from --temp-path)?

@aj1252 so you’re asking that instead of moving/copying the file from the local disk to the temp path, it should just create a symlink?

rclone has to run on Windows too, and its symlink support there isn’t great. Plus, I’m not even sure rclone supports symlinks. The temp path for cache is really just a local remote.

In a perfect world, I have a local disk

/data

I have my rclone cache_upload in /data/rclone

Normally I’d just hard link /data/torrents/blah.mkv to /data/rclone/blah.mkv (ln /data/torrents/blah.mkv /data/rclone/blah.mkv) so I don’t pay for the disk space twice.
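A minimal sketch of that hard-link setup, assuming Linux with GNU coreutils (`stat -c`). A scratch directory from `mktemp` stands in for /data, and the torrents/ and rclone/ subdirectories just mirror the paths in the post. Both names end up pointing at the same inode, so the data is stored only once.

```shell
#!/bin/sh
set -eu

# Scratch directory standing in for /data (hypothetical layout mirroring the post)
data=$(mktemp -d)
mkdir -p "$data/torrents" "$data/rclone"

# 1 MiB placeholder for blah.mkv
dd if=/dev/zero of="$data/torrents/blah.mkv" bs=1024 count=1024 2>/dev/null

# Hard link: a second directory entry for the same inode; no data is copied
ln "$data/torrents/blah.mkv" "$data/rclone/blah.mkv"

src_inode=$(stat -c %i "$data/torrents/blah.mkv")
dst_inode=$(stat -c %i "$data/rclone/blah.mkv")
links=$(stat -c %h "$data/rclone/blah.mkv")
echo "inodes: $src_inode / $dst_inode, link count: $links"

rm -rf "$data"
```

The same `ln` pointed at a target inside the FUSE mount (e.g. /mnt/rclone) fails with "Invalid cross-device link", because the mount is a different filesystem from the local disk.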

I’m not aware of any way to hard link across filesystems, as it simply wouldn’t work. Rclone would have to handle taking a file from the upload path differently. I used to get around this by using rclone move to ship stuff to my GD, but the upload cache is so simple to set up that I don’t mind a little extra I/O and disk space for a brief period of time.

Not sure how else to explain it, but try this with a test file (e.g., file.mkv):

time mv file.mkv /tmp – you’ll see this happens instantly

vs.

time mv file.mkv /path/to/rclone/mount – this takes time, even if you have --cache-tmp-upload-path set.

I think it’s due to a FUSE limitation. When you “move” a file from the local disk to a fuse mount, even though the file is technically being moved to the path identified in --cache-tmp-upload-path, the OS executes a cp operation, not a mv.

Ergo, this takes up CPU cycles, IO, etc. Unsure if there’s a way around it. I think this is what the OP is referring to (at least his first question).

The mv only happens instantly if you aren’t crossing filesystems, though, unless I’m missing something.

@Stokkes if you use encryption, the file will be encrypted on the fly too and stored encrypted in the temp path. This would be different if cache were the remote being mounted, but alas, I haven’t made any progress testing that. To the best of my knowledge, drive -> cache -> crypt is still the safest way for now.
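A rough way to check whether a mv can be a cheap rename is to compare the device IDs (st_dev) of the source and the destination. This is a sketch assuming GNU `stat`; the helper name `same_fs` is made up for illustration. A mismatch means mv has to copy and delete; a match is necessary for a rename but not a guarantee (bind mounts of the same filesystem, for instance, still refuse a rename across mount points).

```shell
#!/bin/sh
set -eu

# Hypothetical helper: succeeds when both paths report the same device ID.
same_fs() {
    [ "$(stat -c %d "$1")" = "$(stat -c %d "$2")" ]
}

dir=$(mktemp -d)
touch "$dir/file.mkv"
mkdir "$dir/dest"

# Both paths are in the same scratch directory, so they share a filesystem
if same_fs "$dir/file.mkv" "$dir/dest"; then
    result=rename
else
    result=copy
fi
echo "mv into dest: $result"
rm -rf "$dir"
```

Running `same_fs file.mkv /mnt/rclone` against a local file should take the copy branch, since the FUSE mount gets its own device ID even when --cache-tmp-upload-path points at the same disk.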

@Animosity022 I understand what you’re saying but I haven’t seen any command or functionality of rclone touching the outside system (apart from configs and caches).

I’m not saying it’s impossible: I would scrap the entire backlog and reference files on the local drive rather than moving them into a temp FS, but that requires extensive work to build in the failsafe checks.

Isn’t a move enough, though? That’s what I do, at least: mass copy data from local to the mount, wait a bit to be sure it works, delete the source, and let rclone upload it in the background while I’m still able to read from it. You could just as well do a move rather than copy/delete.

I’m trying to weigh the pros and cons. If a mv works and keeps data duplication to a minimum, why add a new layer of complexity? And it would add complexity: with files all over the place, it’s easy to change them somehow and destroy what you expected rclone to eventually back up.

I’m all for simple.

My prior use case was downloading a torrent and seeding it for 2 weeks. I’d use Sonarr/Radarr to hard link a copy on the same filesystem the torrent is on to save disk space.

I’d use unionfs/mergerfs to merge the local disk with my GD. With rclone cache, I just use a cache_upload area instead and have it make a copy, since I can’t hard link. All in all, it’s a little more I/O and some extra disk space for a brief period of time in exchange for more convenience and a simpler setup.

I think you’re over thinking this…

When you execute a mv within a local filesystem, the filesystem just updates the directory entries that point to the file’s inode. The physical bytes on disk aren’t touched and the file keeps its inode, so the mv happens instantly.

When you execute a mv to a FUSE-based filesystem (e.g., an rclone mount), the target is a different filesystem, so what actually happens is a two-step process:

- a copy is made from the source FS to the target FS

- the source file is deleted

Now, if you’re using an rclone cache mount with --cache-tmp-upload-path (forget crypt; I understand it adds a layer, so leave it out of this conversation), the mv goes from the local filesystem to the FUSE filesystem, yet the file still ends up on the same physical disk. It is written to a completely new inode and new data blocks, and when the mv completes, the old inode is removed.

One part of this question is: is there any way to circumvent this, or is it a FUSE or kernel limitation? The file ends up on the same disk anyway (again assuming --cache-tmp-upload-path is set).

Here’s how to test this, assuming --cache-tmp-upload-path is set to /tmp (again, FORGET crypt):

Case 1

time mv file.mkv /tmp

This will happen instantly (assuming /tmp is on the same filesystem as file.mkv) because the FS only updates the directory entry; the physical bytes on disk stay where they are.

Case 2

time mv file.mkv /mnt/rclone

The file still ends up in /tmp because --cache-tmp-upload-path is set to /tmp, but because it goes through the FUSE layer, it takes significantly more time. It also creates more I/O and doubles the disk space used during the mv, since the file is copied and only THEN deleted.
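The difference between the two cases can be reproduced on a single local filesystem by emulating the second one with cp followed by rm. A minimal sketch, assuming GNU coreutils; the file names are arbitrary. The rename keeps the inode, while the copy-and-delete produces a new one.

```shell
#!/bin/sh
set -eu

dir=$(mktemp -d)
echo data > "$dir/a.mkv"
before=$(stat -c %i "$dir/a.mkv")

# Same-filesystem mv: rename(2) rewrites directory entries only,
# so the file keeps its inode and its data blocks are not copied.
mv "$dir/a.mkv" "$dir/b.mkv"
after_mv=$(stat -c %i "$dir/b.mkv")

# Emulated cross-filesystem mv: copy the bytes, then delete the source.
# The copy is a new file, so it gets a new inode.
cp "$dir/b.mkv" "$dir/c.mkv"
after_copy=$(stat -c %i "$dir/c.mkv")
rm "$dir/b.mkv"

echo "mv: $before -> $after_mv, cp+rm: $after_mv -> $after_copy"
rm -rf "$dir"
```

Between the cp and the rm, both copies exist at once, which is where the temporary 2x disk usage comes from.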

Is that clearer?

Some programs (Sonarr and Radarr, for example) use a hard link instead of mv.

Example:

touch somefile1

ls --inode somefile1

ln somefile1 somefile2

rm somefile1

ls --inode somefile2

Which gives the same end result (the same inode under a new name) as doing:

touch somefile1

ls --inode somefile1

mv somefile1 somefile2

ls --inode somefile2

It would be great if rclone could handle these as well as a normal mv, as Stokkes described above.
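The two command sequences above can be turned into a self-checking script. This is a sketch assuming GNU `stat` on Linux; somefile3 and somefile4 are extra arbitrary names so the mv case starts from a fresh file. In both cases the surviving name points at the original inode, so no data is copied.

```shell
#!/bin/sh
set -eu

dir=$(mktemp -d)
cd "$dir"

# Sequence 1: hard link, then remove the original name
touch somefile1
link_before=$(stat -c %i somefile1)
ln somefile1 somefile2
rm somefile1
link_after=$(stat -c %i somefile2)

# Sequence 2: plain rename
touch somefile3
mv_before=$(stat -c %i somefile3)
mv somefile3 somefile4
mv_after=$(stat -c %i somefile4)

echo "ln + rm: $link_before -> $link_after"
echo "mv:      $mv_before -> $mv_after"

cd /
rm -rf "$dir"
```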

I expect this behaves exactly the same as mounting a “local” remote, and isn’t related to cache or the tmp upload path. In fact, I expect that if I created a virtual disk and mounted it on my Mac, it would do the same thing.

If my assumption holds, it would answer the question: yes, it may be limited by FUSE.

But I can’t test it right now; I’m swamped (work and personal). I would leave cache out of this benchmark completely since, again, it does nothing special apart from a local mount that gets created in the background.

I’ll give you an example which might show the issue better:

rclone mount GoogleCached: /media/Cloud --cache-tmp-upload-path="/media/Disk1/Rclone/Upload"

mv "/media/Disk1/qBittorrent/Finished/Movies1080/Mollys.Game…mkv" "/media/Cloud/MoviesRemux1080/Molly's Game (2017)"

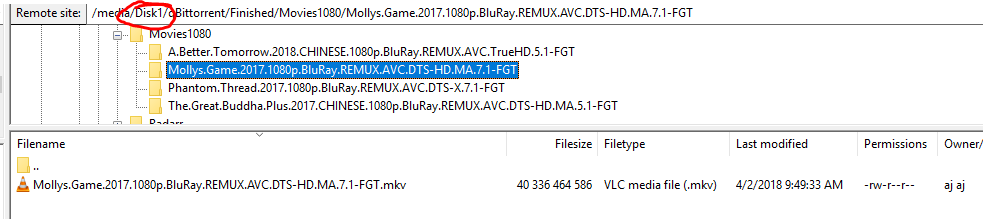

Here is the original on /media/Disk1:

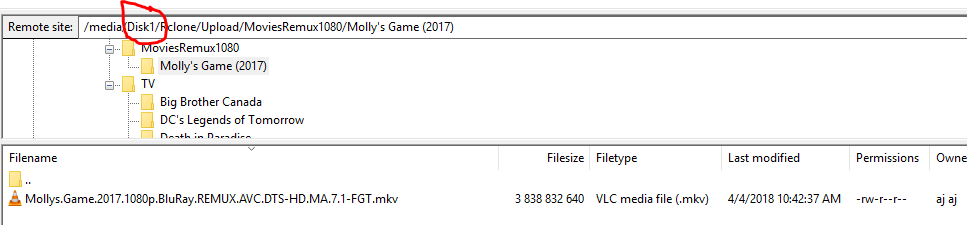

Here is the cache-tmp-upload-path on the same disk /media/Disk1:

It’s copying all 40G instead of just renaming it.

This was also discussed here: https://github.com/ncw/rclone/issues/1936

It was deemed an acceptable compromise given today’s local storage capacities.

Like I said, there’s definitely room to improve at some point. Whether it’s worth the additional risk can be the subject of its own discussion/issue.

Since the polling issue is fixed, you could always just script an rclone move on a schedule from a local path on the same disk and move the files that way. I’ve considered going back to that myself, as it would be an effective workaround for this issue and achieve the same thing.

I did something like this before:

OLDER='5d'

# Move older local files to the cloud

/usr/bin/rclone move /data/local/movies/ gupload:Movies --min-age $OLDER --syslog -v

It seemed to work fine, and with the polling issue fixed, it would achieve the same goal in the end with very minimal effort/scripting.
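A slightly more defensive version of that script, sketched under a few assumptions: it is meant to be run from cron, and the RCLONE and LOCKDIR variables are hypothetical additions (RCLONE=echo prints the command instead of running it). The rclone flags are exactly the ones from the command above. A mkdir-based lock keeps a slow upload from overlapping with the next scheduled run.

```shell
#!/bin/sh
set -eu

OLDER='5d'
# Hypothetical override: RCLONE=echo prints the command instead of running it
RCLONE="${RCLONE:-/usr/bin/rclone}"
# Hypothetical lock path; mkdir either creates it or fails, so only one run holds it
LOCKDIR="${LOCKDIR:-/tmp/rclone-move.lock}"

# Move local files older than $OLDER from $1 to remote $2, skipping if a run is active
move_older() {
    if ! mkdir "$LOCKDIR" 2>/dev/null; then
        echo "previous run still active, skipping" >&2
        return 0
    fi
    status=0
    "$RCLONE" move "$1" "$2" --min-age "$OLDER" --syslog -v || status=$?
    rmdir "$LOCKDIR"   # release the lock whether or not rclone succeeded
    return "$status"
}

# Only run when called with a source and a destination
if [ "$#" -eq 2 ]; then
    move_older "$1" "$2"
fi
```

Scheduled with a crontab line such as `*/30 * * * * /path/to/rclone-move.sh /data/local/movies/ gupload:Movies` (the script name is made up), each run uploads whatever has aged past 5 days.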