@Ajki I’m using your mount settings and everything is working great, but how do you update your Plex library? On a timer?

@chris243

In my rclone-cron.sh I run

tree --prune -i -h -D /storage/media-local/ > /home/plex/rcloneque.log

rclone move …

pmslibupdate.sh

The pmslibupdate.sh script checks:

if grep -q "series" /home/plex/rcloneque.log; then

curl "http://xxxx:32400/library/sections/2/refresh?force=0&X-Plex-Token=xxxx"

fi

Basically, the script just updates the library I uploaded into.
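The same "only refresh the library that received uploads" check can be sketched in Python. This is a minimal sketch, assuming the queue log written by tree is plain text and that a keyword-to-section mapping is enough; the `SECTIONS` mapping and both function names are hypothetical, and only "series" → section 2 comes from the script above ("movies" → 1 is an assumption).

```python
from urllib.parse import urlencode

# Hypothetical keyword -> Plex library section ID mapping.
# "series" -> 2 mirrors the shell script above; "movies" -> 1 is an assumption.
SECTIONS = {"series": 2, "movies": 1}


def sections_to_refresh(queue_log_text, sections=SECTIONS):
    """Return the section IDs whose keyword appears in the upload queue log (like grep -q)."""
    return sorted({sid for word, sid in sections.items() if word in queue_log_text})


def refresh_url(server, section_id, token, force=False):
    """Build the same refresh URL the curl call uses (force=0 by default)."""
    query = urlencode({"force": int(force), "X-Plex-Token": token})
    return f"{server}/library/sections/{section_id}/refresh?{query}"


if __name__ == "__main__":
    log = "/storage/media-local/series/Some Show/S01E01.mkv\n"
    for sid in sections_to_refresh(log):
        # Only prints the URL; hand it to curl (or urllib) to actually trigger the scan.
        print(refresh_url("http://xxxx:32400", sid, "xxxx"))
```

Keeping the mapping in one place means adding a new library only needs one more entry instead of another if/grep block.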

Interesting… I would love to take a look at all the different scripts you made and cannibalize them for my own setup =)… Any chance you could upload them somewhere? That would be awesome.

Also - are you still using the net settings you posted somewhere in the beginning of this thread?

Another option, which allows a more fine-tuned approach, is to use the Plex Media Scanner command directly.

#!/bin/sh
# Arguments: $1 = library section number, $2 = folder to search for new files
su - plex -c /bin/sh << eof
export LD_LIBRARY_PATH=/usr/lib/plexmediaserver
find "$2" -type f -mmin -$((60*24)) -print0 | xargs -0 dirname -z | sort -uz | xargs -0 -I {} /usr/lib/plexmediaserver/Plex\ Media\ Scanner -r -p -s -c "$1" -d "{}" --no-thumbs 2>&1
eof

Save this as a shell script and call it with two arguments: first the library section number ($1), then the folder to look in for new files ($2). In theory, this scans only the folders you point it at instead of rescanning the entire library. In practice, there is one oddity Plex has not addressed: it also tries to match unmatched files from the specified library [1]. This makes the scan slightly longer than it should be, but nowhere near as long as rescanning the entire library.

What counts as a “new file” is set by the 60*24 part: find's -mmin takes minutes, so 60*24 minutes works out to 24 hours. You can make the window longer or shorter by changing the numbers.
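The folder-selection half of that pipeline (find, dirname, sort -u) can be sketched in Python to preview which folders a given window would hand to the scanner. This is a minimal sketch, assuming modification time is what "new" means, as with -mmin; `recently_changed_dirs` is a hypothetical helper, and find's exact rounding of -mmin is not reproduced.

```python
import os
import time


def recently_changed_dirs(root, minutes=60 * 24, now=None):
    """Folders under root holding regular files modified within the last `minutes`.

    Roughly: find ROOT -type f -mmin -MINUTES | xargs dirname | sort -u
    """
    now = time.time() if now is None else now
    cutoff = now - minutes * 60  # -mmin counts minutes, so 60*24 = one day
    dirs = set()
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            # find -type f skips symlinks and special files; do the same here
            if os.path.islink(path) or not os.path.isfile(path):
                continue
            if os.path.getmtime(path) > cutoff:
                dirs.add(dirpath)
    return sorted(dirs)


if __name__ == "__main__":
    for folder in recently_changed_dirs("/storage/media-local/"):
        print(folder)
```

Each printed folder corresponds to one -d argument that the shell version passes to Plex Media Scanner.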

I have actually done some tests of my own. I’m just a beginner, but it has worked so far.

A VPS with Plex Media Server installed, mounted with:

rclone mount --allow-non-empty --allow-other --read-only [secret:path/to/mount] [/mnt/TEST]

So far so good with rclone v1.35. Has anyone else tested it?

Plex sees the files correctly, and I have tested with a 100-movie section without any issues: no ban, and good speed/performance for both scanning and streaming.

Now I need to take the next step, which is going to production, but for that I need to write the scripts for mounting, Plex, PlexPy and so on.

Any other suggestions, or issues anyone has run into?

Are you using GDrive or ACD?

I’m using GDrive, but I have also tested with Amazon.

Is it just me, btw, or is this mount not that stable… Sometimes everything is A-OK, pretty fast even; other times I keep getting that horrid ‘There was a problem playing this item - PLAYER_ERROR_INVALID_OPERATION’ message on every movie that’s on Amazon. I'm running from a dedicated server.

Hey pushnoi,

There is a user named ‘Ajki’ in this thread who has done some extensive testing over the past couple of weeks. He has posted his mount script in this thread… not sure if that's his current one. At any rate, I’ve been using Ajki’s mount settings for the past 2 weeks and I have only lost “sync” once…

Now that's pretty awesome, considering I’m a convert from ACD-CLI (which is an awesome idea BTW)… that was some neat software, and when it worked… it was brilliant. But it got to the point where I had scripts running every couple of minutes just to keep everything floating… then it had to be reset every freaking minute… then the .db would nuke itself…

That being said it was brilliant when it was working…

As to your question… running Ajki’s mount scripts as described in this thread, my rclone mount has been up and running for 2 weeks straight with only 1 loss of sync… and I can’t even say definitively that it was rclone's fault… it might have been me banging rocks together trying to make something work.

Cheers

Yeah, I built my current setup based on scripts from @Ajki (and thanks for that, those are awesome). I also came from acd_cli, and I'm now using his latest mount. I tried something different, though: I re-analyzed all my media, which seems to have worked quite well; my problems seem to be gone since then. I’m using a server @ with 1.5TB, so I use it as a buffer for my newer additions and only upload files from my encrypted folders once they are a month old (a cron job running rclone move --min-age 1M etc.). I don’t know why, but maybe media that is analyzed while it’s stored locally needs to be re-analyzed after being moved to ACD.

Might be a good idea to have this done in the same script I use to upload stuff to my ACD, but I have no idea how I’d script this. I’m using:

curl http://127.0.0.1:32400/library/sections/all/refresh -H "X-Plex-Token: my_plex_token"

to update my library after adding new stuff, and as far as I know it’s only possible to do either this, or do a full refresh:

curl http://127.0.0.1:32400/library/sections/all/refresh?force=1 -H "X-Plex-Token: my_plex_token"
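Both curl commands can also be issued from a script. This is a minimal sketch using only Python's standard library, assuming the server address and token placeholders above; `refresh_request` is a hypothetical helper name. Like the curl commands, it sends the token as an X-Plex-Token header and uses a plain GET.

```python
import urllib.request


def refresh_request(server, token, section="all", force=False):
    """Build (but do not send) a library refresh request with the token in a header."""
    url = f"{server}/library/sections/{section}/refresh"
    if force:
        url += "?force=1"  # full refresh, like the second curl command
    return urllib.request.Request(url, headers={"X-Plex-Token": token})


if __name__ == "__main__":
    req = refresh_request("http://127.0.0.1:32400", "my_plex_token")
    print(req.get_method(), req.full_url)
    # urllib.request.urlopen(req) would actually send it to the server.
```

Building the request separately from sending it makes it easy to log or dry-run what an upload script is about to trigger.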

If someone knows a way to trigger Analyze Media from the CLI, I would be forever in their debt =) Over @ Plex I can only find this article, which only uses the web interface to do it: https://support.plex.tv/hc/en-us/articles/200289336-Analyze-Media

I made a simple Python script, which I run once per day, that analyzes all media where the analysis info is missing.

You could modify it to reanalyze all files by changing "where bitrate is null" to "where 1=1". Also, I'm using HTTP calls (via requests) to reanalyze, as the Plex Media Scanner CLI does not work on a default Ubuntu 16.04 installation (confirmed by a dev).

If the CLI works for you, you could replace the HTTP calls with CLI calls.

#!/usr/bin/env python3
import sqlite3

import requests

# Plex library database (path as used on this server)
conn = sqlite3.connect('/var/lib/plexmediaserver/Library/Application Support/Plex Media Server/Plug-in Support/Databases/com.plexapp.plugins.library.db')
c = conn.cursor()
# Media items with no bitrate recorded are treated as not yet analyzed
c.execute('select metadata_item_id from media_items where bitrate is null')
items = c.fetchall()
conn.close()

print("To analyze: " + str(len(items)))

for row in items:
    # Ask Plex to analyze the item, then refresh its metadata
    requests.put(url='http://xxx:32400/library/metadata/' + str(row[0]) + '/analyze?X-Plex-Token=xxx')
    requests.get(url='http://xxx:32400/library/metadata/' + str(row[0]) + '/refresh?X-Plex-Token=xxx')
    # Keep this print inside the loop: outside it, `row` is undefined when nothing needs analyzing
    print(str(row[0]))
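The query can be made switchable instead of edited by hand, covering both "where bitrate is null" and the "where 1=1" (reanalyze everything) variant. This is a minimal sketch, assuming the media_items table has the metadata_item_id and bitrate columns used in the script above; the function names are hypothetical, and DISTINCT is added here so an item with several media rows is only queued once.

```python
import sqlite3
from urllib.parse import urlencode


def ids_to_analyze(conn, reanalyze_all=False):
    """Return metadata item IDs to (re)analyze, mirroring the script's query."""
    sql = "SELECT DISTINCT metadata_item_id FROM media_items"
    if not reanalyze_all:
        # As in the script above, a NULL bitrate is taken to mean "never analyzed"
        sql += " WHERE bitrate IS NULL"
    return [row[0] for row in conn.execute(sql + " ORDER BY metadata_item_id")]


def item_url(server, item_id, action, token):
    """Build the per-item URL; action is 'analyze' or 'refresh' as in the script."""
    return f"{server}/library/metadata/{item_id}/{action}?{urlencode({'X-Plex-Token': token})}"
```

The loop stays the same as in the script: PUT the analyze URL and GET the refresh URL for each returned ID. When the list is empty, the loop simply does nothing.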

P.S. Since I don't have any files stored locally in Plex, I never run into that problem, but you could also change the query above to reanalyze a video after it is moved to the cloud.

@Ajki Thanks for that. With some trial and error (I’m not much of a scripter), I got this working as-is. I ran it a couple of times:

plex@pushnoi:~/scripts$ python3 plexanalyse.py

To analyze: 104

57590

plex@pushnoi:~/scripts$ python3 plexanalyse.py

To analyze: 4

56764

plex@pushnoi:~/scripts$ python3 plexanalyse.py

To analyze: 0

Traceback (most recent call last):

File "plexanalyse.py", line 16, in <module>

print(str(row[0]))

NameError: name 'row' is not defined

Kind of weird that it found another 4 items after running the first time, but oh well. And I’m guessing that last output is normal if there are no rows where bitrate is null or something? Do you have this running as a cronjob every couple of minutes or so, or just when you’ve added new content?

No, I’m running it once per day, at night.

You should check which 4 files were not analyzed, as it's more than likely that those files are corrupted and can't be analyzed by Plex.

Try to analyze it manually by visiting

http://xxx:32400/library/metadata/YYY/analyze?X-Plex-Token=XXX

replacing xxx with your server address, XXX with your Plex token, and YYY with the ID that is not getting analyzed. You can also open that video, click the three dots on the left side and run Analyze manually.

If there is no info below the movie poster (e.g. 1080p, AAC 2.0, etc.), the video has not been analyzed. You can also check Info, where you will see that the bitrate and other details about the video are missing.

P.S. Not sure what you changed in the source; I never got a NameError.

@Ajki Why does ACD keep locking your account? I assume you have the unlimited storage option right?

I’m not getting my account locked anymore; the last time was more than a month ago.

@Ajki Have you changed your mount settings much recently? I get a lot of timeouts… Perhaps your timeouts are too strict. I’m using the last mount command you posted.

2017/01/17 03:43:51 somefile: ReadFileHandle.Read error: low level retry 1/1: read tcp SOMEIP:50946->SOMEIP:443: i/o timeout 2017/01/17 03:43:51 somefile: ReadFileHandle.Read error: read tcp SOMEIP:50946->SOMEIP:443: i/o timeout 2017/01/17 04:05:47 somefile: ReadFileHandle.Read error: low level retry 1/1: read tcp SOMEIP:38340->SOMEIP:443: i/o timeout 2017/01/17 04:05:47 somefile: ReadFileHandle.Read error: read tcp SOMEIP:38340->SOMEIP:443: i/o timeout 2017/01/17 04:14:43 somefile: ReadFileHandle.Read error: low level retry 1/1: couldn't reopen file with offset: needs retry 2017/01/17 04:14:43 somefile: ReadFileHandle.Read error: couldn't reopen file with offset: needs retry 2017/01/17 04:14:43 somefile: ReadFileHandle.Read error: couldn't reopen file with offset: needs retry 2017/01/17 04:14:43 somefile: ReadFileHandle.Read error: couldn't reopen file with offset: needs retry 2017/01/17 04:14:43 somefile: ReadFileHandle.Read error: couldn't reopen file with offset: needs retry 2017/01/17 04:14:43 somefile: ReadFileHandle.Read error: couldn't reopen file with offset: needs retry 2017/01/17 04:14:43 somefile: ReadFileHandle.Read error: couldn't reopen file with offset: needs retry 2017/01/17 04:14:43 somefile: ReadFileHandle.Read error: couldn't reopen file with offset: needs retry 2017/01/17 04:14:43 somefile: ReadFileHandle.Read error: couldn't reopen file with offset: needs retry 2017/01/17 04:14:43 somefile: ReadFileHandle.Read error: couldn't reopen file with offset: needs retry 2017/01/17 04:14:43 somefile: ReadFileHandle.Read error: couldn't reopen file with offset: needs retry 2017/01/17 04:14:43 somefile: ReadFileHandle.Read error: couldn't reopen file with offset: needs retry 2017/01/17 04:14:43 somefile: ReadFileHandle.Read error: couldn't reopen file with offset: needs retry 2017/01/17 04:14:43 somefile: ReadFileHandle.Read error: couldn't reopen file with offset: needs retry 2017/01/17 04:14:43 somefile: ReadFileHandle.Read error: couldn't reopen file 
with offset: needs retry 2017/01/17 04:16:59 somefile: ReadFileHandle.Read error: low level retry 1/1: read tcp SOMEIP:60056->SOMEIP:443: i/o timeout 2017/01/17 04:16:59 somefile: ReadFileHandle.Read error: read tcp SOMEIP:60056->SOMEIP:443: i/o timeout 2017/01/17 04:16:59 somefile: ReadFileHandle.Read error: read tcp SOMEIP:60056->SOMEIP:443: i/o timeout 2017/01/17 04:16:59 somefile: ReadFileHandle.Read error: read tcp SOMEIP:60056->SOMEIP:443: i/o timeout 2017/01/17 04:16:59 somefile: ReadFileHandle.Read error: read tcp SOMEIP:60056->SOMEIP:443: i/o timeout 2017/01/17 04:16:59 somefile: ReadFileHandle.Read error: read tcp SOMEIP:60056->SOMEIP:443: i/o timeout 2017/01/17 04:25:53 somefile2: ReadFileHandle.Read error: low level retry 1/1: read tcp SOMEIP:47698->54.72.23.233:443: i/o timeout 2017/01/17 04:25:53 somefile2: ReadFileHandle.Read error: read tcp SOMEIP:47698->SOMEIP:443: i/o timeout 2017/01/17 04:25:53 somefile2: ReadFileHandle.Read error: read tcp SOMEIP:47698->SOMEIP:443: i/o timeout 2017/01/17 04:25:53 somefile2: ReadFileHandle.Read error: read tcp SOMEIP:47698->SOMEIP:443: i/o timeout 2017/01/17 04:25:53 somefile2: ReadFileHandle.Read error: read tcp SOMEIP:47698->SOMEIP:443: i/o timeout 2017/01/17 04:25:53 somefile2: ReadFileHandle.Read error: read tcp SOMEIP:47698->SOMEIP:443: i/o timeout 2017/01/17 04:25:53 somefile2: ReadFileHandle.Read error: read tcp SOMEIP:47698->SOMEIP:443: i/o timeout 2017/01/17 04:41:59 somefile2: ReadFileHandle.Read error: low level retry 1/1: unexpected EOF 2017/01/17 04:41:59 somefile2: ReadFileHandle.Read error: unexpected EOF 2017/01/17 04:50:45 somefile2: ReadFileHandle.Read error: low level retry 1/1: unexpected EOF 2017/01/17 04:50:45 somefile2: ReadFileHandle.Read error: unexpected EOF 2017/01/17 05:01:22 somefile3 ReadFileHandle.Read error: low level retry 1/1: couldn't reopen file with offset: needs retry 2017/01/17 05:01:22 somefile3 ReadFileHandle.Read error: couldn't reopen file with offset: needs retry 
2017/01/17 05:10:37 somefile3 ReadFileHandle.Read error: low level retry 1/1: read tcp SOMEIP:45354->SOMEIP:443: i/o timeout 2017/01/17 05:10:37 somefile3 ReadFileHandle.Read error: read tcp SOMEIP:45354->SOMEIP:443: i/o timeout 2017/01/17 06:06:26 somefile4: ReadFileHandle.Read error: low level retry 1/1: read tcp SOMEIP:44446->SOMEIP:443: i/o timeout 2017/01/17 06:06:26 somefile4: ReadFileHandle.Read error: read tcp SOMEIP:44446->SOMEIP:443: i/o timeout 2017/01/17 06:06:26 somefile4: ReadFileHandle.Read error: read tcp SOMEIP:44446->SOMEIP:443: i/o timeout 2017/01/17 06:06:26 somefile4: ReadFileHandle.Read error: read tcp SOMEIP:44446->SOMEIP:443: i/o timeout 2017/01/17 06:06:26 somefile4: ReadFileHandle.Read error: read tcp SOMEIP:44446->SOMEIP:443: i/o timeout 2017/01/17 06:06:26 somefile4: ReadFileHandle.Read error: read tcp SOMEIP:44446->SOMEIP:443: i/o timeout 2017/01/17 06:06:26 somefile4: ReadFileHandle.Read error: read tcp SOMEIP:44446->SOMEIP:443: i/o timeout 2017/01/17 06:06:26 somefile4: ReadFileHandle.Read error: read tcp SOMEIP:44446->SOMEIP:443: i/o timeout 2017/01/17 06:34:01 somefile5: ReadFileHandle.Read error: low level retry 1/1: read tcp SOMEIP:45250->SOMEIP:443: i/o timeout 2017/01/17 06:34:01 somefile5: ReadFileHandle.Read error: read tcp SOMEIP:45250->SOMEIP:443: i/o timeout
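When a log like this arrives as one long line, it can help to split it back into entries and count the error kinds. This is a minimal sketch, assuming every entry starts with a "YYYY/MM/DD HH:MM:SS" timestamp as in the log above; the category names and functions are hypothetical, and `final_only` simply skips the "low level retry" entries so each failure is not counted twice.

```python
import re
from collections import Counter

# Entries in the log above start with a timestamp such as "2017/01/17 03:43:51 "
TIMESTAMP = re.compile(r"\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2} ")


def split_entries(text):
    """Split a log whose line breaks were lost back into one entry per timestamp."""
    text = " ".join(text.split())  # also rejoins entries wrapped mid-line
    starts = [m.start() for m in TIMESTAMP.finditer(text)]
    ends = starts[1:] + [len(text)]
    return [text[a:b].strip() for a, b in zip(starts, ends)]


def classify(entry):
    """Map one ReadFileHandle.Read error entry to a coarse category."""
    if "i/o timeout" in entry:
        return "i/o timeout"
    if "unexpected EOF" in entry:
        return "unexpected EOF"
    if "couldn't reopen file" in entry:
        return "reopen failed"
    return "other"


def summarize(text, final_only=False):
    """Count entries per category; final_only skips the 'low level retry' lines."""
    entries = split_entries(text)
    if final_only:
        entries = [e for e in entries if "low level retry" not in e]
    return Counter(classify(e) for e in entries)
```

Counting by category makes it easier to see whether a timeout change (such as raising --timeout) actually reduces the i/o timeouts or just shifts errors elsewhere.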

I don't think my actual files are corrupted; I just noticed that it sometimes takes more than one run of your script before it reports 0 files with no bitrate in the metadata. I still do a curl library update after adding a new file. Afterwards I'm going to run your analyze script as well, since the library update seems to cause a couple of files (random ones, btw) to be marked with a missing bitrate. I don't know exactly how this happens, but I guess I'll just keep running the script after the refresh and as a cron job and see how it goes.

On another note, I noticed that the older Windows and Apple application (Plex Home Theater) has no option to force Direct Stream (if it supports it at all). It only uses Direct Play or transcoding. Have you guys noticed that Direct Play really does not work well with content placed on Amazon, or is that just me? Playing from the newer Samsung/LG apps seems to be fine when set to Direct Stream and/or transcoding. Chromecast as well, and iOS devices too (since they always seem to be transcoding anyway).

I opened a topic on the Plex forums to ask whether there's an option to disable Direct Play / force Direct Stream altogether on the server side rather than on the client side, but got 0 answers =). Do any of you guys have a solution for this?

I did some more testing today and hit the case where a movie would get stuck at the beginning with "Original" playback quality (if I changed to 4Mbit 720p it would work). My client is on a 1Gbps network and has more than enough bandwidth to the server.

Below are the video and logs from rclone and Plex.

Mount options for testing (note: the files are layered through ENCFS):

rclone mount \
    --read-only \
    --allow-non-empty \
    --allow-other \
    --max-read-ahead 14G \
    --acd-templink-threshold 0 \
    --checkers 16 \
    -v \
    --dump-headers \
    --log-file=/var/log/acdmount.log \
    acd:/ /storage/.acd/ &

exit

My production mount settings:

rclone mount \
    --read-only \
    --allow-non-empty \
    --allow-other \
    --max-read-ahead 14G \
    --acd-templink-threshold 0 \
    --checkers 16 \
    --quiet \
    --stats 0 \
    acd:/ /storage/.acd/ &

exit
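The two commands differ only in their logging flags, so one way to keep them in sync is to generate both from a shared list. This is a minimal sketch, assuming you launch the mount from Python; `mount_args` and `COMMON` are hypothetical names, and the flags are copied verbatim from the commands above (they may differ in other rclone versions).

```python
import shlex

# Flags shared by the testing and production mount commands above.
COMMON = [
    "--read-only",
    "--allow-non-empty",
    "--allow-other",
    "--max-read-ahead", "14G",
    "--acd-templink-threshold", "0",
    "--checkers", "16",
]


def mount_args(remote="acd:/", mountpoint="/storage/.acd/", debug=False,
               log_file="/var/log/acdmount.log"):
    """Return the rclone mount argv for the testing (debug) or production setup."""
    if debug:
        extra = ["-v", "--dump-headers", f"--log-file={log_file}"]
    else:
        extra = ["--quiet", "--stats", "0"]
    return ["rclone", "mount", *COMMON, *extra, remote, mountpoint]


if __name__ == "__main__":
    print(shlex.join(mount_args()))
    # subprocess.Popen(mount_args()) would start the mount in the background,
    # roughly like the trailing "&" in the shell version.
```

Switching between testing and production then means flipping `debug` rather than maintaining two copies of the same flag list.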

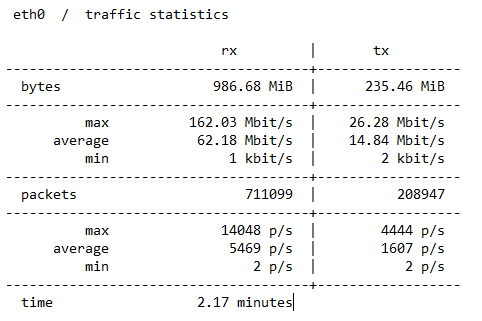

vnstat during the test

https://puu.sh/tpia4/cb1867fc0b.mp4

Rclone log: https://drive.google.com/file/d/0B89x0Pnx4k71Tm1LNW11bGVWWmM/view?usp=sharing

Plex log: https://drive.google.com/file/d/0B89x0Pnx4k71QklNckllWEFabm8/view?usp=sharing

As you can see, I did not get those EOF errors in this test (I remember seeing them on my home Alpine machine). The interesting part in the Plex logs is:

DEBUG - We want 120 segments ahead, last returned was 1 and max is 93

I wonder whether rclone could be tweaked to return the maximum number of segments.

P.S. Hopefully my library will be converted soon so I can switch completely to crypt and do some proper testing. (I'm away next week, so if it's not done by then I'll do it in February.)

17.01.2017/12:00 compareacdvscrypt START

ENCFS STATS

Total objects: 40703

Total size: 38307.922 GBytes (41132818298382 Bytes)

CRYPT STATS

Total objects: 38692

Total size: 32530.897 GBytes (34929784507837 Bytes)

Uploaded in last hour:

17.01.2017/12:00 compareacdvscrypt END in 320 seconds
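Comparing the two blocks above, the crypt copy holds about 95.1% of the ENCFS object count and about 84.9% of its bytes; if the conversion maps files one-to-one, that is a rough progress figure. A minimal sketch of that arithmetic, assuming the stats text keeps the format shown; `parse_stats` and `progress` are hypothetical helpers, not part of the compareacdvscrypt script.

```python
import re

# Matches the "Total objects / Total size" pair printed for each side.
STATS = re.compile(r"Total objects: (\d+).*?Total size: [\d.]+ GBytes \((\d+) Bytes\)", re.S)


def parse_stats(block):
    """Return (objects, bytes) from one 'Total objects / Total size' block."""
    m = STATS.search(block)
    if m is None:
        raise ValueError("no stats found")
    return int(m.group(1)), int(m.group(2))


def progress(source, target):
    """Percent of the source (ENCFS) objects and bytes present in the target (crypt)."""
    return tuple(100.0 * t / s for s, t in zip(source, target))
```

Using the exact byte counts rather than the rounded GBytes figures avoids rounding drift when the two sides get close.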

Maybe @ncw has an idea of how to optimize it even more.

I had to bump up the --timeout setting to 45s to get rid of the i/o timeouts.

(For reference, rclone documents the default --timeout as 5m; 1m is the default for --contimeout.)