I am mounting through crypt, so perfect, that is what I needed. I will probably give it a try; I am tired of keeping my personal server running 24/7 (my upload speed sucks).

Thanks very much

What sort of speeds do you get using your script? I’m using my Hetzner server with its 1 Gbps line and I get about 8 MiB/s on average.

What’s weird is that when Plex goes to play a file, tcptrack shows I barely go over 1 MiB/s, and Plex rarely even loads the video. I’m starting to get so annoyed because it just barely works at all.

Are you in Europe or the US?

Do you have an amazon.com account or one of the EU ones?

Amazon UK account was used for signing up to Amazon cloud drive.

I can only upload a file at 4MB/s too, what do you think the issue could be?

Did you get your Hetzner server from the marketplace? Some of their datacenters are on a shared 1 Gbps line.

Try speedtest-cli to get an idea of how much bandwidth you actually have.

Just ran it, got 832Mbps down, 700Mbps up.
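To compare that speedtest figure with the rate rclone shows, it helps to put both in the same unit. A minimal sketch, assuming speedtest-cli reports decimal megabits per second and the rclone figure is in binary mebibytes per second (the function names are hypothetical, just for illustration):

```python
# Assumption: the speedtest result is in decimal megabits per second (Mbps)
# and the transfer rate quoted in the thread is in mebibytes per second (MiB/s).

def mbps_to_mib_per_s(mbps: float) -> float:
    """Convert megabits per second to mebibytes per second."""
    bytes_per_s = mbps * 1_000_000 / 8
    return bytes_per_s / (1024 * 1024)


def mib_per_s_to_mbps(mib_per_s: float) -> float:
    """Convert mebibytes per second to megabits per second."""
    return mib_per_s * 1024 * 1024 * 8 / 1_000_000


if __name__ == "__main__":
    downlink = mbps_to_mib_per_s(832)   # speedtest result from the thread
    observed = 8.0                      # average rclone rate from the thread
    print(f"832 Mbps is about {downlink:.1f} MiB/s")
    print(f"8 MiB/s is about {mib_per_s_to_mbps(observed):.1f} Mbps")
    print(f"share of measured downlink in use: {observed / downlink:.0%}")
```

On those numbers the line can carry roughly 99 MiB/s, so an 8 MiB/s average is only about 8% of what the speedtest measured, which points away from the server's raw bandwidth as the limit.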

That is really weird. What speed do you get if you copy a file directly from ACD (without crypt)?

e.g. rclone copy acd:/someencryptedfolder/someencryptedfile /some/local/folder

Also, while a copy from crypt is in progress, open another SSH session and check RAM (with free) and CPU usage.

Tried unencrypted (after a reboot) saw peaks of 25MiB/s, average was 17MiB/s

Ran the test a few minutes later and I’m back to a shitty 5MiB/s; it doesn’t seem to be improving after a few reruns.

I’ve got an i7-3770 in my machine and 16GB of RAM, and they aren’t getting hammered by rclone during the download. Any more things I can test?

It’s quite probable that it’s your server.

Does it have an INIC (Intel NIC)? Those tend to fare better. One thing you can try (you’d need to confirm this): I believe Hetzner doesn’t charge you for the first 14 days of using a server if you buy an auction one. You could get another one and test it, then keep it or kill it depending on the results without being charged. Again, please verify.

My experiences with Hetzner are spotty, but the best servers I had were in DC19, 20 and 21 with an Intel INIC and “ENT HDDs”.

You can filter the auctions / search for those and try a different server.

Just managed to snag a Xeon with 16GB RAM and 2 ENT HDDs with an INIC and guaranteed 1Gbps up/down.

I’ll have to try with this new server, are you using encfs or crypt?

Using crypt.

My server is now with OVH in a Canadian data centre as all my Plex clients are in North America and peering from Germany was unstable.

Don’t waste your time getting a new server.

If you use --transfers=50 it will fill up your 1 Gbit line.

As I already mentioned, I get similar speeds, and then randomly a file copies at 35MB/sec.

So this looks like traffic-shaping behaviour.

Either it applies to all users or only to heavy data users, and it doesn’t seem to have anything to do with crypt.

Maybe someone could create a new Amazon account and check whether they get the same results.

Another possibility is that they limit speed for rclone specifically. We can test this from Hetzner with a Windows server running the official Amazon client.

My 0,02c…

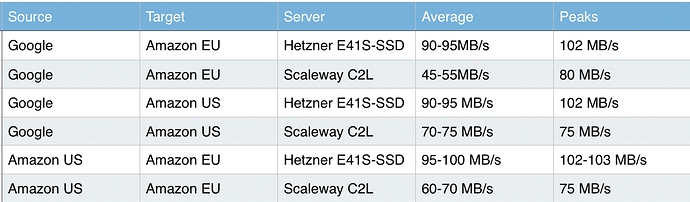

Here are the results from my tests copying between Google Drive, Amazon US and Amazon EU.

rclone --verbose --transfers=15 --no-traverse --drive-chunk-size=16M --no-update-modtime --ignore-existing copy source:PATH target:PATH

I will explain in more detail how I settled on 15 parallel transfers.

With --transfers=15, I measure the overhead from Amazon being the target (object-not-found issues and retries) at around 20-25%.

With this number I max out the bandwidth on Hetzner and reach 80% of the bandwidth on Scaleway. To reach 100% on Scaleway I would have to go to --transfers=25, but then it becomes really unreliable and the overhead goes above 70%.

So 15 is where I’m comfortable.

It is clear to me that the Intel i7 CPU performs much better for single streams: with just a few transfers my Hetzner instance transfers at much higher rates, which is not the case with Scaleway’s Avoton processors.

In any case, very surprised with these results, pretty amazing, at least not expected; thanks to the good work of @ncw and pretty happy also with cloud giants and smaller ones (hetzner & scaleway).

I wanted to add to this conversation as I’ve been following it for a few months. My hosted Plex configuration might differ slightly, as I don’t have my downloading, uploading and Plex roles separated onto different VPSes or dedis as some of you have. I’m not sure I’d call my config simplistic, since all of the roles live on one box. It definitely adds more challenges. I don’t want to get off topic, but I feel an overview of my config is needed to compare it with others who are using the rclone mount and aiming for the best possible streaming experience.

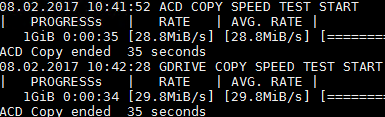

First, my dedi is hosted on SYS in CA, on an E5-SAT-2-32 server. I would have liked SSD, but since this box also downloads content, it is beneficial to have larger local storage, as a kind of buffer before I upload to ACD. I am running EVERYTHING in docker with the exception of rclone. I’ve limited my downloader (nzbget) to 4 cores of the CPU, so that downloading, extracting, repairing etc. doesn’t interfere with Plex transcoding. Content is stored locally and I perform an rclone copy to ACD when needed. ACD is mounted using rclone and I’m using encFS for encryption. I’m doing reverse encryption locally and obviously forward encryption for ACD. I’ve run @Ajki’s speed test and I’m averaging around 30MB/s from ACD. Plex start times are rather quick: I can start a movie stored in ACD in 5-10 seconds. Obviously, content stored locally starts up instantly.

The first issue I ran into was library performance (browsing, etc.). Because I’m not running this on SSD, library performance took a hit. I’ve followed this post to help improve that and it has helped tremendously. I’ve set my default_cache_size to 500000.

I’ve followed this thread’s advice and have set my rclone mount settings to:

/usr/sbin/rclone mount --allow-non-empty --allow-other --acd-templink-threshold 0 --log-file=/home/plex/scripts/logs/acdmount.log acd:/ /home/plex/.acd &

This has helped quite a bit with direct play and direct stream; however, I still have issues once in a while with direct play. My thinking is that the cause is ACD, and I’m not sure how to correct this. I saw earlier that @Ajki had asked about write-back-cache. Since my ACD mount is not read-only, is this beneficial to my type of setup? This wasn’t really explained or touched on earlier in this thread.

I’d also like to know: are most of you running Plex in docker or as a local install?

That server is so beefy I’d just disable Direct Play/Direct Stream. I too have had these issues. I have the OVH SP-32 with 2x480GB SSDs in RAID 0 (I do nightly backups, so if the RAID fails, no big deal). I use this box for downloading too and just run a script to move things to ACD when the drive gets full. The SSDs make so much of a difference. I used to use SYS but I ditched it because the HDDs are slow and I would consistently get CPU IO wait issues when transcoding, scanning or doing almost any disk activity. If you use nzbget/sonarr/etc. and do extraction on the same disk that holds your Plex library, my experience with SYS is that you’ll notice some CPU IO wait.

It’s just that Amazon is a bit flaky sometimes; it’s not always stable.

I also run Plex in docker (as well as the other tools). I suggest installing the Glances docker and keeping it running while you’re transcoding/extracting. It will keep a running log of the IO wait issues, which could potentially be a problem.

Yeah, when direct play issues come up I just disable it on my client and transcode just fine. I don’t have an issue with direct stream, since it is essentially being transcoded into a new container, so it’s just direct play that gives me trouble. I’ve transcoded up to 14 streams with no issues; the server barely breaks a sweat. I do have Glances installed and I do see IO wait issues there. They are very brief, though, and don’t seem to be directly affecting streaming or transcoding. Like you said, this typically happens when the disk is being hammered, normally when something is being downloaded. I will mention that making that SQLite change did help with library performance issues, even when IO waits were happening. Essentially this puts pages into RAM for better performance. The default for SQLite is only 2000 pages. I was closer to 100,000 pages in my SQLite db. Maybe one day Plex will allow us to use other DBs (postgres, maria, etc.).

I was previously on OVH and dropped to SYS for a few reasons. The spinning drives on OVH and SYS are the same (HGST 7200 drives), I was nowhere near using the guaranteed 500Mbps so the 250Mbps that SYS provides was fine, and the biggest reason was cost. The cost of SYS for the performance of this box can’t be beat and, as I mentioned, I wasn’t utilizing OVH to the fullest.

@Tank

How big is your media library in TB? I still have my SYS server until Feb 14, so I’ll try this. Just did a PRAGMA page_count; and I have 147k pages.

If I did the calculation in the post on the Plex forums, I’d almost have to set it to 2.9 million cache size. I’ll try it to see if my server explodes.

This is a great find though, thanks for sharing!

My library is about 30TB on ACD. I don’t think you need to go 20x; even setting your cache to a million might be sufficient for a while. If I did my math correctly, even 1 million pages in cache is only 1.5GB of RAM. Honestly, if you have the RAM to spare, why not?
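How much RAM a given cache size costs depends on the database's page size, so it is worth checking before picking a number. A rough sketch, assuming the cache setting is counted in pages and that memory use is approximately pages times page size (SQLite adds some per-page bookkeeping on top, so treat this as a lower bound). The function names are hypothetical, and it is safer to point it at a copy of the Plex database rather than the live file:

```python
import sqlite3


def cache_memory_bytes(cache_pages: int, page_size: int) -> int:
    """Approximate RAM needed to hold cache_pages pages (lower bound)."""
    return cache_pages * page_size


def describe_db(db_path: str) -> dict:
    """Read the page size and page count of an SQLite database.

    Note: sqlite3.connect() creates the file if it does not exist,
    so double-check the path before running this.
    """
    con = sqlite3.connect(db_path)
    try:
        page_size = con.execute("PRAGMA page_size").fetchone()[0]
        page_count = con.execute("PRAGMA page_count").fetchone()[0]
    finally:
        con.close()
    return {
        "page_size": page_size,
        "page_count": page_count,
        "db_bytes": page_size * page_count,
    }


if __name__ == "__main__":
    # ":memory:" is only a stand-in here; replace it with the path to a
    # copy of your own library database.
    info = describe_db(":memory:")
    print(info)
    for pages in (500_000, 1_000_000, 2_000_000):
        need = cache_memory_bytes(pages, info["page_size"])
        print(f"{pages} pages -> about {need / 1e9:.2f} GB")
```

With a 1024-byte page size, 1,000,000 pages works out to about 1.0 GB before overhead; with 4096-byte pages it is about 4.1 GB, so the same setting can cost quite different amounts of RAM on different databases.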

I’m always on the hunt for more performance and at this point it’s really the mount options or ACD. I guess this is why we’re all here. I could go SSD, but I don’t think it’s needed until I run out of RAM.

@Ajki have you noticed any improvement with Google Drive vs ACD?

Great, thanks… I will try a cache size of 2 million. I have 32GB of RAM, so lots to spare.

I’ve played with many mount options but I don’t really feel any of them make a significant difference, so I just use `rclone mount remote: folder --allow-other --read-only`, pretty simple.

For Google Drive, I notice it is significantly more performant for me, but I can’t use it without getting banned.