Hey guys,

Since I slimmed down my setup (threw out Plexdrive and moved from UnionFS to MergerFS), I've been testing streaming and general usage.

Home server:

- 100 Mbit/s down
- Debian 10
- Gdrive crypt

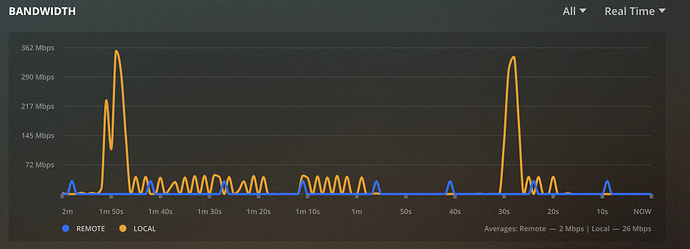

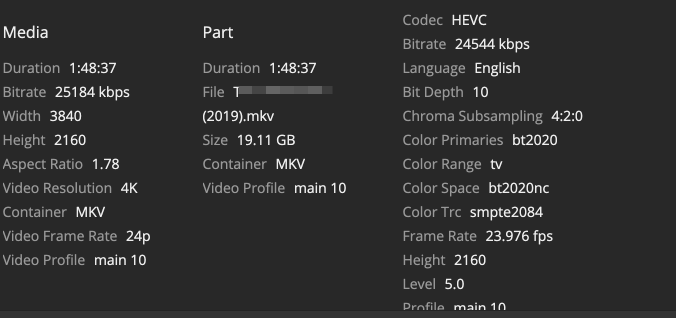

So I tested 4K streaming with a very recent movie that needs about 50 Mbit/s. Although Netdata showed the server mostly pulling 90+ Mbit/s, I experienced a few lags that slightly spoiled the viewing.

- Plex on LG OLED C8 (webOS): just a few lags
- Plex on Nvidia Shield (system app): unwatchable
- Plex on Nvidia Shield via Kodi: garbled picture and sound for about 30 seconds after each lag

I assume this comes down to client-side caching, and I could live with it when watching through the TV's Plex app. But I have to admit that the same thing works just fine when streaming from my rented dedicated server.
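For a rough sense of the headroom involved, here is a hypothetical bash helper. The name `playback_seconds` is illustrative and not an rclone feature. It converts a buffer size in MiB into seconds of playback at a given bitrate in Mbit/s. This is back-of-the-envelope arithmetic only, and it assumes a constant bitrate and a full buffer. rclone's `--buffer-size` is an in-memory buffer per open file. It can only fill while the link delivers more than the stream consumes, and a 100 Mbit/s line leaves only about 2x headroom over a 50 Mbit/s movie.

```bash
#!/bin/bash
# Hypothetical helper: how many seconds of playback does a buffer cover?
# Assumes a constant video bitrate; integer arithmetic, result rounded down.
playback_seconds() {
  local buffer_mib=$1     # buffer size in MiB (e.g. --buffer-size 2G = 2048)
  local bitrate_mbit=$2   # stream bitrate in Mbit/s (decimal megabits)
  local bits=$(( buffer_mib * 1024 * 1024 * 8 ))
  echo $(( bits / (bitrate_mbit * 1000000) ))
}

# A full --buffer-size 2G at ~50 Mbit/s
playback_seconds 2048 50   # prints 343
# One --vfs-read-chunk-size 128M chunk at ~50 Mbit/s
playback_seconds 128 50    # prints 21
```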

Anyway, is there any optimization I can do?

rclone mount:

```ini
[Unit]
Description=RClone Mount Auto
AssertPathIsDirectory=/mnt/google/auto-gd
Wants=network-online.target
After=plexdrive.service

[Service]
Type=notify
ExecStart=/usr/bin/rclone mount "auto-gd:/" /mnt/google/auto-gd \
  --allow-other \
  --allow-non-empty \
  --acd-templink-threshold 0 \
  --buffer-size 2G \
  --checkers 32 \
  --config /root/.config/rclone/rclone.conf \
  --dir-cache-time 144h \
  --drive-chunk-size 32M \
  --fast-list \
  --log-level INFO \
  --log-file /home/scripts/logs/mount-auto.cron.log \
  --max-read-ahead 2G \
  --read-only \
  --tpslimit 10 \
  --vfs-cache-mode writes \
  --vfs-read-chunk-size 128M \
  --vfs-read-chunk-size-limit off \
  --stats 0
ExecStop=/usr/bin/fusermount -uz /mnt/google/auto-gd
Restart=on-failure
RestartSec=10

[Install]
WantedBy=multi-user.target
```

MergerFS:

```ini
[Unit]
Description = /home/user/downloads/auto MergerFS Mount
After=mount-auto.service
RequiresMountsFor=/mnt

[Mount]
What = /mnt/google/auto:/mnt/google/auto-gd
Where = /home/user/downloads/auto
Type = fuse.mergerfs
Options = sync_read,use_ino,allow_other,func.getattr=newest,category.action=all,category.create=ff,auto_cache

[Install]
WantedBy=multi-user.target
```

Next, I want to get rid of the test files in one directory, but I can't, since the rclone mount is read-only. And of course I can't identify them on the Google Drive website because the names are encrypted. What is the recommended approach here? I could perhaps live with not being able to delete a torrent via the ruTorrent web UI, but would I then need a second, read-write rclone mount?
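One option, sketched here on the assumption that `auto-gd:` is the crypt remote used by the mount above: rclone can list and delete on the crypt remote directly, without going through the read-only mount, and it shows the decrypted names. `rclone lsf`, `rclone purge` and the global `--dry-run` flag are real rclone features. The wrapper `purge_remote_dir` and the directory name `test-files` are hypothetical. The wrapper defaults to a dry run so you can check the path before anything is deleted.

```bash
#!/bin/bash
# Sketch: inspect and remove a directory on the crypt remote directly,
# bypassing the read-only mount. rclone lists the decrypted names.
# purge_remote_dir is a hypothetical helper; "auto-gd:" is the remote from the mount unit.
REMOTE="auto-gd:"

purge_remote_dir() {
  local dir=$1
  local mode=${2:-dry}   # "dry" (default) only reports, "real" actually deletes
  # List the (decrypted) contents first so you can verify the path
  rclone lsf -R "${REMOTE}${dir}" || return 1
  if [ "$mode" = "real" ]; then
    rclone purge "${REMOTE}${dir}"           # removes the directory and everything in it
  else
    rclone purge --dry-run "${REMOTE}${dir}" # shows what would be removed
  fi
}

# Usage (hypothetical directory name):
#   purge_remote_dir "test-files"        # dry run
#   purge_remote_dir "test-files" real   # actually delete
```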

That also makes me question the separate upload folder. Couldn't files placed in a read-write rclone mount be encrypted and uploaded automatically, so that I could manage the torrents completely from the web UI?

Somewhat related to managing torrents: yesterday I added 35 torrents in one go, and today I found that only a few have finished. Some have downloaded partially and others are stuck at 0%. Each torrent has several seeders, some over 200, yet they barely download. About four torrents currently show 0.2 to 0.8 KB/s, and others don't download at all despite dozens of seeders. I wonder whether the upload script has already moved their files and rtorrent can't cope with that. The upload script runs every 5 minutes and uploads files older than 5 minutes. It looks like rtorrent doesn't update the timestamp of unfinished torrents while they aren't downloading. But if that's the case, I doubt that running the script only once per night would work either. Some torrents may have a single home-PC seeder and take days to finish...

Edit: I just rechecked. The files that still show as downloading at very slow speed don't get their timestamps updated; they still carry yesterday's timestamp.
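The timestamp theory can be reproduced with a small local experiment. It assumes the upload script's only guard against unfinished torrents is file age: `find -mmin +5` in the pre-check and `--min-age 5m` in `rclone move`, both of which go by modification time. A file that stopped receiving data yesterday has an old mtime, so it is selected exactly like a finished file. The directory and file names are made up for the demo, which uses GNU `touch` and `find` as shipped with Debian.

```bash
#!/bin/bash
# Demo: an age-based filter cannot tell a finished file from a stalled download.
demo=$(mktemp -d)
touch -d '1 day ago' "$demo/finished.mkv"         # completed yesterday
touch -d '1 day ago' "$demo/stalled-partial.mkv"  # stuck at ~0.5 KB/s, mtime not updated
touch "$demo/active-partial.mkv"                  # still receiving data right now

# Same selection rule as the upload script: files older than 5 minutes
selected=$(find "$demo" -type f -mmin +5 -printf '%f\n' | sort)
echo "$selected"
# Lists finished.mkv and stalled-partial.mkv; only the actively
# written file is skipped.

rm -rf "$demo"
```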

Upload script:

```bash
#!/bin/bash
# RCLONE UPLOAD CRON TAB SCRIPT
# Add the line below (without the leading #) to /etc/crontab; the "root"
# user field is only valid there, not in a crontab edited with "crontab -e".
# * * * * * root /home/scripts/upload-auto.cron >/dev/null 2>&1

# Exit if another instance of this script is still running
if pidof -o %PPID -x "upload-auto.cron"; then
  exit 1
fi

LOGFILE="/home/scripts/logs/upload-auto.cron.log"
FROM="/mnt/google/auto/"
TO="auto-gd:/"

# CHECK FOR FILES IN FROM FOLDER THAT ARE OLDER THAN 5 MINUTES
if [ -n "$(find "$FROM" -type f -mmin +5 -print -quit)" ]; then
  echo "$(date "+%d.%m.%Y %T") RCLONE UPLOAD STARTED" | tee -a "$LOGFILE"

  # MOVE FILES OLDER THAN 5 MINUTES
  rclone move "$FROM" "$TO" -c --no-traverse \
    --transfers=300 --checkers=300 \
    --delete-after --delete-empty-src-dirs \
    --min-age 5m --log-file="$LOGFILE"

  echo "$(date "+%d.%m.%Y %T") RCLONE UPLOAD ENDED" | tee -a "$LOGFILE"
fi

exit 0
```

Does anyone have suggestions, tips, or similar use cases? I'd be glad to finally solve all of this.