Actually, yes, come to think of it, I don't think it affects the download transfers either.

My bad.

So @random404, that's why I use mergerfs to get around some of rclone's current limitations on writes, and I batch my uploads at night.
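For readers who haven't seen this kind of setup, the nightly batch can be as simple as a scheduled `rclone move` from the local branch to the remote. The sketch below is a hypothetical crontab entry, not the poster's actual configuration. `/local/media` and `GD:` are placeholders, and the 03:00 schedule, the `--min-age` value and the log path are arbitrary choices.

```
# Hypothetical crontab entry: every night at 03:00, move files last modified
# more than 15 minutes ago from the local branch up to the remote, then
# remove any source directories left empty.
0 3 * * * rclone move /local/media GD: --min-age 15m --delete-empty-src-dirs -v --log-file /tmp/nightly-upload.log
```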

If the feature I linked gets implemented, I'd go back to using just rclone for writes. The delay you are seeing for #2 doesn't work for me and my workflow.

I'm not talking about the cache backend. I am using VFS cache mode writes.

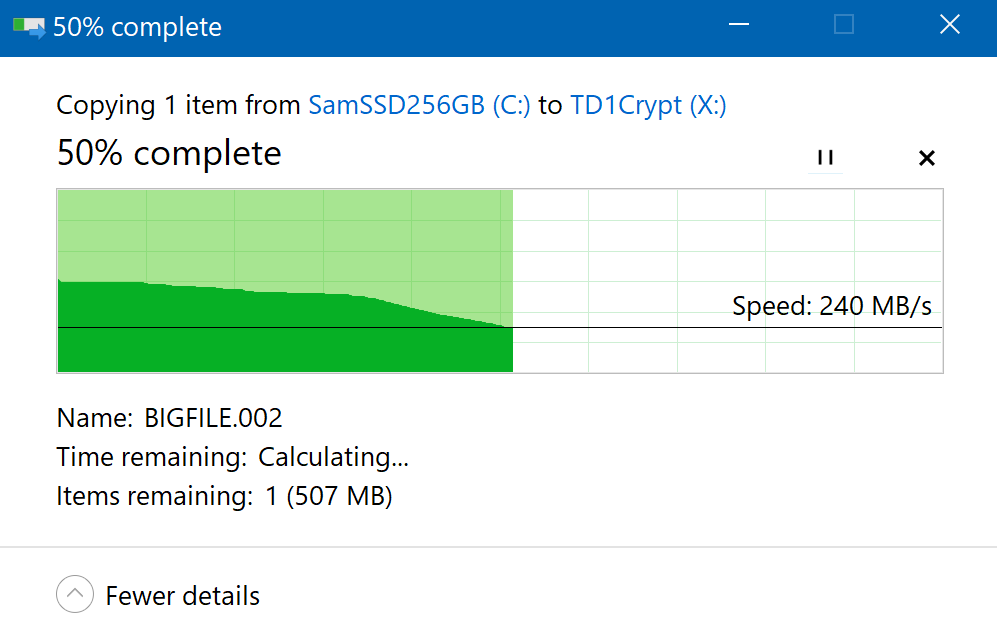

This isn't quite 500MB/sec, but to reach that you'd need to be both reading from and writing to SSDs, preferably different ones. I didn't bother rewiring my whole setup just to show this. It pretty clearly illustrates that rclone has no limitation on ingesting files into the cache quickly. This speed is fully in line with my expectations given where I am copying the files to and from (in this case the same Samsung 840 Pro is both the source and the cache destination). This is a much older and slower SSD than the one you have, mind you.

However, this only works for up to 4 files. Beyond that, the current files have to finish uploading before the next one is moved to the cache. From the OS's point of view, the transfer of all the files therefore appears to take a long time, because at that point it is effectively limited by upload speed. Fixing this needs async handling (see the linked issue) or a mergerfs setup like the one Animosity uses.

If you can't get your cache to ingest a single large file quickly while the upload queue is empty, then you are being limited either by the drive you write to locally (the VFS cache) or by the drive you read the files from.
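One rough way to check the first of those two is to measure sequential write speed on the drive that holds the VFS cache (the directory set by rclone's `--cache-dir`). The sketch assumes Linux with GNU coreutils (`dd ... conv=fdatasync status=none`, `date +%s%N`). `measure_write_mibps` is a hypothetical helper name. A single `dd` run is only a rough indicator, not a proper benchmark.

```shell
#!/bin/sh
# Rough sequential-write test for the drive holding the VFS cache.
# Assumes Linux + GNU coreutils; measure_write_mibps is a hypothetical helper.

# Write SIZE_MIB MiB of zeros into DIR, flush to disk, print MiB/s (integer).
measure_write_mibps() {
  local dir="$1" size_mib="${2:-1024}"
  local f="$dir/.rclone-speedtest.$$"
  local start end elapsed_ns

  start=$(date +%s%N)
  # conv=fdatasync makes dd wait until the data has actually reached the disk,
  # so the page cache doesn't inflate the result. Note: zeros can compress on
  # filesystems like btrfs or ZFS, which would make the number optimistic.
  dd if=/dev/zero of="$f" bs=1M count="$size_mib" conv=fdatasync status=none
  end=$(date +%s%N)
  rm -f "$f"

  elapsed_ns=$(( end - start ))
  [ "$elapsed_ns" -gt 0 ] || elapsed_ns=1   # guard against division by zero
  echo $(( size_mib * 1000000000 / elapsed_ns ))
}
```

Usage: `measure_write_mibps /path/to/vfs-cache 2048` prints a single integer. Compare it with the speed you see when copying into the mount.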

You can't write 240MB/s to the vfs-cache-mode writes backend with a large file because it has to upload it.

If you want to make a new post and share your logs/config, please do so. With writes mode it has to upload the file when the write is done.

It writes into the VFS write cache at 240MB/sec (or however fast your local reads and writes allow). In the example here (a single file), as far as the OS is concerned, the transfer closes and finishes as soon as the local copy into the cache is done.

Of course, from there it's going to upload at a much slower speed, but rclone does that in the background.

I don't see what the confusion is here...

And yes, this will not happen for many files transferred in bulk. I think we all already agree, as per the discussions above, that this needs an update for async handling, or a workaround like mergerfs, to be properly fixed.

Let's deal with data.

```
[felix@gemini ~]$ ls -al file.txt
-rw-r--r-- 1 felix felix 104857600 Aug 18 14:02 file.txt
[felix@gemini ~]$ du -ms file.txt
100 file.txt
[felix@gemini ~]$ time cp file.txt file1.txt
real 0m0.044s
user 0m0.000s
sys 0m0.040s
[felix@gemini ~]$ time cp file.txt /Test/file.txt
real 0m6.669s
user 0m0.004s
sys 0m0.055s
[felix@gemini ~]$ ps -ef | grep Test
felix 31574 31221 5 14:03 pts/1 00:00:01 rclone mount GD: /Test --vfs-cache-mode writes -vv --log-file /tmp/test.log
felix 31637 30509 0 14:03 pts/0 00:00:00 grep Test
```

You can see that copying the file to the cache takes a lot longer.

The reason is that it uploads after it writes locally and doesn't give the prompt back to the OS until the upload has completed.

Debug log here:

https://pastebin.com/7RMijYpY

That's why the OP is seeing a difference in write speeds: it has to upload.

If you want to share a log that shows something else, please do.

I trust that your results are accurate, but I just can't replicate them.

Apparently it does return to the OS for me, long before the background upload completes.

Note that I am on Windows, so it's not impossible that this difference comes down to the OSes or to the WinFsp / FUSE implementations.

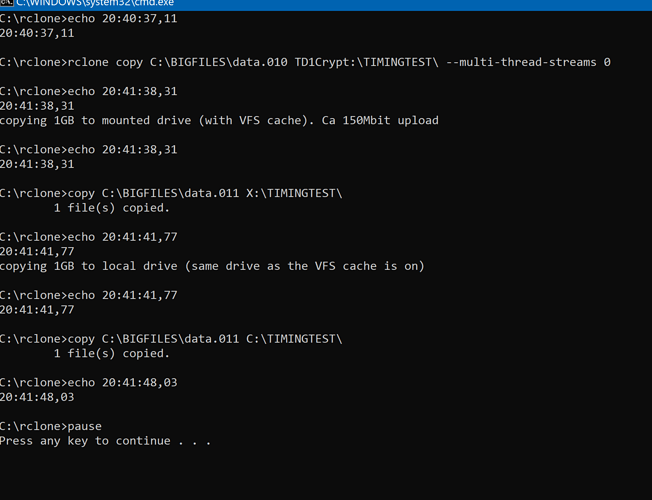

I threw together a quick batch script to demonstrate (excuse my primitive scripting):

TLDR:

- Directly to remote (no cache) = about a minute
- To mount via VFS cache = about 4 seconds
- To local SSD (same drive as cache) = about 6 seconds
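If you want to repeat that comparison on Linux, here is a rough shell counterpart of the batch test. It only measures how long `cp` takes to return control to the shell, which is the number being debated here. It does not track the background upload. The sketch assumes GNU coreutils (`date +%s%N`). `time_cp_ms` and `compare_destinations` are hypothetical helper names, and the directories you pass in are placeholders for your own uncached mount, VFS-cached mount and local SSD.

```shell
#!/bin/sh
# Rough Linux counterpart of the batch test: time how long `cp` takes to
# *return* when the same file is copied to several destination directories.
# Assumes GNU coreutils (date +%s%N); helper names are hypothetical.

# Print the milliseconds `cp SRC DST` takes to return control to the shell.
time_cp_ms() {
  local start end
  start=$(date +%s%N)
  cp "$1" "$2"
  end=$(date +%s%N)
  echo $(( (end - start) / 1000000 ))
}

# Copy SRC into each DIR in turn and print "DIR<TAB>SECONDS" (3 decimals).
compare_destinations() {
  local src="$1" dir ms
  shift
  for dir in "$@"; do
    ms=$(time_cp_ms "$src" "$dir/")
    printf '%s\t%d.%03d\n' "$dir" $(( ms / 1000 )) $(( ms % 1000 ))
  done
}
```

Usage: `compare_destinations ~/file.txt /mnt/nocache /mnt/vfscache /mnt/ssd` prints one line per destination. The seconds are wall-clock time, so they are comparable with the `real` figures from `time cp`.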

I consider the last two results equivalent, especially since during the third test the SSD was also busy being read for the background upload from the cache.

I was manually watching network activity while the tests ran. The first test returns only after the upload is done. Right after the second test returns, its upload starts, and the third test finishes long before the background upload from test 2 completes.

This exactly mirrors what I see if I just move a single big file over in Windows Explorer. It transfers for a few seconds, then completes and closes. Only large batches keep the copy window open (for the reasons described earlier).

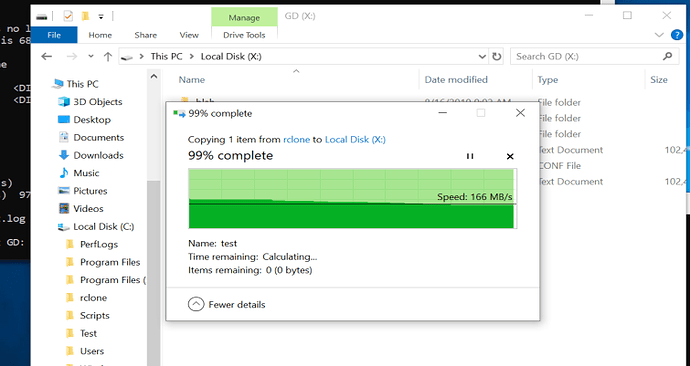

It's the same on Windows: as you can see, it stops at 99% and sits there while it uploads.

The "speed" shown is 166MB/s, which is accurate, but the copy stalls at 99% until the upload finishes and then reports a 'bogus' speed for the transfer. You don't get the command prompt or Explorer back until the upload is complete.

Windows rclone debug log:

I see what you are saying, but as I said, this stalling doesn't happen for me unless 4 or more files are already uploading.

A single file copy returns as soon as it hits the cache. It doesn't matter whether it's via Explorer (see the YouTube video) or via the command line (as demonstrated in my previous post).

I just can't replicate what you are seeing on your end.

For the sake of completeness, I am currently on version:

```
C:\rclone>rclone version
rclone v1.48.0-103-g85557827-fix-3330-vfs-beta
- os/arch: windows/amd64
- go version: go1.12.3
```

But I am not aware of any change that would be relevant to this.

I don't think I can get much out of the debug log unless I have some idea what I'm looking for, but if you want to dig around, I'd be willing to produce a debug log of the same scenario as in the video if you request it. I expect you have much more experience deciphering the logs than I do.

You've shared the behavior of a cache remote with cache-tmp-uploads, not the vfs-cache-mode writes backend.

No. Let me be absolutely clear about this:

I am not using any cache backend at all.

I am not so confused about configuration that I have accidentally run a cache-backend on this.

I have a Gdrive remote + a crypt remote pointing to the Gdrive.

Nothing else is involved here, except --vfs-cache-mode writes, obviously.

```
[TD1]
type = drive
client_id = [REDACTED]
client_secret = [REDACTED]
scope = drive
token = [REDACTED]
team_drive = [REDACTED]
upload_cutoff = 256M
chunk_size = 256M
server_side_across_configs = true

[TD1Crypt]
type = crypt
remote = TD1:/Crypt1
filename_encryption = standard
directory_name_encryption = true
password = [REDACTED]
password2 = [REDACTED]
```

mount:

```
rclone mount TD1Crypt: X:
```

Please reproduce the issue and share a debug log.
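For anyone following along, you can capture a debug log of a mount like this with the same flags used in the Linux test earlier (`--vfs-cache-mode writes`, `-vv` for DEBUG-level output, `--log-file`). This is an illustration, not necessarily the exact command used in this thread, and the log path is a placeholder:

```
rclone mount TD1Crypt: X: --vfs-cache-mode writes -vv --log-file C:\rclone\mount-debug.log
```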

Give me 2 min...

Replicated the scenario in the video exactly.

Here is a full debug log. I let the log run until upload to remote finished just to be safe.

https://pastebin.com/2ggKpe9w

But this isn't a problem for me by any means, so for my sake, don't spend all your time troubleshooting something that works just fine. I'm only providing this in case you want to dig into it and figure out why we are getting different results.

You are using a specific beta branch version which has some vfs changes.

You've also got a whole bunch of flags in there that don't work in a mount:

checkers/transfers/fast-list

Running your exact mount and rclone version, it doesn't come back for me on either Windows or Linux; I tried both.

Same as OP.

The code isn't written to release until the upload is complete.

I'm aware of the non-functioning flags; I just haven't bothered to remove them. They're remnants from when I wasn't sure whether they worked via mount or not. Thanks for the comment, though.

It's possible it's a version thing, but I know what the VFS changes in that version are, and I don't see how they would affect this specifically. I can't be arsed to go back to 1.48 stable just to test a non-problem, not unless you are super keen on finding out more about this.

I can't explain it either. I can only assure you the info I have given here is accurate and that there isn't any confusion in terms of the configuration.

I think we've hijacked OP's thread enough. Unfortunately the information just isn't quite right, so let's be sure to stay on topic.