I have a GlusterFS storage cluster mounted at /mnt/glusterfs. I wanted to use rclone mount with the VFS cache so I can cache the cluster's contents locally and save bandwidth on the cluster.

I noticed a huge increase in the time it takes to open files through the mount.

My flags:

--allow-other \

--vfs-cache-max-age=168h \

--vfs-cache-max-size=3T \

--vfs-cache-mode=full \

--cache-dir=/mnt/cache \

--vfs-read-chunk-size=256K \

--vfs-read-chunk-size-limit=8M \

--buffer-size=256K \

--no-modtime \

--no-checksum \

--dir-cache-time=72h \

--timeout=10m \

--umask=002 \

--log-level=DEBUG \

--log-file=/opt/rclone.log \

--async-read=false \

--rc \

--rc-addr=localhost:5572 \

I also tested with a starting chunk size of 1 MB and a buffer size of 1 MB, and found no noticeable difference in the time to open files.

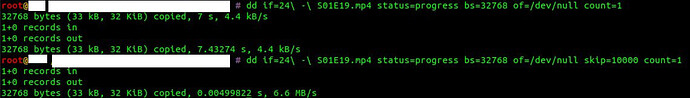

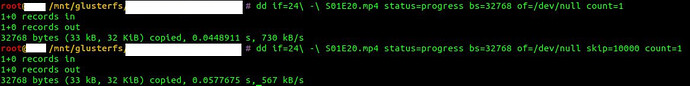

Compared to reading the same amount of data directly from the cluster:

Any ideas on how to fix this? Could there be a setting that creates all the sparse files in advance, and maybe never clears them from the cache?

My rclone remote is just:

[glusterfs]

type = local

ncw, March 8, 2021, 11:25am

I can't see anything obviously wrong with your setup.

Can you attach a log please? Preferably from just before the dd starts to when it ends.
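One possible way to capture just that window, as a rough sketch: note the time before and after the dd run, then keep only the log lines whose timestamp falls in between. This assumes the log lines start with a `YYYY/MM/DD HH:MM:SS` prefix and reuses the `/opt/rclone.log` path from the flags in the first post; the `slice_log` name, the dd source path, and the output file name are made up for illustration.

```shell
#!/usr/bin/env bash
# slice_log START END LOGFILE
# Print the lines of LOGFILE whose "YYYY/MM/DD HH:MM:SS" prefix lies in
# [START, END]. Timestamps in this fixed-width format compare correctly
# as plain strings, so no date parsing is needed.
slice_log() {
    local start="$1" end="$2" logfile="$3"
    awk -v s="$start" -v e="$end" '
        { ts = substr($0, 1, 19) }   # first 19 characters hold the timestamp
        ts >= s && ts <= e           # default action: print the line
    ' "$logfile"
}

# Hypothetical usage around a dd test:
#   start=$(date '+%Y/%m/%d %H:%M:%S')
#   dd if=/path/on/mount/file of=/dev/null bs=32K count=1
#   end=$(date '+%Y/%m/%d %H:%M:%S')
#   slice_log "$start" "$end" /opt/rclone.log > rclone-dd.log
```

Continuation lines that do not begin with a timestamp are dropped by this filter, so it is worth checking the result against the full log before sharing it.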

I don't think the creation of the files is the problem here.

You could try pointing your mount to a local directory and see what the performance is like there.
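To make that comparison repeatable, something like the following could time the first read of the same file through each path. It is only a sketch: it assumes GNU `date` (for `%N`) and GNU `dd` (for `status=none`), and the `time_first_read` name and the directory names in the usage comment are hypothetical.

```shell
#!/usr/bin/env bash
# time_first_read FILE [BYTES]
# Print the number of milliseconds taken to open FILE and read its first
# BYTES (default 32768). Returns non-zero if dd fails.
time_first_read() {
    local file="$1" bytes="${2:-32768}"
    local t0 t1
    t0=$(date +%s%N)    # nanoseconds since the epoch (GNU date)
    dd if="$file" of=/dev/null bs="$bytes" count=1 status=none || return 1
    t1=$(date +%s%N)
    echo $(( (t1 - t0) / 1000000 ))
}

# Hypothetical usage: the same relative file under each root.
#   for root in /mnt/glusterfs /mnt/rclone-gluster /mnt/rclone-localdir; do
#       printf '%s: %s ms\n' "$root" "$(time_first_read "$root/some/file")"
#   done
```

Repeated reads of the same file may be served from the rclone VFS cache or the kernel page cache, so a cold-cache comparison needs a file that has not been read yet on each run.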

I have found the rclone mount unusable regardless of the chunk size settings, even with the defaults.

As soon as I start using the mount heavily, nothing works, and I don't see anything obviously wrong in the logs. I don't know why.

You can see the speeds directly from the cluster are much higher than the rclone mount with no active use...

I'm going to PM you my logs

    }, {
        Name: "case_insensitive",
        Help: `Force the filesystem to report itself as case insensitive

    Normally the local backend declares itself as case insensitive on
    Windows/macOS and case sensitive for everything else. Use this flag
    to override the default choice.`,
        Default:  false,
        Advanced: true,
    }, {
        Name: "no_preallocate",
        Help: `Disable preallocation of disk space for transferred files

    Preallocation of disk space helps prevent filesystem fragmentation.
    However, some virtual filesystem layers (such as Google Drive File
    Stream) may incorrectly set the actual file size equal to the
    preallocated space, causing checksum and file size checks to fail.
    Use this flag to disable preallocation.`,
        Default:  false,
        Advanced: true,
    }, {

How can I change this setting to true on my mount?

That has not been released yet, so you must run the latest beta; then you can add the command line option --local-no-preallocate.
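As an illustration, the flags from the first post with the new option appended might look like the sketch below. The thread never shows the full mount command, so the remote path `glusterfs:/mnt/glusterfs` and the mount point `/mnt/rclone` are assumptions; the script only prints the command so it can be reviewed before running it.

```shell
#!/usr/bin/env bash
# Mount arguments from the first post plus --local-no-preallocate.
# ASSUMPTIONS: the remote path "glusterfs:/mnt/glusterfs" and the mount
# point "/mnt/rclone" are hypothetical; adjust both to the real setup.
mount_args=(
    mount glusterfs:/mnt/glusterfs /mnt/rclone
    --allow-other
    --vfs-cache-max-age=168h
    --vfs-cache-max-size=3T
    --vfs-cache-mode=full
    --cache-dir=/mnt/cache
    --vfs-read-chunk-size=256K
    --vfs-read-chunk-size-limit=8M
    --buffer-size=256K
    --no-modtime
    --no-checksum
    --dir-cache-time=72h
    --timeout=10m
    --umask=002
    --log-level=DEBUG
    --log-file=/opt/rclone.log
    --async-read=false
    --rc
    --rc-addr=localhost:5572
    --local-no-preallocate    # the new local backend option
)

# Print the command for review instead of running it.
printf '%q ' rclone "${mount_args[@]}"
echo

# To actually mount (with a beta build that includes the option):
#   rclone "${mount_args[@]}"
```

Keeping the flags in an array avoids the trailing-backslash line continuations, so a flag can be added or commented out without breaking the command.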

Thanks!!

But it didn't work... It went from 17 seconds to 14 seconds to read the first 32 KB of a file from a cold cache...

Still unusable for me.

Reading directly from the glusterfs mount takes less than 1 second to do the same.
