Hello and welcome to the forum.

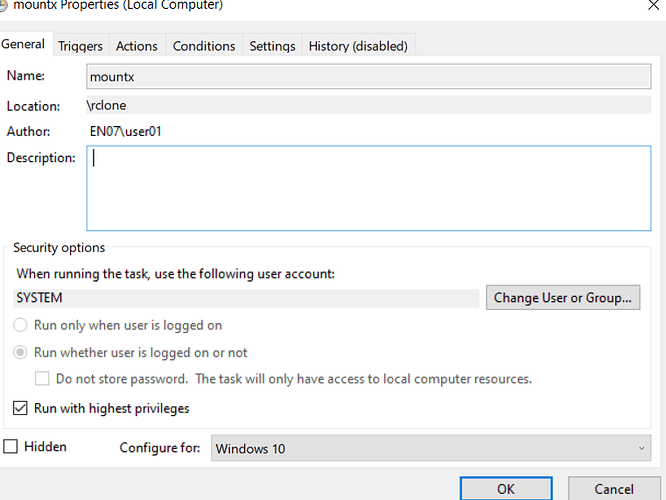

If you run the rclone mount as the SYSTEM account, then all processes should see the mount.

You can use Task Scheduler to do that.
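Here is a minimal sketch of the Task Scheduler approach. It uses the built-in `schtasks` tool to register a task that starts the mount at boot under the SYSTEM account. The task name `rclone-mount` and the paths `C:\rclone\rclone.exe` and `C:\rclone\rclone.conf` are hypothetical, so adjust them to your setup. I pass `--config` explicitly on the assumption that a task running as SYSTEM will not find the rclone config stored in your own user profile.

```cmd
REM Hypothetical paths and task name: adjust rclone.exe, the config file and the mountpoint.
REM /sc onstart runs the task at boot; /ru SYSTEM runs it as the SYSTEM account.
schtasks /create /tn "rclone-mount" /sc onstart /ru SYSTEM /tr "C:\rclone\rclone.exe mount remote: b:\mounts\remote --config C:\rclone\rclone.conf"
```

Run it from an elevated command prompt. To start the task right away without rebooting, use `schtasks /run /tn "rclone-mount"`.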

Another option that I use is to mount to a folder instead of a drive letter:

`rclone mount remote: b:\mounts\remote`

`--allow-other` does nothing on Windows.

This post from @ookla-ariel-ride might also be helpful.