What is the problem you are having with rclone?

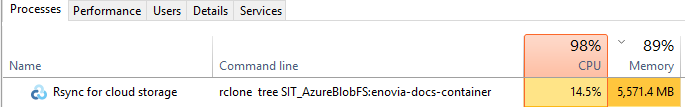

`rclone lsd` and `rclone tree` are slow on Azure Blob when the container holds hundreds of thousands of small files (100 KB to 3 MB each). Both commands also use a very large amount of memory.

What is your rclone version (output from rclone version)

1.53.0

Which OS you are using and how many bits (eg Windows 7, 64 bit)

Windows Server

Which cloud storage system are you using? (eg Google Drive)

Azure Blob Storage

The command you were trying to run (eg rclone copy /tmp remote:tmp)

rclone tree SIT_AzureBlobFS:enovia-docs-container

The rclone config contents with secrets removed.

[SIT_AzureBlobFS]

type = azureblob

account = XXXXXXXXX

key = XXXXXXX

A log from the command with the -vv flag

2020/09/17 06:52:52 DEBUG : rclone: Version "v1.53.0" starting with parameters ["rclone" "tree" "SIT_AzureBlobFS:enovia-docs-container" "-vv"]

2020/09/17 06:52:52 DEBUG : Using config file from "C:\\Users\\Simplementqa.im\\.config\\rclone\\rclone.conf"

2020/09/17 06:52:52 DEBUG : Creating backend with remote "SIT_AzureBlobFS:enovia-docs-container"