How does this work? Is it the Team drive feature which allows multiple users to see the same files?

Thank you so much. I had been looking for something like this. I moved over 60TB of data that I had been collecting in a second account for months into the main account. A large transfer like that still reports “transfer failed”, but everything seems OK. The quota usage shown on the Google Drive website only increases by around 10TB per day, though.

In my tests, the upload transfer limit was raised to 800GB/24h recently.

Some say the download transfer limit was reduced to 5TB/24h at the same time. I have not tested that.

Just came across this page that explicitly states the 750GB/day upload limit:

https://support.google.com/a/answer/172541?hl=en

Thank you, ninjit - yes, it took them a while to mention the old limit in the docs … I wonder how long it will be before that page is updated.

What do your own tests say?

I’m seeing about 750GB/day still.

I’m leaving the sync op running: it gets to ~750GB, then produces a stream of errors until the reset, then jumps to 1.50TB the next time I check… more 403 messages… 24 hrs later it’s stuck at 2.26TB, and so on.

Is there a more graceful way to deal with this limit, other than letting rclone keep trying repeatedly until the 24 hr reset kicks in?

Something like an option to pause for X hours when the first 403 error is encountered?

I’d rather not kill and restart the operation later after the reset period, as I think rclone then has to repeat queries to check for existing files each time.
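To make the "pause for X hours" idea concrete, here is a minimal Python sketch of a wrapper that sleeps and retries when a quota error occurs. It is not an rclone feature; `QuotaExceeded`, `run_with_pause`, and the `step` callable are hypothetical names used only for illustration.

```python
import time


class QuotaExceeded(Exception):
    """Hypothetical marker for a 403 'upload limit reached' error."""


def run_with_pause(step, pause_seconds, max_pauses, sleep=time.sleep):
    """Call step(); on QuotaExceeded, sleep once and retry instead of hammering the API.

    step          -- callable that does the transfer work and returns a result
    pause_seconds -- how long to wait after a quota error (e.g. several hours)
    max_pauses    -- give up and re-raise after this many pauses
    sleep         -- injectable so the logic can be tested without waiting
    """
    pauses = 0
    while True:
        try:
            return step()
        except QuotaExceeded:
            if pauses >= max_pauses:
                raise
            pauses += 1
            sleep(pause_seconds)
```

The point of the design is that the process stays alive across the reset, so whatever state it has already built up does not need to be rebuilt by a fresh start.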

Set your bandwidth limit to something low so you can only push 700GB a day or so.
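As a rough guide to what "something low" means, this small Python sketch converts a daily budget into a sustained rate. It assumes decimal gigabytes for the budget and reports MiB/s; the function name is just for illustration.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def max_rate_mib_per_s(daily_budget_gb: float) -> float:
    """Sustained rate in MiB/s that moves daily_budget_gb (decimal GB) in 24 hours."""
    bytes_per_day = daily_budget_gb * 1000**3
    return bytes_per_day / SECONDS_PER_DAY / 1024**2


rate = max_rate_mib_per_s(700)
print(f"{rate:.2f} MiB/s")  # a little under 8 MiB/s
```

With rclone that would mean something like `--bwlimit 7M`, assuming the `M` suffix is interpreted as MiB/s; check the docs for the version you are running.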

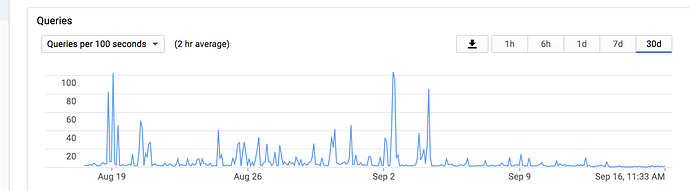

What’s also interesting is watching the error rate in the API console.

Seems to give you an idea of how quickly the limit is reached.

e.g. for me it appears to show a consistent 4hr window of actual transfers each day, meaning my upload bandwidth is something like 50MB/s, vs rclone stats showing ~8MB/s effective bw for the 24hr period
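Those two figures are consistent with each other. A quick check in Python, assuming roughly 750GB (decimal) moved per day and a 4 hour active window:

```python
def avg_rate_mb_per_s(total_gb: float, hours: float) -> float:
    """Average rate in MB/s when total_gb (decimal GB) is moved in the given hours."""
    return total_gb * 1000 / (hours * 3600)


burst = avg_rate_mb_per_s(750, 4)    # rate while transfers are actually running
daily = avg_rate_mb_per_s(750, 24)   # same data averaged over the whole day
print(f"burst ~{burst:.1f} MB/s, 24h average ~{daily:.1f} MB/s")
```

So a link that can push about 50MB/s hits the cap in roughly 4 hours, and the remaining 20 hours of errors drag the reported average down to the 8MB/s range.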

I’d prefer to let the machine upload as fast as it can then wait 20 hrs, vs doing it all day long at a trickle.

e.g. cron job at night when network isn’t as busy, which gets killed after 4hrs of running

But that still leaves the issue of rechecking existing files repeatedly every night

(or am I overthinking how much work that is for rclone?)

You could just let it run for weeks if you limit the bandwidth rather than maintaining it each night.

Doing that as well. I got an extra G Suite account for backup, which I’m filling at the moment. I’m using the free credits of my new account to let a VPS at Google upload around 730GB daily. I’m at 17TB now (out of 40TB). As it’s a backup I’m not in a hurry, and I don’t want to use hacks like multiple service accounts to circumvent the limits of Google Drive.

FWIW, I think sometimes Google gets confused. I use an older product (“grive”, 'cos it’s bi-directional) to sync my local directory to Google Drive once an hour. There’s only 8GB in the whole tree, and 95% of the time there are no changes to sync. And yet I still sometimes get API rate limit exceeded errors.

“Rate limit exceeded” is the easiest message to interpret: you’ve made too many requests per second. You’d need to turn down your transactions per second based on the quotas you have.

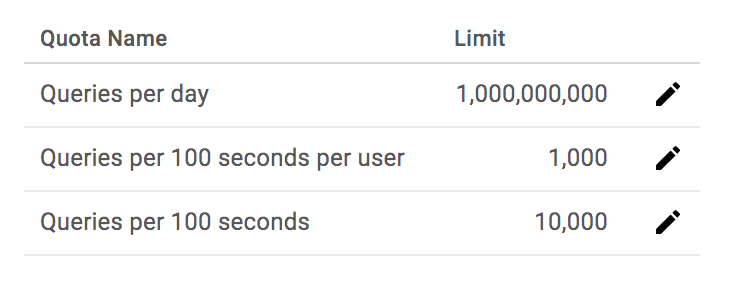

Keep it under these and you don’t get rate limited:

The 1000 per 100 seconds per user is the key one.
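1000 requests per 100 seconds works out to 10 requests per second on average. Here is a minimal Python sketch of a pacer that spaces calls evenly to stay under that; the `Pacer` class is hypothetical and only illustrates the idea, it is not how any particular client implements it.

```python
import time


class Pacer:
    """Space calls so that at most max_requests happen per per_seconds, evenly."""

    def __init__(self, max_requests, per_seconds,
                 clock=time.monotonic, sleep=time.sleep):
        self.interval = per_seconds / max_requests  # 100 / 1000 = 0.1 s
        self.clock = clock
        self.sleep = sleep
        self.next_allowed = None  # earliest time the next call may start

    def wait(self):
        """Block until the next request is allowed, then reserve the slot."""
        now = self.clock()
        if self.next_allowed is not None and now < self.next_allowed:
            self.sleep(self.next_allowed - now)
            now = self.next_allowed
        self.next_allowed = now + self.interval
```

Call `pacer.wait()` before each API request. With rclone the equivalent knob should be `--tpslimit`, e.g. `--tpslimit 10` or a bit lower to leave headroom.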

My last 30 days are very minimal: