I notice no one here has discussed the main issue currently stopping me. I'm trying to use NetDrive to download my encrypted ACD data locally, and I get errors that the file path is too long. I am using Windows 10, removed the path length limitation, and reformatted the drive to exFAT. This has not solved my issue. Any ideas other than the Google server?

Not sure about NetDrive, so I can't help you there. Why don't you just use odrive? It works on Windows. The move is the same however you do it: download to local, then upload to the cloud. So far the fastest option has been the Google server. If you are doing this on your home server, depending on how fast your connection is, it could take you months to download, so you may want to buy an odrive subscription for $100/yr.

I couldn’t get rclone to work. I think it doesn’t like network drives, or the way ExpanDrive mounts them. I would just mount both ACD and Google Drive within ExpanDrive and copy with Windows copy. There are other tools to copy, but this seems to be working OK at 60 MB/s.

I cannot create a disk over 10 TB, although I requested +15000 GB and Google confirmed it. I repeated the request and Google confirmed it again.

The confirmation mail contains a link, “To verify the quota change, please navigate to”… That page is the quotas page. I can see the quotas there, but I cannot change anything; I only see the % used for each service.

What am I overlooking?

Update: on the quotas page I found this:

Total reserved persistent disk space (GB), europe-west1: 0 % (0 of 10.000)

I requested a disk upgrade again.

The solution: in the request form, enter 20000 GB, not 20.000 GB! The form apparently does not read "." as a thousands separator. Now I have a 20 TB quota.

I have a sync question: I want to sync only the folder “Movies” from “ACD:/crypt/Movies”, not all folders from ACD. Can someone tell me the command to sync the “Movies” folder, please?

And thank you for the support, info and instructions here.

If you use odrive, first let it sync the whole folder structure and then start the sync itself. I’ve used this command to sync my media folder:

exec 6>&1;num_procs=10;output="go"; while [ "$output" ]; do output=$(find "$HOME/odrive-agent-mount/Amazon Cloud Drive/Media/" -name "*.cloud*" -print0 | xargs -0 -n 1 -P $num_procs "$HOME/.odrive-agent/bin/odrive.py" sync | tee /dev/fd/6); done

If you change "$HOME/odrive-agent-mount/Amazon Cloud Drive/Media/" to "$HOME/odrive-agent-mount/Amazon Cloud Drive/Media/Movies", for example, it will sync only the Media/Movies folder from Amazon. The other folders will still have the folder structure, but their files are not downloaded.

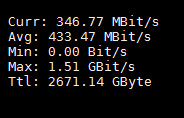

Migrated ACD to GD with a personal client ID and rclone on a Google Compute Engine instance.

Sustained rate of 430 MB/s, ridiculously fast: one night and it was done (12 TB). No traffic costs for ingress or egress to GD, and no bandwidth limitations from ACD.

My ACD download is very slow: only 4 TB down in 1.5 days.

Avg. only 112.79 Mbit/s.

Region: EU.

How did you get a personal ID? I have done 100 GB in 2 days.

Making your own client_id

When you use rclone with Google Drive in its default configuration you are using rclone’s client_id. This is shared between all rclone users. Google sets a global rate limit on the number of queries per second that each client_id can make. rclone already has a high quota and I will continue to make sure it is high enough by contacting Google.

However, you might find you get better performance making your own client_id if you are a heavy user. Or you may not, depending on exactly how Google have been raising rclone’s rate limit.

Here is how to create your own Google Drive client ID for rclone:

Log into the Google API Console with your Google account. It doesn’t matter what Google account you use (it need not be the same account as the Google Drive you want to access).

Select a project or create a new project.

Under Overview, Google APIs, Google Apps APIs, click “Drive API”, then “Enable”.

Click “Credentials” in the left-side panel (not “Go to credentials”, which opens the wizard), then “Create credentials”, then “OAuth client ID”. It will prompt you to set the OAuth consent screen product name, if you haven’t set one already.

Choose an application type of “other”, and click “Create” (the default name is fine).

It will show you a client ID and client secret. Use these values in rclone config to add a new remote or edit an existing remote.
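For reference, this is roughly where the two values end up. The sketch below prints an rclone.conf section for a Google Drive remote with the drive backend's `client_id` and `client_secret` keys filled in. The function name `drive_remote_section`, the remote name and `scope = drive` (full access) are assumptions for illustration. The printed section contains no OAuth token, so rclone still has to authorize the remote (for example by editing it in `rclone config`).

```bash
#!/usr/bin/env bash
# Hypothetical helper: print an rclone.conf section for a Google Drive remote
# that uses your own client ID and secret from the API Console.
# Assumptions: remote name chosen by you, full-access scope ("drive").
# No OAuth token is written; rclone must still authorize the remote.
drive_remote_section() {
  local name=$1 client_id=$2 client_secret=$3
  # Refuse to print a half-filled section.
  if [ -z "$name" ] || [ -z "$client_id" ] || [ -z "$client_secret" ]; then
    echo "usage: drive_remote_section NAME CLIENT_ID CLIENT_SECRET" >&2
    return 1
  fi
  printf '[%s]\n' "$name"
  printf 'type = drive\n'
  printf 'client_id = %s\n' "$client_id"
  printf 'client_secret = %s\n' "$client_secret"
  printf 'scope = drive\n'
}
```

Entering the same two values at the client_id/client_secret prompts of `rclone config` gives the same result; the function only shows which keys they map to.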

You can’t get a new personal ACD client ID at the moment; I had already applied a while back.

Thank you for your answer! Yes, I use odrive and it is a fresh installation. I haven't synced anything yet.

Is this the command for the default sync that generates the folder structure?

python "$HOME/.odrive-agent/bin/odrive.py" sync "$HOME/odrive-agent-mount/Amazon Cloud Drive.cloudf"

I am following the odrive guide “Agent/CLI (Linux, MacOS, Win)” and I am at step 5, “Sync an odrive folder”.

For the folder structure you should use Philip's command:

find ~/odrive-agent-mount/ -type f -name "*.cloudf" -exec python "$HOME/.odrive-agent/bin/odrive.py" sync "{}" \;

Execute this command a couple of times until it stops doing anything (this means the folder structure has been created). The command only explores one folder depth at a time, which is why you need to run it several times. After that, use my previous command to sync only the Media/Movies folder if needed.
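Running the find command by hand until it stops doing anything can be automated. The sketch below wraps both steps in one bash function: in `folders` mode it repeats the `*.cloudf` expansion until no folder placeholders remain, and in `all` mode it also downloads `*.cloud` file placeholders, in parallel like the loop earlier in the thread. `sync_placeholders`, `ODRIVE_PY`, the default parallelism of 10 and the 50-pass cap are hypothetical choices of this sketch, not odrive features. It calls `odrive.py` directly (as that loop does), so the script must be executable.

```bash
#!/usr/bin/env bash
# Sketch: keep syncing odrive placeholders until none are left.
#   *.cloudf = folder placeholder, *.cloud = file placeholder.
# ODRIVE_PY is a hypothetical override; the default is the agent path
# used in this thread.
ODRIVE_PY=${ODRIVE_PY:-"$HOME/.odrive-agent/bin/odrive.py"}

# Usage: sync_placeholders DIR [folders|all] [PARALLEL] [MAX_PASSES]
#   folders -> expand only *.cloudf (builds the folder structure)
#   all     -> also download *.cloud files
sync_placeholders() {
  local dir=$1 mode=${2:-folders} procs=${3:-10} max_passes=${4:-50}
  local -a pattern
  if [ "$mode" = all ]; then
    pattern=( \( -name '*.cloudf' -o -name '*.cloud' \) )
  else
    pattern=( -name '*.cloudf' )
  fi
  local pass
  for (( pass = 0; pass < max_passes; pass++ )); do
    # Done as soon as a pass finds nothing left to expand.
    if [ -z "$(find "$dir" -type f "${pattern[@]}" -print)" ]; then
      return 0
    fi
    # Each sync call only goes one level deep, hence the outer loop.
    find "$dir" -type f "${pattern[@]}" -print0 |
      xargs -0 -n 1 -P "$procs" "$ODRIVE_PY" sync
  done
  # Cap reached: avoid looping forever if a sync keeps failing.
  echo "placeholders still present after $max_passes passes" >&2
  return 1
}
```

For example, `sync_placeholders ~/odrive-agent-mount folders` builds the structure, then `sync_placeholders "$HOME/odrive-agent-mount/Amazon Cloud Drive/Media/Movies" all` downloads only that folder. The cap stops the loop if a sync keeps failing, where the original loop only stops once `odrive.py` prints nothing.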

OK, I understand. Great script, @Philip, and thanks for the post, Timmert.

In the folder ~/odrive-agent-mount/ there is one file, "Amazon Cloud Drive.cloudf", and when I run the folder structure command: find "~/odrive-agent-mount/" -type f -name "*.cloudf" -exec python "$HOME/.odrive-agent/bin/odrive.py" sync "{}" \;

I get an error:

find: `~/odrive-agent-mount/': No such file or directory

root@instance-1:~/odrive-agent-mount# ls -all

total 12

drwxr-xr-x 2 root root 4096 May 21 10:23 .

drwx------ 4 root root 4096 May 21 10:22 ..

-rw-r--r-- 1 root root 0 May 21 10:23 Amazon Cloud Drive.cloudf

-rw-r--r-- 1 root root 71 May 21 10:23 .odrive

You might need to remove the quotes, I think. My bad! Inside double quotes the ~ is not expanded, so find looks for a directory literally named "~".

find ~/odrive-agent-mount/ -type f -name "*.cloudf" -exec python "$HOME/.odrive-agent/bin/odrive.py" sync "{}" \;

Now the folder sync script works. GREAT, thanks!

I have found another easy way to switch.

FOR USERS WHO PREFER WINDOWS!

Create a Google Cloud VM with 1 core, 3.75 GB RAM and a 70 GB HDD running Windows Server 2016 (not Core).

Then install ExpanDrive with drive letter Z and use rclone to move all data directly from Z: to Google Drive with --transfers 10. It sometimes outputs ERROR messages, but most of the files transfer. Currently I have an upload speed of 50 MByte/s!

The good news: the VM only costs $54/month, so the $300 free credit covers it easily!

Not sure how you managed 12 TB in one night. I’ve got 20 TB, and I am doing about 3-4 TB every 7-8 hours just for the download.
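A quick way to sanity-check these numbers is to convert size and rate into hours. The helper below is a hypothetical sketch: it assumes decimal units (1 TB = 1,000,000 MB) and treats a rate containing "Mbit" as megabits per second (divided by 8 to get MB/s). At the quoted 430 MB/s, 12 TB works out to about 7.8 hours, which does fit in one night. At 3-4 TB per 7-8 hours (roughly 105-160 MB/s) the same 12 TB would take about 21-32 hours.

```bash
#!/usr/bin/env bash
# Hypothetical helper: hours needed to move SIZE_TB terabytes at RATE.
#   RATE is in MB/s, or in megabits/s if it contains "Mbit" (e.g. 954Mbit).
# Decimal units assumed: 1 TB = 1e6 MB, 1 MB/s = 8 Mbit/s.
transfer_hours() {
  local size_tb=$1 rate=$2
  awk -v tb="$size_tb" -v r="$rate" 'BEGIN {
    mbps = r + 0                            # leading number of RATE
    if (r ~ /[Mm][Bb]it/) mbps = mbps / 8   # megabits -> megabytes
    printf "%.1f\n", tb * 1e6 / mbps / 3600 # MB / (MB/s) -> s -> h
  }'
}
```

For example, `transfer_hours 12 430` prints 7.8, and `transfer_hours 20 954.07Mbit` prints 46.6, i.e. about two days for 20 TB at a steady 954 Mbit/s.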

The sync works great! I download with:

Curr: 935.05 MBit/s

Avg: 954.07 MBit/s

Next question is rclone crypt: my files on ACD are encrypted with rclone, and now I want to decrypt the files locally and upload them to Google Drive decrypted.

- My odrive download dir with the encrypted files is "/mnt/data-disk/Amazon\ Cloud\ Drive/crypt/xsadaf2fdsa" (the Movies folder).

- How can I mount this local directory in rclone?

- Do I create a new remote with option "5" pointing at the odrive download dir, or use option "9", "Local Disk"?
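One way to approach this, sketched under assumptions: an rclone crypt remote does not have to wrap a cloud remote, and its `remote =` line can be a plain local path. A crypt remote with the same passwords as the ACD one, pointed at the local folder that corresponds to the ACD crypt remote's root (here `.../crypt`, the parent of the encrypted `xsadaf2fdsa`), should show `Movies` decrypted. Copying the existing section keeps the obscured passwords unchanged. The helper name `clone_crypt_remote` and the remote names `acdcrypt`, `localcrypt` and `gdrive` are hypothetical, as is the assumption that the ACD crypt remote pointed at the `crypt` folder.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a copy of crypt remote SRC from rclone config
# file CONF, renamed to NEW and pointed at LOCAL_DIR instead of the cloud.
# All other keys (type, filename_encryption, obscured password/password2)
# are copied unchanged, so the copy decrypts with the same keys.
# Write LOCAL_DIR without shell backslash escapes (plain spaces).
clone_crypt_remote() {
  local conf=$1 src=$2 new=$3 local_dir=$4
  awk -v src="[$src]" -v new="[$new]" -v dir="$local_dir" '
    /^\[/ { in_sec = ($0 == src); if (in_sec) print new; next }  # section header
    in_sec && /^remote[ \t]*=/ { print "remote = " dir; next }  # swap the path
    in_sec { print }                                            # copy the rest
  ' "$conf"
}
```

Usage sketch: `clone_crypt_remote ~/.config/rclone/rclone.conf acdcrypt localcrypt "/mnt/data-disk/Amazon Cloud Drive/crypt" > localcrypt.conf`. Check the result, append it to your rclone.conf (`rclone config file` shows where yours lives), then try `rclone copy localcrypt:Movies gdrive:Movies`.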

LOL about doing it on a home machine. I tried MultCloud initially and its speeds were slower than my house would have been. Instead I rented a server with a gigabit uplink and 4x4TB drives, which I can return within 14 days at no cost to me.

So far I do not think odrive has good speed: it looked to hit 11 MB/s in Task Manager, but I’m getting a tool now so I can monitor the bandwidth it is using. With this system I was able to upload to ACD at 50-60 MB/s, so I’d like to see close to 100 MB/s down.