[Animosity022]

Can you share the debug log and the memory dump?

Remote Control / API

The only way I can connect to rclone is over HTTPS, and I get this error:

https://ip:port/debug/pprof/goroutine?debug=1: x509: cannot validate certificate for <ip> because it doesn't contain any IP SANs

Any help with this?
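The x509 error means the client refuses the certificate because it is addressed by IP and the certificate lists no IP SANs. One possible workaround, sketched below, is to download the profile with certificate verification turned off and inspect the saved file locally. This is only a sketch under assumptions: the helper names (`insecure_context`, `build_request`, `fetch_profile`) are made up for illustration, basic auth is assumed to be what the rc server uses, and skipping verification is only reasonable on a network you trust.

```python
import base64
import ssl
import urllib.request


def insecure_context() -> ssl.SSLContext:
    """Return a TLS context that skips certificate and hostname checks."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False       # must be disabled before verify_mode
    ctx.verify_mode = ssl.CERT_NONE  # accept a certificate without IP SANs
    return ctx


def build_request(url, user=None, password=None):
    """Build a GET request, adding HTTP basic auth if a user is given."""
    req = urllib.request.Request(url)
    if user is not None:
        raw = f"{user}:{password or ''}".encode()
        token = base64.b64encode(raw).decode()
        req.add_header("Authorization", f"Basic {token}")
    return req


def fetch_profile(url, out_path, user=None, password=None, timeout=60):
    """Download a pprof endpoint to out_path and return the byte count."""
    req = build_request(url, user, password)
    with urllib.request.urlopen(
        req, timeout=timeout, context=insecure_context()
    ) as resp:
        data = resp.read()
    with open(out_path, "wb") as fh:
        fh.write(data)
    return len(data)


# Example call (replace ip:port and the credentials with your own):
# fetch_profile("https://ip:port/debug/pprof/goroutine?debug=1",
#               "goroutine.txt", user="user", password="pass")
```

With `?debug=1` the saved file is plain text. Without that query string the endpoint returns a binary profile, which `go tool pprof` should be able to open from the local file.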

I used core/memstats, and here is the result:

"Alloc": 823668928, "BuckHashSys": 1687850, "Frees": 4699578171, "GCSys": 76943360, "HeapAlloc": 823668928, "HeapIdle": 1025417216, "HeapInuse": 985194496, "HeapObjects": 5096734, "HeapReleased": 565354496, "HeapSys": 2010611712, "MCacheInuse": 13888, "MCacheSys": 16384, "MSpanInuse": 18890672, "MSpanSys": 25673728, "Mallocs": 4704674905, "OtherSys": 7846094, "StackInuse": 2654208, "StackSys": 2654208, "Sys": 2125433336, "TotalAlloc": 1187534721016

Another machine, which receives exactly the same commands and has no memory issue:

"Alloc": 15428592, "BuckHashSys": 1823709, "Frees": 2667775013, "GCSys": 3791872, "HeapAlloc": 15428592, "HeapIdle": 76636160, "HeapInuse": 19111936, "HeapObjects": 98855, "HeapReleased": 66314240, "HeapSys": 95748096, "MCacheInuse": 13632, "MCacheSys": 16384, "MSpanInuse": 231696, "MSpanSys": 753664, "Mallocs": 2667873868, "OtherSys": 1447195, "StackInuse": 4915200, "StackSys": 4915200, "Sys": 108496120, "TotalAlloc": 720210779856
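To make the two outputs easier to compare, here is a small sketch that puts a few of the fields side by side. The numbers are copied from the two results above; the names `leaky`, `healthy` and the helper functions are just labels chosen for this example. It assumes the usual Go `runtime.MemStats` meaning of the fields, where `Mallocs - Frees` is the number of live objects and `HeapIdle - HeapReleased` is idle heap the runtime is holding on to rather than returning to the OS.

```python
# Selected core/memstats fields from the machine with the memory problem.
leaky = {
    "HeapAlloc": 823668928, "HeapIdle": 1025417216, "HeapInuse": 985194496,
    "HeapObjects": 5096734, "HeapReleased": 565354496, "HeapSys": 2010611712,
    "Sys": 2125433336, "Mallocs": 4704674905, "Frees": 4699578171,
}

# The same fields from the machine without the problem.
healthy = {
    "HeapAlloc": 15428592, "HeapIdle": 76636160, "HeapInuse": 19111936,
    "HeapObjects": 98855, "HeapReleased": 66314240, "HeapSys": 95748096,
    "Sys": 108496120, "Mallocs": 2667873868, "Frees": 2667775013,
}


def mib(n):
    """Convert bytes to MiB."""
    return n / (1024 * 1024)


def live_objects(stats):
    """Objects allocated and not yet freed."""
    return stats["Mallocs"] - stats["Frees"]


def retained_idle(stats):
    """Idle heap bytes kept by the runtime instead of released to the OS."""
    return stats["HeapIdle"] - stats["HeapReleased"]


def compare(a, b, fields=("HeapAlloc", "HeapInuse", "HeapSys", "Sys")):
    """Return (field, a in MiB, b in MiB, a/b ratio) for each byte field."""
    return [(f, mib(a[f]), mib(b[f]), a[f] / b[f]) for f in fields]


def report():
    """Print a side-by-side summary of the two machines."""
    for field, a_mib, b_mib, ratio in compare(leaky, healthy):
        print(f"{field:10s} {a_mib:10.1f} MiB {b_mib:8.1f} MiB  x{ratio:.1f}")
    print("live objects :", live_objects(leaky), "vs", live_objects(healthy))
    print("retained idle:", f"{mib(retained_idle(leaky)):.1f} MiB",
          "vs", f"{mib(retained_idle(healthy)):.1f} MiB")
```

Calling `report()` prints the table. The gap is mostly in live heap objects, not in idle memory waiting to be released, which would be consistent with something still holding references on the affected machine.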

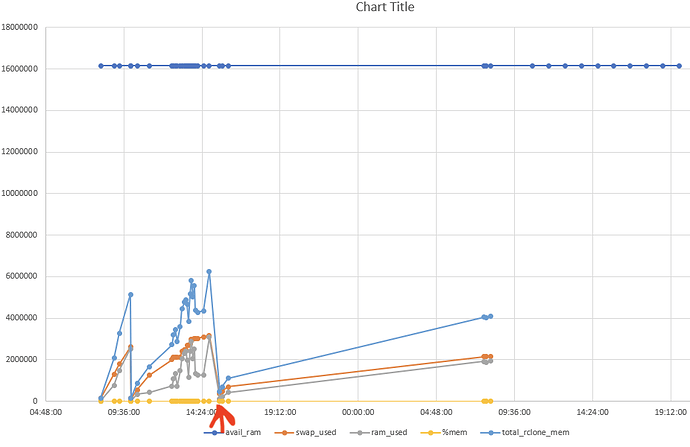

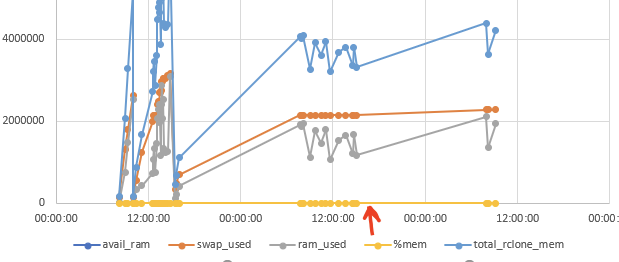

After reducing the job expire duration, the situation is much better; at least we have not run out of memory yet. But memory consumption is still going up gradually.

The log file is now over 20 GB. I am trying to download and open it.