Came to the forums to say I'm having exactly the same issues, here in Australia. I'll decrease my chunk size and report back as well.

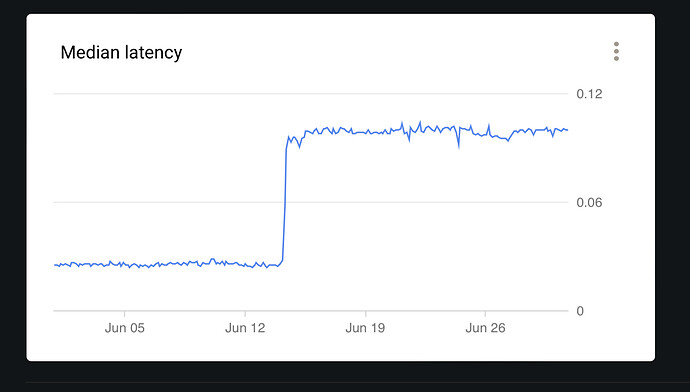

Looking at my API console, I can also see a not insignificant increase in latency. Not sure if this is related.