Has anyone tried this and confirmed that they don't pay for egress?

Yes. Many times.

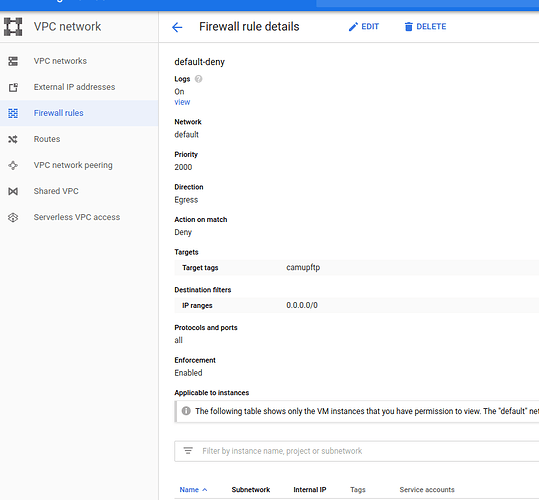

If you want to be sure and ‘protect’ yourself, just set up a deny-all rule for egress traffic from your instance in the “vpc network” -> firewalls section of the cloud platform GUI.

Then you can select the view link and watch any traffic attempts get denied.

Is there an rclone option that keeps transfers under this quota of 750 GB or 100 GB?

Server-side copies count against the 100 GB quota, while other transfers count against the 750 GB one. You can see in the logs whether a copy is server-side. --disable copy will turn server-side copying off completely.

All my missing files are in the Google Drive recycle bin, help. How do I restore them all at once?

The easy way is with the GUI. You can also use the --drive-trashed-only flag to see the trash with rclone.
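A rough sketch of what looking at the trash could look like, wrapped in a small helper so nothing runs until you call it. The function name list_trash is made up for illustration, and remote1: is just a placeholder for whatever your remote is called in your config. It only lists files, it doesn't restore or delete anything.

```shell
# Hypothetical helper: list every file currently in the Drive trash.
# $1 is the remote to inspect, e.g. "remote1:" (placeholder name).
list_trash() {
  # lsf prints one path per line; -R recurses, --files-only skips directories,
  # --drive-trashed-only restricts the listing to trashed items.
  rclone lsf -R --files-only --drive-trashed-only "$1"
}
```

Something like `list_trash remote1: | head` should then show the first few trashed files in their original folder structure.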

What would be the command? Is it quick to restore from the trash?

It should be pretty quick. You can just copy them out as with any other rclone command, using move or copy. Test first with --dry-run.

One example, I'm not sure what to do.

sudo rclone ...

I'm not in front of a computer but...

rclone move remote1: remote1: --drive-trashed-only --dry-run

I've not played with this too much, so make sure you test.

Honestly the GUI is easier most likely.

You can try a few with

rclone copy remote1: remote1:testlocation/ --drive-trashed-only --dry-run

For the GUI I installed RcloneBrowser, but I couldn't find an option to restore from the recycle bin.

I meant the Google Drive web interface in your browser.

Ah yes, but there you don't have the option to restore all the files in the bin at once.

It depends on how you deleted them. If you deleted an entire folder, you can restore that folder. But yes, you can use rclone too.

Why are you running sudo rclone? You shouldn't need sudo, especially when copying from one drive to another.

I have an idea that might simplify your situation, especially when it comes to the deleted files. Forget about what ended up in the trash. Don't empty your trash, in case this isn't successful, but otherwise just leave that junk there.

It seems like your main issue is the rate limiting.

Make sure you are using a Google Cloud Compute VM, as others suggested before. Ever since switching to that, I cannot recommend it highly enough.

Once your VM is up, add both remotes to your config, and run this:

tmux

rclone copy --drive-shared-with-me Gdrive: Gsuite:New --bwlimit 8.5M -P

Then press CTRL b, then $

Type rclone, press enter

Then press CTRL b, then d (not CTRL d, just d)

What you did is open a "spare" terminal, name it rclone for later reference, then detach from it to let it run in the background. So your rclone command will continue to run as if you were logged into the machine and watching the terminal. I find it much more convenient than nohup, and (at least on Ubuntu 18.04 LTS) it comes preinstalled on the Google VM. You can safely log out of the VM completely and just let it run.

Next time you log into your VM, just type

tmux attach-session -t rclone

and you'll be right back to it.

The 8.5M bwlimit will keep you at high speeds, but below 750GB/Day.

8.5M works out to 717GB/Day

The math:

- 750 GB/Day Limit

- 750 GB * 1,024 = 768,000 MB

- 1 Day = 86,400 Seconds

- 768,000 / 86,400 = 8.89 MB/s (rounded), so 8.5M leaves a little headroom
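If you want to redo the math for a different limit, here's a quick sketch in shell with awk. It assumes, like the numbers above, that the quota is counted in 1,024-based units (rclone's M suffix for --bwlimit is 1,024-based). The variable names are mine.

```shell
# Daily quota (GB, treated as 1,024-based like the math above) and seconds per day
quota_gb=750
secs_per_day=86400

# Rate in MB/s that would exactly hit the quota over 24 hours
ceiling=$(awk -v q="$quota_gb" -v s="$secs_per_day" 'BEGIN { printf "%.2f", q * 1024 / s }')

# Daily volume in GB when running at --bwlimit 8.5M around the clock
daily=$(awk -v r=8.5 -v s="$secs_per_day" 'BEGIN { printf "%.0f", r * s / 1024 }')

echo "ceiling: ${ceiling} MB/s, 8.5M moves about ${daily} GB/day"
```

Change quota_gb or the 8.5 to try other values.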

Hope it helps.

Solved with your tips, thank you!!!

Thank you all! I'm very happy because I've been able to move my files from the company server to my personal drive.
