Is there a way to run a simulation of this on Windows? Some kind of A/B test: create 10,000+ files in one folder and measure how long it takes to open, versus splitting those 10,000 files into sub-folders (A-Z, etc.).
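A minimal local sketch of that A/B test, assuming Python 3.9+ (it runs on Windows or any other OS). It creates N empty files either in one flat folder or sharded into A-Z sub-folders by a hypothetical naming scheme, then times a full walk that stats every file, roughly what a file manager does when it opens the folder. As written it measures the local disk in a temp directory, not an rclone mount; to test a mount, you would point `root` at a folder on the mounted drive letter instead.

```python
import os
import string
import tempfile
import time
from pathlib import Path


def make_files(root: Path, count: int, sharded: bool) -> None:
    """Create `count` empty files, either flat or split into A-Z sub-folders."""
    letters = string.ascii_uppercase
    for i in range(count):
        letter = letters[i % len(letters)]
        folder = root / letter if sharded else root
        folder.mkdir(parents=True, exist_ok=True)
        (folder / f"{letter}_{i:06d}.txt").touch()


def time_listing(root: Path) -> tuple[int, float]:
    """Walk the tree and stat every file; return (files seen, seconds taken)."""
    start = time.perf_counter()
    files = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            os.stat(os.path.join(dirpath, name))  # forces a size/modtime lookup
            files += 1
    return files, time.perf_counter() - start


def run_ab_test(count: int = 10_000) -> dict[str, tuple[int, float]]:
    """Build each layout in a fresh temp dir and time a full listing of it."""
    results = {}
    for label, sharded in (("flat", False), ("a-z", True)):
        with tempfile.TemporaryDirectory() as tmp:
            root = Path(tmp)
            make_files(root, count, sharded)
            results[label] = time_listing(root)
    return results


if __name__ == "__main__":
    for label, (files, secs) in run_ab_test().items():
        print(f"{label}: {files} files listed in {secs:.3f}s")
```

Timing a walk rather than a single `os.listdir` is a deliberate choice: the slowness discussed below comes from per-file metadata lookups, not from the directory listing itself.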

Ah, this is probably because it is fetching the modification times which take an extra transaction on s3. vfs/refresh doesn't fetch the modification time.

Try adding the --no-modtime flag or the --use-server-modtime flag - that should speed it up.

thanks

the issue is not about speeding up, but that vfs/refresh does nothing on a mount using s3 wasabi.

the point i am trying to make is that vfs/refresh seems to have a bug or i am misunderstanding.

- vfs/refresh does make a difference on a mount using gdrive. after refresh i can enter the folder immediately.

- vfs/refresh does NOT make a difference on a mount using s3 wasabi.

after the refresh, if i try to enter into a folder, there is a 60+ second delay.

the only way to prime/pre-cache is `dir y: /s`

after `dir y: /s`, i can enter the folder immediately.

It does work on Linux with Google Drive. I use it quite a bit and just reverified it. It's odd that it isn't working on Windows for you.

yes, with gdrive mount on windows, the refresh works.

no, with s3 wasabi mount on windows, the refresh does nothing, as far as i can tell.

I think that if it's not caching the modtimes, then when you change into a directory it still needs to grab the modtimes (because your file manager, or whatever you're accessing it with, asks for them), and that is why it is slow. I think what @ncw meant was that if you use --use-server-modtime, it would fill those in with the server modtimes instead, and browsing the mount would be faster (but show the server modtimes instead).

i understand what ncw wrote and i did a test and he is correct.

my issue is not with speed itself, but the difference in speed and behaviors between two mounts.

in my testing, on a folder with 5000 files

vfs/refresh on gdrive mount, when i enter the folder, zero delay

vfs/refresh on s3 wasabi mount, when i enter the folder, 60+ second delay.

why is vfs/refresh making a difference on gdrive mount but NOT on s3 wasabi mount?

another difference,

vfs/refresh on gdrive mount takes 14 seconds to complete.

vfs/refresh on s3 wasabi mount, takes less than one second to complete.

why are the speed and behavior so different, when both are folders with 5000 files in them?

I think it's because wasabi uses the s3 backend, which won't automatically grab the modtimes because they can be expensive (another round trip per file). But since the listing of a directory NEEDS that info, the listing will have to go and grab that data anyway.

So if you use --use-server-modtime, see if it browses faster. It will then be caching modtimes (the server modtime instead, though).
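As a back-of-the-envelope check using the numbers in this thread: if every modtime lookup is a separate, sequential round trip, a 60-second delay on a 5000-file folder implies roughly 12 ms per request. The sketch below only does that arithmetic; the per-request latency is inferred from the reported delay, not measured.

```python
def per_request_latency_ms(total_seconds: float, files: int) -> float:
    """Average latency per request if each file costs one sequential round trip."""
    return total_seconds / files * 1000


def expected_delay_s(files: int, latency_ms: float) -> float:
    """Total delay to fetch modtimes one file at a time at a given latency."""
    return files * latency_ms / 1000


if __name__ == "__main__":
    lat = per_request_latency_ms(60, 5000)
    print(f"~{lat:.0f} ms per request")
    print(f"50,000 files at that rate: ~{expected_delay_s(50_000, lat) / 60:.0f} minutes")
```

The point of the model is that the delay scales linearly with file count, which is why --use-server-modtime (no per-file request) makes such a difference.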

yes, i agree that is faster.

so `rclone rc vfs/refresh recursive=true --fast-list -v` behaves differently depending on the backend?

is that why the refresh on the s3 mount takes less than one second but does nothing to speed up entering a folder?

Yes, I've seen this in other areas as well. Different backends return different data for the same round trips, and some you pay more for (like S3). So I think the reasoning is to avoid incurring unknown costs. On S3, I think it would need to make a query for each file to populate the modtimes, which may or may not be desired.
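To put rough numbers on "a query for each file": S3's ListObjectsV2 returns at most 1000 keys per page, so a listing is cheap in requests, while (as I understand it) the modtime rclone stores is kept in object metadata that the listing does not return, so it needs one extra request per object. The sketch counts requests for both cases, assuming a single flat listing as with --fast-list and ignoring retries; it is an estimate, not a billing calculator.

```python
import math

S3_MAX_KEYS_PER_PAGE = 1000  # ListObjectsV2 page-size limit


def listing_requests(objects: int) -> int:
    """List calls needed to enumerate `objects` keys (always at least one call)."""
    return max(1, math.ceil(objects / S3_MAX_KEYS_PER_PAGE))


def requests_with_modtimes(objects: int) -> int:
    """List calls plus one metadata request per object to read its stored modtime."""
    return listing_requests(objects) + objects


if __name__ == "__main__":
    for n in (5_000, 50_000):
        print(f"{n} objects: {listing_requests(n)} list requests, "
              f"{requests_with_modtimes(n)} with modtimes")
```

For the 5000-file folder in this thread that is 5 requests versus 5005, which is the cost difference a provider without API charges (like Wasabi) would not bill for, but others would.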

yeah,

i guess most s3 storage companies have api costs and bans/limits.

wasabi does not.

i wish there was a flag for the s3 backend that lets fast-list work without regard to api costs/bans/etc.

thanks

What kind of effect would using the above flags have on a read-only GDrive mount, like mine? Would there be any benefit to using either? If so, which would be better?

for gdrive you don't need them. --no-modtime will not grab modtimes. --use-server-modtime will essentially give you the time the drive was mounted.

Yes I think we need an enable-mod-times flag for backends where the default is the inverse.

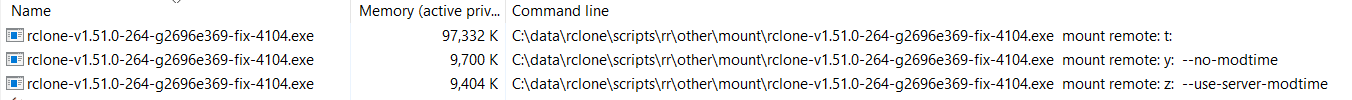

i did a test

using double commander, i did a flat view, which flattens a set of folders into one list.

10 folders with 5000 files each, a total of 50,000 files:

| mount | time | ram used |
| --- | --- | --- |
| t: | gave up after 6 minutes, still not complete | 97MB |
| y: | 12 seconds | 70MB |
| z: | 11 seconds | 67MB |

so depending on what you are using the mount for, we have options to tweak speed versus need for modtime.

As far as I can remember, it is only s3 and swift where you don't get the modtime as part of the listing, but I might be wrong about that! The http backend maybe? But that needs to do a HEAD request to get the size, which you will need regardless.

Maybe if I made a Feature flag for the backends

- ModTimeIsExpensive

then the VFS layer could default to modtimes OFF for those backends (which is always what you want in my experience) and ON for everything else.
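A minimal sketch of that proposed default, written in Python rather than rclone's Go, with hypothetical names: `Features`, `mod_time_is_expensive` and `vfs_reads_modtimes` illustrate the idea and are not rclone's actual API. Backends flagged as expensive default to modtimes off, and an explicit user setting (the enable-mod-times idea mentioned earlier) wins either way.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Features:
    """Hypothetical per-backend feature flags."""
    name: str
    mod_time_is_expensive: bool = False


def vfs_reads_modtimes(features: Features, user_override: Optional[bool] = None) -> bool:
    """Decide whether the VFS layer should read per-file modtimes.

    user_override is a hypothetical explicit setting; None means "use the default".
    """
    if user_override is not None:
        return user_override
    # Default: OFF where reading a modtime costs an extra request per file.
    return not features.mod_time_is_expensive


if __name__ == "__main__":
    s3 = Features("s3", mod_time_is_expensive=True)
    drive = Features("drive")
    print(vfs_reads_modtimes(s3), vfs_reads_modtimes(drive), vfs_reads_modtimes(s3, True))
```

Keeping the override separate from the backend flag is what would let a Wasabi user opt back in to modtimes without changing the default for other S3 providers.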

Is that what you were thinking?

Note that --no-modtime gives the file the modification date of the directory, whereas --use-server-modtime gives the LastModified time that the server returns for "free" in the directory listings, so that is probably more desirable.
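The difference between the two flags can be sketched as a small resolver. The `Entry` type and all field and parameter names are hypothetical; `listing_modtime` stands for the LastModified value that arrives with the directory listing, and `fetch_stored_modtime` stands for the extra per-file request. Which flag wins if both are set is an assumption here, not confirmed rclone behaviour.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable


@dataclass
class Entry:
    """Hypothetical directory-listing entry."""
    name: str
    listing_modtime: datetime  # LastModified, returned "for free" with the listing


def resolve_modtime(
    entry: Entry,
    dir_modtime: datetime,
    fetch_stored_modtime: Callable[[str], datetime],
    no_modtime: bool = False,
    use_server_modtime: bool = False,
) -> datetime:
    """Pick the modtime to show; checking no_modtime first is an assumption."""
    if no_modtime:
        return dir_modtime  # like --no-modtime: reuse the directory's modtime
    if use_server_modtime:
        return entry.listing_modtime  # like --use-server-modtime: no extra request
    return fetch_stored_modtime(entry.name)  # default: one extra request per file


if __name__ == "__main__":
    utc = timezone.utc
    entry = Entry("a.txt", datetime(2021, 6, 1, tzinfo=utc))
    folder = datetime(2019, 1, 1, tzinfo=utc)

    def fake_fetch(name: str) -> datetime:
        print(f"extra request for {name}")
        return datetime(2020, 1, 1, tzinfo=utc)

    print(resolve_modtime(entry, folder, fake_fetch, use_server_modtime=True))
    print(resolve_modtime(entry, folder, fake_fetch))
```

Only the default path calls `fetch_stored_modtime`, which is where the per-file round trip, and the slowness on S3, comes from.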

One of the reasons this is so slow is that the VFS layer fetches the modtimes sequentially. The VFS layer is entirely driven by the mount, though, so doing them in parallel would be very hard unless the mount layer (and hence the original application) read the modtimes in parallel.
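To illustrate why sequential lookups hurt, here is a sketch with a simulated fixed latency per request; the 10 ms figure and the `fake_head` function are stand-ins, not real S3 calls. It only shows the general effect of issuing latency-bound requests concurrently; as noted above, the VFS layer cannot easily do this itself because the application drives the lookups.

```python
import time
from concurrent.futures import ThreadPoolExecutor

LATENCY_S = 0.01  # assumed per-request round-trip time


def fake_head(name: str) -> str:
    """Stand-in for a per-file metadata request."""
    time.sleep(LATENCY_S)
    return f"modtime-of-{name}"


def fetch_sequential(names):
    """One request at a time: total time is roughly len(names) * LATENCY_S."""
    return [fake_head(n) for n in names]


def fetch_parallel(names, workers: int = 16):
    """Up to `workers` requests in flight; map() keeps results in input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(fake_head, names))


def timed(fn, names):
    start = time.perf_counter()
    result = fn(names)
    return result, time.perf_counter() - start


if __name__ == "__main__":
    names = [f"file{i}" for i in range(100)]
    seq, t_seq = timed(fetch_sequential, names)
    par, t_par = timed(fetch_parallel, names)
    assert seq == par
    print(f"sequential {t_seq:.2f}s, parallel {t_par:.2f}s")
```

Threads are enough here because the work is waiting on I/O, not computing.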

Yeah, I agree. Some remotes, like Wasabi, use S3? I think it would be good to get consistent results across different remotes by allowing the user to enable the expensive options when needed. One example is the above: vfs/refresh recursive=true on Wasabi doesn't do the extra round trip to grab the modtimes, even though Wasabi doesn't charge for that round trip.

Yes something like that would work.

Yes s3 is quite popular!

The recursive listing from s3 remotes is really, really quick, since S3 is optimized for that. However, reading the ModTime takes an extra request per file, which takes ages.

I'll stick it on the TODO list.

thanks but for me it is not a big deal.

now that it is clear what the behaviors are:

- if i do need mod-time, i can use `dir x: /s`
- if i do not need mod-time, i can use `vfs/refresh`
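If a script is preferred over `dir x: /s`, a rough Python equivalent of the priming step is to walk the mount and stat every file, which should make the mount fetch each modtime once. This is a sketch: `x:\` is just the drive letter from the post, and how long the results stay cached depends on your VFS settings (e.g. `--dir-cache-time`).

```python
import os
import sys
import time


def prime(root: str) -> tuple[int, int, float]:
    """Walk `root`, stat every file, and return (dirs, files, seconds)."""
    start = time.perf_counter()
    dirs = files = 0
    for dirpath, dirnames, filenames in os.walk(root):
        dirs += len(dirnames)
        for name in filenames:
            try:
                os.stat(os.path.join(dirpath, name))  # asks the mount for size/modtime
                files += 1
            except OSError as err:  # e.g. a file removed mid-walk
                print(f"skip {name}: {err}", file=sys.stderr)
    return dirs, files, time.perf_counter() - start


if __name__ == "__main__":
    root = sys.argv[1] if len(sys.argv) > 1 else "x:\\"
    d, f, secs = prime(root)
    print(f"{d} dirs, {f} files stat'ed in {secs:.1f}s")
```

Usage: `python prime.py x:\` (the script name is arbitrary). Like `dir /s`, it pays the per-file cost up front so that browsing afterwards is fast.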
