Thanks - will take a look now!

From the logs I can see that there were 543 async buffers running:

```
543: select [0~461 minutes] [Created by asyncreader.(*AsyncReader).init @ asyncreader.go:78]
asyncreader asyncreader.go:83 (*AsyncReader).init.func1(*)
```

There should only be 24 running (one per --transfers), so there are 519 extra.

However, in the log file we see about the right number of these errors:

```
$ grep -i 'retry.*10/10.*broken' rclone.log | wc -l
537
```

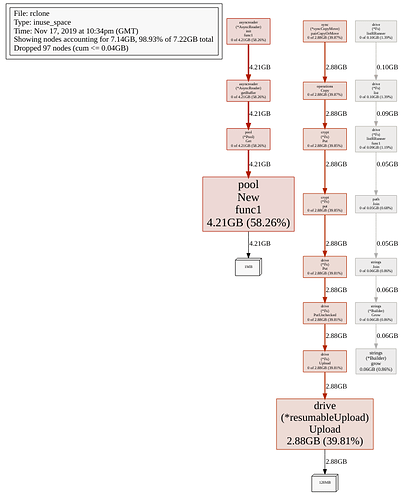

In the memory profile, we can see that half the memory is leaked by the async buffer, but a great deal is also leaked by the Google uploader.

By the look of it, this means that when operations.Copy exits with an error, it isn't closing the upload.

I'm also a bit suspicious of those failures being short each time...

A lot of investigation later, I think I've found the problem, which explains both things!

https://beta.rclone.org/branch/v1.50.1-048-g4005e125-fix-memory-leak-beta/ (uploaded in 15-30 mins)

Commit message:

```
accounting: fix memory leak on retries operations

Before this change if an operation was retried on operations.Copy and
the operation was large enough to use an async buffer then an async
buffer was leaked on the retry. This leaked memory, a file handle and
a go routine.

After this change if Account.WithBuffer is called and there is already
a buffer, then a new one won't be allocated.
```

Please have a go and let me know what happens! If this fixes the problem, I'll roll it into a point release.

Thanks, that was fast!

Do you want me to run this with the debug log and Go memory profiling again?

EDIT:

Running the same dataset with the same settings and log collection as last time.

Great - thanks! The debug log and memory profile would be good if it goes wrong. Hopefully it won't! I'd be interested in your results either way though.


It did not crash due to memory this time; it stopped because it hit the --max-transfer limit.

What happens if you don't set --max-transfer and hit the limit?

@ncw Anyhow, the log files are in the same shared drive, but in a directory named after the rclone version.

All the transfers halt, and rclone keeps retrying until the quota resets.

Looking at those, the memory usage seems to stay pretty constant at 3GB, which is what you'd expect: 24 --transfers × 128M --drive-chunk-size = 3GB.
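The expected figure is just transfers multiplied by chunk size, assuming (as in the post) that each transfer holds one `--drive-chunk-size` chunk in memory while uploading; rclone's `128M` means 128 MiB. A trivial Go sketch of that estimate, where `expectedBufferMiB` is a hypothetical helper name:

```go
package main

import "fmt"

// expectedBufferMiB estimates steady-state upload buffer memory,
// assuming each of the --transfers holds one --drive-chunk-size chunk.
func expectedBufferMiB(transfers, chunkSizeMiB int) int {
	return transfers * chunkSizeMiB
}

func main() {
	mib := expectedBufferMiB(24, 128)
	fmt.Printf("%d MiB = %.1f GiB\n", mib, float64(mib)/1024)
}
```

Memory that stays flat at this level, rather than growing with every retry, is what indicates the leak is gone.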

There weren't any strange errors about short transfers either, so that looks successful to me.

I've merged this to master now, which means it will be in the latest beta in 15-30 mins and released in v1.51. I'll also put it in v1.50.2, which I'll release over the next couple of days.

Happy to help! Appreciate your work!
