Requested, and also increased the checkers/transfers. Seeing much better performance even without the quota increase (at least for now).

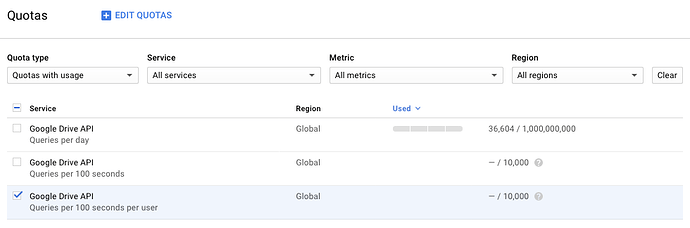

Where do you have a 1k per user per 3 seconds limit? I only see a 10k per user per 100 seconds limit.

I mistyped and have corrected it now. I meant per 100 seconds. Sorry for the confusion.

Thanks! Isn’t increasing that limit a little suspicious? I was quite comfortable pushing 200 MB/s from GDrive to ACD last night (I’ve got an old security profile that is still working).

I don’t think so. I was honest in my request: I use rclone to push files to GD as a backup, and I hit the 1,000 per user limit easily. Since I am the only user, I’d like it increased to the full 10,000 rate. They approved it with no issue.

It’s not like I’m doing anything illegal. I’m using a tool to back up my files… What would be suspicious?

Sounds reasonable. Still worried they will enforce the 1TB limit on <5 User Accounts.

Heh, worked for a brief while and then my user quota was crushed.

    Failed to set modification time: googleapi: Error 403: User rate limit exceeded, userRateLimitExceeded
    2017/05/24 13:10:32 DEBUG : pacer: Rate limited, sleeping for 1.591569721s (1 consecutive low level retries)
    2017/05/24 13:10:32 DEBUG : pacer: low level retry 1/10 (error googleapi: Error 403: User rate limit exceeded, userRateLimitExceeded)
    2017/05/24 13:10:32 DEBUG : pacer: Rate limited, sleeping for 2.139585917s (2 consecutive low level retries)
    2017/05/24 13:10:32 DEBUG : pacer: low level retry 1/10 (error googleapi: Error 403: User rate limit exceeded, userRateLimitExceeded)
    2017/05/24 13:10:32 DEBUG : pacer: Rate limited, sleeping for 4.204764712s (3 consecutive low level retries)
    2017/05/24 13:10:32 DEBUG : pacer: low level retry 1/10 (error googleapi: Error 403: User rate limit exceeded, userRateLimitExceeded)
    2017/05/24 13:10:32 DEBUG : pacer: Rate limited, sleeping for 8.050264449s (4 consecutive low level retries)
    2017/05/24 13:10:32 DEBUG : pacer: low level retry 2/10 (error googleapi: Error 403: User rate limit exceeded, userRateLimitExceeded)
    2017/05/24 13:10:32 DEBUG : pacer: Rate limited, sleeping for 16.303989579s (5 consecutive low level retries)
    2017/05/24 13:10:32 DEBUG : pacer: low level retry 1/10 (error googleapi: Error 403: User rate limit exceeded, userRateLimitExceeded)
    2017/05/24 13:10:32 DEBUG : pacer: Rate limited, sleeping for 16.909156333s (6 consecutive low level retries)
    2017/05/24 13:10:32 DEBUG : pacer: low level retry 1/10 (error googleapi: Error 403: User rate limit exceeded, userRateLimitExceeded)
    2017/05/24 13:10:32 DEBUG : pacer: Rate limited, sleeping for 16.429879825s (7 consecutive low level retries)
    2017/05/24 13:10:32 DEBUG : pacer: low level retry 1/10 (error googleapi: Error 403: User rate limit exceeded, userRateLimitExceeded)
    2017/05/24 13:10:32 DEBUG : pacer: Rate limited, sleeping for 16.184312746s (8 consecutive low level retries)
    2017/05/24 13:10:32 DEBUG : pacer: low level retry 1/10 (error googleapi: Error 403: User rate limit exceeded, userRateLimitExceeded)
    2017/05/24 13:10:32 DEBUG : pacer: Rate limited, sleeping for 16.706343734s (9 consecutive low level retries)
    2017/05/24 13:10:32 DEBUG : pacer: low level retry 1/10 (error googleapi: Error 403: User rate limit exceeded, userRateLimitExceeded)
    2017/05/24 13:10:32 DEBUG : pacer: Rate limited, sleeping for 16.476771718s (10 consecutive low level retries)
    2017/05/24 13:10:32 DEBUG : pacer: low level retry 3/10 (error googleapi: Error 403: User rate limit exceeded, userRateLimitExceeded)
    2017/05/24 13:10:32 DEBUG : pacer: Rate limited, sleeping for 16.158377901s (11 consecutive low level retries)
    2017/05/24 13:10:32 DEBUG : pacer: low level retry 3/10 (error googleapi: Error 403: User rate limit exceeded, userRateLimitExceeded)
    2017/05/24 13:10:32 DEBUG : pacer: Rate limited, sleeping for 16.384065704s (12 consecutive low level retries)
    2017/05/24 13:10:32 DEBUG : pacer: low level retry 3/10 (error googleapi: Error 403: User rate limit exceeded, userRateLimitExceeded)
    2017/05/24 13:10:33 DEBUG : pacer: Rate limited, sleeping for 16.11208761s (13 consecutive low level retries)
    2017/05/24 13:10:33 DEBUG : pacer: low level retry 1/10 (error googleapi: Error 403: User rate limit exceeded, userRateLimitExceeded)
    2017/05/24 13:10:34 DEBUG : pacer: Rate limited, sleeping for 16.264975024s (14 consecutive low level retries)
    2017/05/24 13:10:34 DEBUG : pacer: low level retry 3/10 (error googleapi: Error 403: User rate limit exceeded, userRateLimitExceeded)

Get it increased to 10,000 and that goes away. You’re likely hitting the 1,000 limit, which is easy to do.

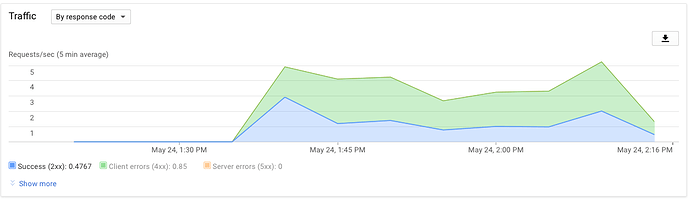

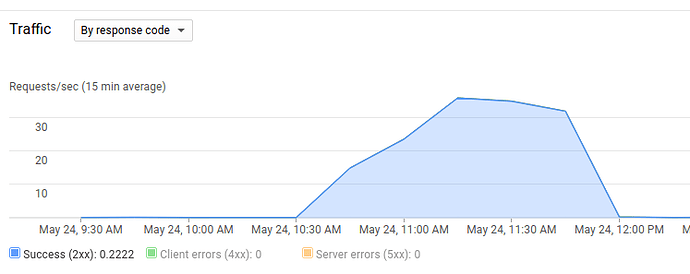

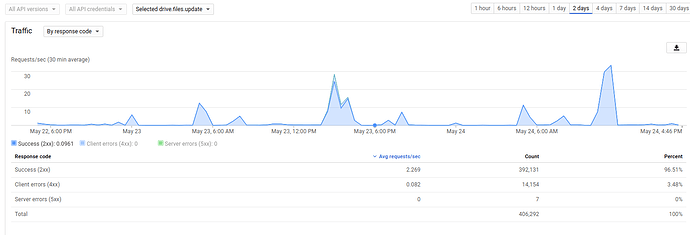

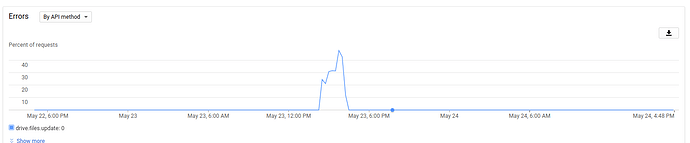

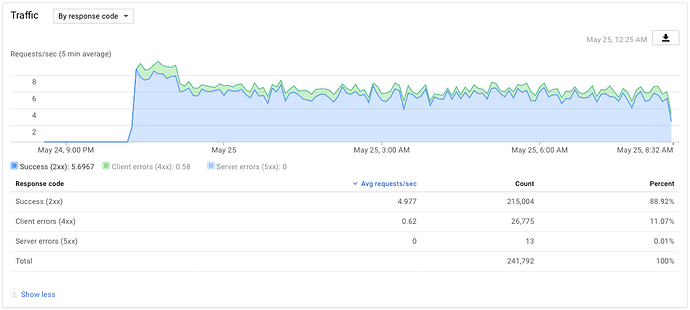

In https://console.developers.google.com you’ll notice that your errors go WAY down once your quota is increased: from roughly a 25-30% error rate to about 3%.

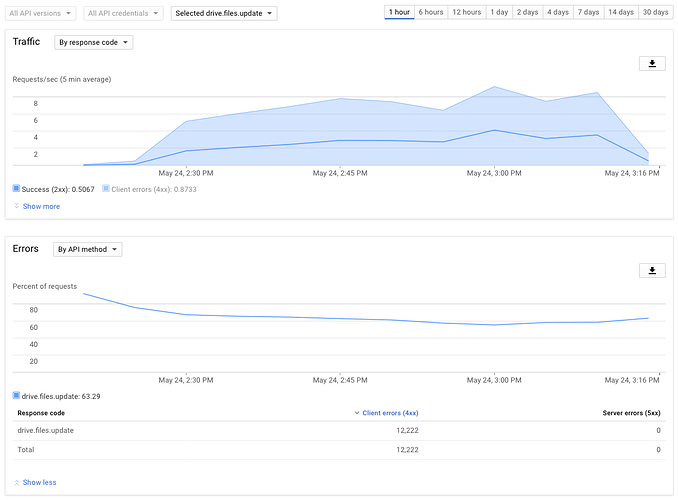

They increased my quota and I’m still seeing constant pacer/rate limiting, and an error rate of 63%. I don’t get it: my grand total of queries is 36,000, which should be about five minutes’ worth at the new rate. This is horrid.

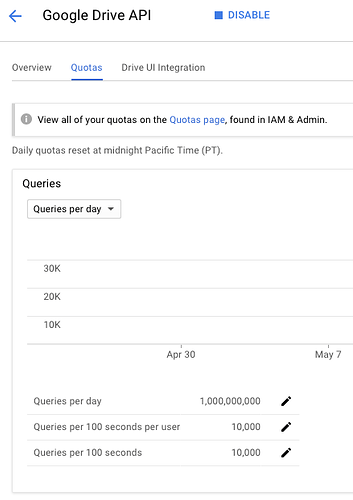

Did you increase it in your API too? You have to manually add that new quota.

Mine looks different.

https://console.developers.google.com/apis/api/drive.googleapis.com/quotas

Go there and click the pencil next to the queries per 100 seconds.

EDIT: ah yes. That looks right.

That graph sure looks awful. :\ I wonder if there is a delay before it kicks in.

Mine looks like this:

My guess is you’re still technically restricted to the 1,000. It may take a little while for the API to actually use the new quota.

Man, I hope so. 5.5M files out there (yay for macOS’s package format which encourages lots of individual files).

I’m starting to wonder if I screwed up the patch in some fashion. All errors are coming from the drive.files.update command, none from listing or other operations.

Do you want a copy of the file I modified?

This is the relevant function I modified from operations.go:

    func equal(src, dst Object, sizeOnly, checkSum bool) bool {
        if !Config.IgnoreSize {
            if src.Size() != dst.Size() {
                Debugf(src, "Sizes differ")
                return false
            }
        }
        if sizeOnly {
            Debugf(src, "Sizes identical")
            return true
        }

        // Assert: Size is equal or being ignored

        // If checking checksum and not modtime
        if checkSum {
            // Check the hash
            same, hash, _ := CheckHashes(src, dst)
            if !same {
                Debugf(src, "%v differ", hash)
                return false
            }
            if hash == HashNone {
                Debugf(src, "Size of src and dst objects identical")
            } else {
                Debugf(src, "Size and %v of src and dst objects identical", hash)
            }
            return true
        }

        // Sizes the same so check the mtime
        if Config.ModifyWindow == ModTimeNotSupported {
            Debugf(src, "Sizes identical")
            return true
        }
        srcModTime := src.ModTime()
        dstModTime := dst.ModTime()
        dt := dstModTime.Sub(srcModTime)
        ModifyWindow := Config.ModifyWindow
        if dt < ModifyWindow && dt > -ModifyWindow {
            Debugf(src, "Size and modification time the same (differ by %s, within tolerance %s)", dt, ModifyWindow)
            return true
        }

        Debugf(src, "Modification times differ by %s: %v, %v", dt, srcModTime, dstModTime)

        // Check if the hashes are the same
        //same, hash, _ := CheckHashes(src, dst)

        // mod time differs but hash is the same to reset mod time if required
        if !Config.NoUpdateModTime {
            // Size and hash the same but mtime different so update the
            // mtime of the dst object here
            err := dst.SetModTime(srcModTime)
            if err == ErrorCantSetModTime {
                Debugf(src, "src and dst identical but can't set mod time without re-uploading")
                return false
            } else if err != nil {
                Stats.Error()
                Errorf(dst, "Failed to set modification time: %v", err)
            } else {
                Infof(src, "Updated modification time in destination")
            }
        }
        return true
    }

Here is a pic:

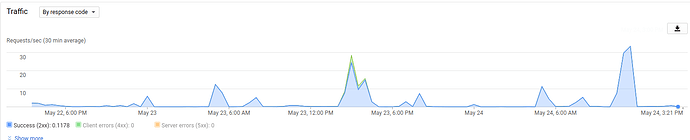

When I did my update yesterday, I also saw a good amount, I guess. So maybe it’s not you… but performance is good for me.

Did it get better today?

The error rate has decreased, but look closer at the Y-axis and the overall performance in QPS is still pretty sad. I verified with Google that my quota did indeed get updated. This is now running with --checkers 20 and --transfers 20, on your patched rclone code; maybe I’ll try 50 again and see how it fares.

I ran with something like 50 or 100. That looks better, though.

Any chance you could attach the modified operations.go to this issue? Or the output from git diff.

I can have a look at integrating this permanently with some magic flag or other!