My current setup is GD -> Crypt, and I mount the crypt remote with this systemd unit:

```
felix@gemini:/etc/systemd/system$ cat rclone.service
[Unit]
Description=RClone Service
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
ExecStart=/usr/bin/rclone mount gcrypt: /GD \
   --allow-other \
   --dir-cache-time 48h \
   --vfs-read-chunk-size 32M \
   --vfs-read-chunk-size-limit 2G \
   --buffer-size 512M \
   --syslog \
   --umask 002 \
   --log-level INFO
ExecStop=/bin/fusermount -uz /GD
Restart=on-abort
User=felix
Group=felix

[Install]
WantedBy=default.target
```
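For reference, here is how the two chunk flags in that unit interact. Per the rclone mount docs, the first ranged read uses --vfs-read-chunk-size and each following chunk doubles until it reaches --vfs-read-chunk-size-limit. The sketch below only does that arithmetic in MiB; chunk_sizes is a made-up helper name, not anything rclone ships:

```shell
#!/bin/bash
# Hypothetical helper: print the chunk sizes (MiB) rclone would request for a
# sequential read, assuming the size doubles after each chunk and is capped
# at the --vfs-read-chunk-size-limit value.
chunk_sizes() {
  local size=$1 limit=$2 count=$3 out=() i
  for ((i = 0; i < count; i++)); do
    out+=("$size")
    size=$((size * 2))
    if ((size > limit)); then
      size=$limit
    fi
  done
  echo "${out[*]}"
}

# 32M start, 2G (2048 MiB) limit, first 8 chunks
chunk_sizes 32 2048 8
```

With 32M and 2G the sizes run 32, 64, ..., 1024 MiB (2016 MiB in total) before every further request settles at 2048 MiB.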

I use mergerfs because I just like it better, but that’s personal preference. My mergerfs always writes new files to the first branch, and my rclone mount is read-write.

My mergerfs systemd unit:

```
felix@gemini:/etc/systemd/system$ cat mergerfs.service
[Unit]
Description=mergerFS Mounts
After=network-online.target rclone.service
Wants=network-online.target rclone.service
RequiresMountsFor=/GD

[Service]
Type=forking
User=felix
Group=felix
ExecStart=/home/felix/scripts/mergerfs_mount
ExecStop=/usr/bin/sudo /usr/bin/fusermount -uz /gmedia
ExecStartPost=/home/felix/scripts/mergerfs_find
Restart=on-abort
RestartSec=5
StartLimitInterval=60s
StartLimitBurst=3

[Install]
WantedBy=default.target
```

I use a script to run the actual mount for more flexibility.

felix@gemini:~/scripts$ cat mergerfs_mount

#!/bin/bash

# RClone

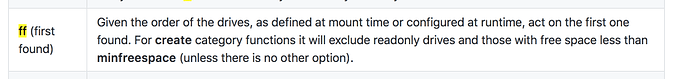

/usr/bin/mergerfs -o defaults,sync_read,allow_other,category.action=all,category.create=ff /data/local:/GD /gmedia

I’ve found that sync_read is needed with both unionfs and mergerfs (YMMV).
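The category.create=ff option is what sends new files to /data/local: for create operations mergerfs goes through the branches in mount order and uses the first one that qualifies. Here is a simplified sketch of that choice, assuming "qualifies" just means an existing, writable directory (real mergerfs also skips read-only branches and ones below minfreespace); first_found is a hypothetical name:

```shell
#!/bin/bash
# Simplified model of mergerfs' "ff" (first found) create policy:
# print the first branch, in mount order, that is an existing writable dir.
# Real mergerfs additionally checks minfreespace and branch modes (RO/NC).
first_found() {
  local IFS=':' branch
  for branch in $1; do
    if [ -d "$branch" ] && [ -w "$branch" ]; then
      echo "$branch"
      return 0
    fi
  done
  return 1  # no usable branch
}
```

For example, first_found "/data/local:/GD" prints /data/local as long as that directory exists and is writable, and only falls back to /GD otherwise.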

I also run a quick find as an ExecStartPost step to warm up the directory cache:

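```
felix@gemini:~/scripts$ cat mergerfs_find
#!/bin/bash
# Walk the merged tree in the background so rclone caches the directory listings;
# output is discarded so the full file list doesn't end up in the journal.
/usr/bin/find /gmedia > /dev/null 2>&1 &
```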

My reasoning for these settings: when Plex transcodes, it uses its own transcoder setting to read ahead 600 seconds, so it handles buffering on its own. When Plex direct streams, no transcode happens, so I’d rather have rclone do the buffering with a 512M buffer.
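A rough sizing check for that choice: rclone keeps --buffer-size per open file, so the upper bound on buffer memory is the buffer size times the number of files being read at once. A trivial helper (buffer_ram_mib is an illustrative name) makes the numbers concrete:

```shell
#!/bin/bash
# Upper bound on RAM (MiB) held by rclone's read buffers: --buffer-size is
# allocated per open file, so multiply by the number of files being read.
buffer_ram_mib() {
  local buffer_mib=$1 open_files=$2
  echo $((buffer_mib * open_files))
}

echo "4 direct streams: $(buffer_ram_mib 512 4) MiB"
```

So four simultaneous direct streams can hold up to 2 GiB of RAM in rclone’s buffers, which is worth checking against the machine’s memory.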

I run a script overnight that moves files from local storage to the cloud and cleans up afterwards. It’s not the prettiest, but it’s effective:

```
felix@gemini:~/scripts$ cat upload_cloud
#!/bin/bash

LOCKFILE="/var/lock/$(basename "$0")"

(
# Wait up to 5 seconds for the lock; exit if another run still holds it
flock -x -w 5 200 || exit 1

# Movies: move local files to the cloud, then remove emptied directories.
# find -empty -delete also removes nested empty dirs (e.g. TV/Show/Season 1).
DIR="/data/local/Movies"
if [ "$(ls -A "$DIR")" ]; then
  /usr/bin/rclone move "$DIR" gcrypt:Movies --checkers 3 --fast-list --syslog -v --tpslimit 3 --transfers 3
  find "$DIR" -mindepth 1 -type d -empty -delete
fi

# Radarr Movies
DIR="/data/local/Radarr_Movies"
if [ "$(ls -A "$DIR")" ]; then
  /usr/bin/rclone move "$DIR" gcrypt:Radarr_Movies --checkers 3 --fast-list --syslog -v --tpslimit 3 --transfers 3
  find "$DIR" -mindepth 1 -type d -empty -delete
fi

# TV Shows
DIR="/data/local/TV"
if [ "$(ls -A "$DIR")" ]; then
  /usr/bin/rclone move "$DIR" gcrypt:TV --checkers 3 --fast-list --syslog -v --tpslimit 3 --transfers 3
  find "$DIR" -mindepth 1 -type d -empty -delete
fi

) 200> "${LOCKFILE}"
```

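In theory, if someone is playing a file while it’s being moved, they may get a blip, but it should only last about a minute, roughly until the rclone mount notices the new file on Drive (rclone polls for changes every minute by default). You could schedule the moves for off hours to minimize that impact.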
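One way to do that, as a sketch: run upload_cloud from cron at night and have the script exit early if it is ever started outside a quiet window. The is_off_hours function, the 01:00 to 06:00 window, and the 03:00 cron time below are assumptions for illustration, not part of the setup above:

```shell
#!/bin/bash
# Hypothetical guard: succeed only if HOUR (0-23) falls in [START, END),
# handling windows that wrap past midnight (e.g. 22 -> 5).
# Example crontab entry to run the upload at 03:00:
#   0 3 * * * /home/felix/scripts/upload_cloud
is_off_hours() {
  local hour=$((10#$1)) start=$2 end=$3   # 10# so "08"/"09" aren't read as octal
  if ((start <= end)); then
    ((hour >= start && hour < end))
  else
    ((hour >= start || hour < end))
  fi
}

# Near the top of upload_cloud one could then add:
#   is_off_hours "$(date +%H)" 1 6 || exit 0
```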