@Stokkes I am having the same problem. Currently I use a unionfs mount to keep the latest 10 TB of my library local and the rest on GDrive. I then use https://github.com/l3uddz/unionfs_cleaner to transfer 1 TB to the cloud when the local side goes over 10 TB. However, for this setup to work I now need those transfers to go through the cached rclone remote, and when I try I get "database is in use" errors.

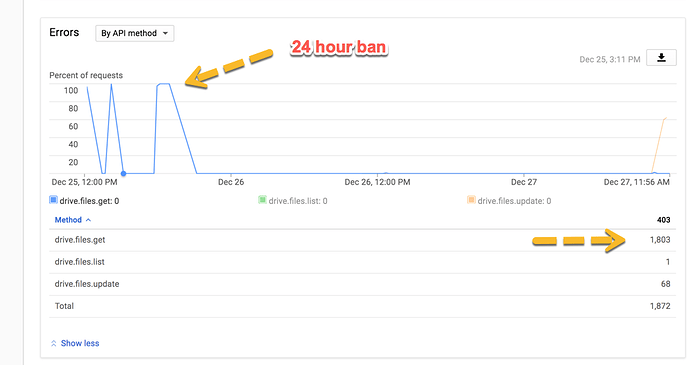

So I did some testing yesterday and got my first 24-hour ban.

I used this for a mount:

#rclone mount -v --allow-other cache: /media --cache-writes --cache-rps 4 --cache-total-chunk-size 50G --syslog --vfs-cache-mode full --uid 1000 --gid 1000 --umask 002 &

It worked fine during the cache build and the scans. The odd part is that when I started to play a file, it played for a few seconds and then 403’ed out. I didn’t have any debug logging on, so I’m not sure the logs will show anything other than the 403s.

Did I mount something goofy or miss a parameter?

The vfs-cache and the cache backend are two totally independent and different things. Are you mixing them intentionally?

Intentionally would be a strong word.

I was trying to write something to the mount and it told me in an error log to add that. Can always remove it.

My goal was to have a read/write mount so I can remove stuff as well and not make it read-only.

I use mergerfs to always write locally first, but if I want to replace an old piece of media, I want to be able to delete it as well.

So what’s the actual config I should set up to test more of the cache feature?

My current config is:

/GD mounted via plexdrive:

/home/felix/scripts/plexdrive -o allow_other -v 2 mount /GD >>/home/felix/logs/plexdrive.log 2>&1 &

rclone encrypted mount on /media:

# Mount the 3 Directories via rclone for the encrypt

/usr/bin/rclone mount --allow-other --default-permissions --uid 1000 --gid 1000 --umask 002 --syslog media: /media &

I use mergerfs to mount my TV and Movies:

# mergerfs mount

/usr/bin/mergerfs -o defaults,allow_other,use_ino,category.action=all,category.create=ff /local/movies:/media/Movies /Movies

/usr/bin/mergerfs -o defaults,allow_other,use_ino,category.action=all,category.create=ff /local/tv:/media/TV /TV

My current setup has a ~35TB library that has been using Sonarr/Radarr/Plex for months without any real problems other than I’d like to simplify a few pieces of software.

My use case is that everything is downloaded locally to my home Plex server, and a script runs once a week to move it from local to my GD. I don’t copy anything directly to the mount; it is only read/write so I can delete media that gets upgraded after that week. For example, it would remove a 720p version from my GD, and the 1080p would stay local until the weekly script moves it to the cloud.

My ban seemed to happen here when I played a file:

The mount command from that use was:

#rclone mount -v --allow-other cache: /media --cache-writes --cache-rps 4 --cache-total-chunk-size 50G --syslog --vfs-cache-mode full --uid 1000 --gid 1000 --umask 002 &

I’m not sure if I need to turn off vfs-cache-mode. I only turned it on because an error in my logs told me to when I tried to write a test file into my mounted GDrive.

No one has any comments on how to configure cache properly?

With --vfs-cache-mode full, every single file will be read completely, even if only a very small portion is needed.

I would suggest not mixing the cache remote and vfs-cache, then testing again. Keep in mind that scanning your mount frequently will also get you banned quickly if you have a short cache info age or if you purge the cache manually via SIGHUP. The cache remote currently has no support for the GDrive activity log and will not pick up any updates from external uploads.
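To make that suggestion concrete, here is a minimal sketch of the mount command posted earlier with --vfs-cache-mode full dropped and every other flag left as it was. Whether writes through the mount then behave the way you need is something to test, not something this guarantees. The mount_cache function and the DRY_RUN variable are hypothetical names, there only so the command can be printed and checked before it is actually run.

```shell
#!/bin/sh
# Sketch only: the mount command from earlier in the thread, minus
# --vfs-cache-mode full, so only the cache backend does the caching.
# mount_cache and DRY_RUN are hypothetical names, not part of rclone.
mount_cache() {
    set -- rclone mount -v --allow-other cache: /media \
        --cache-writes --cache-rps 4 --cache-total-chunk-size 50G \
        --syslog --uid 1000 --gid 1000 --umask 002
    if [ "${DRY_RUN:-1}" = "1" ]; then
        # Default: print the command instead of running it.
        echo "$*"
    else
        # DRY_RUN=0: really mount, in the background.
        "$@" &
    fi
}

mount_cache
```

Run it as is to see the command, then set DRY_RUN=0 once it looks right.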

First off, thanks for the awesome work and time you’ve put in so far.

Are there any plans in the pipeline to add in the activity log and update functionality? I think once that pops in, I’d be more comfortable doing more testing to see how it works.

My setup is automated at this point as normal plex scans still happen and I like to be able to perform library updates without a concern of being banned.

I’m testing cache and I thought I got it working nicely but I’ve run into a problem. A lot of the items in my Plex TV Show library have become unavailable. This is my mount command:

/usr/bin/rclone mount --config /home/kamos/.rclone.conf --read-only --allow-other --cache-db-purge --stats 10s -v --log-file=/home/kamos/media-cache.log media-cache: /home/kamos/.media

I’ve tried to purge the db but that didn’t work. When I do cachestats it shows 0 files. What could the problem be?

@KaMoS

You will not be able to show cachestats while the remote is mounted (the Bolt DB is busy). The file count will be zero unless you have actually read something from the mount.

- Can you access the mount in your shell?

- Can you use it directly with rclone lsd media-cache:?

- Are you using crypt?

- Remote config?

can you access the mount in your shell?

-Yes.

can you use it directly with rclone lsd media-cache:

-Yes

are you using crypt?

-Yes. Gdrive -> Cache -> Crypt

remote config?

[media-cache]

type = cache

remote = gsuite:crypt

plex_url = http://127.0.0.1:32400

plex_username = [redacted]

plex_password = [redacted]

chunk_size = 10M

info_age = 48h

chunk_total_size = 10G

plex_token = [redacted]

Absolutely…

Modes up to and including --vfs-cache-more writes make sense for making the mount more compatible with normal tools, but if it works fine without then don’t use it.

Quick clarification: the option should be --vfs-cache-mode=writes.

@remus.bunduc could cache deletion be implemented through commands? I mean: whenever I write a file called deletecacherclone into a directory, the cache for that directory is deleted. So not the whole tree is deleted, just that part. And after the delete is done, the file disappears.

This will not work for read-only mounts.

I thought this was the problem, because the cache needs to read something new, and Plex reloaded the full directory after the deletion and rebuilt the full cache for me.

When I try this command (to copy with the cache remote)

/home29/poiu0/bin/rclone copy /home29/poiu0/files/1_To_Copy gdrivecache:/1

I have this error:

23:30:02 INFO : gdrivecache: Cache DB path: /home29/poiu0/.cache/rclone/cache-backend/gdrivecache.db

2018/01/16 23:30:02 INFO : gdrivecache: Cache chunk path: /home29/poiu0/.cache/rclone/cache-backend/gdrivecache

2018/01/16 23:30:03 ERROR : /home29/poiu0/.cache/rclone/cache-backend/gdrivecache.db: Error opening storage cache. Is there another rclone running on the same remote? failed to open a cache connection to "/home29/poiu0/.cache/rclone/cache-backend/gdrivecache.db": timeout

2018/01/16 23:30:03 Failed to create file system for "gdrivecache:/1": failed to start cache db: failed to open a cache connection to "/home29/poiu0/.cache/rclone/cache-backend/gdrivecache.db": timeout

2018/01/17

Is that normal?

I am only able to use the "copy" command with a normal remote, without the cache remote.

Okay I was wondering if this would work, I am on Windows 10 btw.

I want to mount an encrypted cache folder so: gdrive > cache > gcrypcache

So if I want to upload new encrypted stuff to my gdrive, would it work to just use gdrive > gcryp?

gcryp and gcrypcache are set up the same (same password etc.). Would the stuff I upload via this method also be picked up automatically by gcrypcache?

Also a question regarding Plex: what is the benefit (if any) of setting up Plex in rclone instead of just using the Plex app?

Due to ACD giving up their unlimited plan in my country I moved to GSuite.

Now my setup looks as follows:

gdrive -> cache_gdrive (points to gdrive:) -> crypt_gdrive (points to cache_gdrive:)
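For anyone following along, that chain could look roughly like the config below. This is a sketch and not the poster's actual file: the tokens and crypt passwords a real config needs are left out on purpose, and the script only writes the fragment to a scratch file so it can be inspected.

```shell
#!/bin/sh
# Sketch only: write the three-layer remote chain described above to a
# scratch file. Tokens and crypt passwords are deliberately left out;
# a real config needs them.
conf=$(mktemp)
cat > "$conf" <<'EOF'
[gdrive]
type = drive

[cache_gdrive]
type = cache
remote = gdrive:

[crypt_gdrive]
type = crypt
remote = cache_gdrive:
EOF

# List the section names in the order they were written.
grep '^\[' "$conf"
```

Each layer points at the one before it through its remote line, so anything read or written through crypt_gdrive: goes through the cache.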

I mounted the crypt_gdrive: with only these two flags: --allow-other --allow-non-empty

So far so good! Now, I wanted to upload stuff to crypt_gdrive but I get the same error as @PEEG. Here is my log:

2018/01/19 16:45:06 ERROR : /root/.cache/rclone/cache-backend/cache_gdrive.db: Error opening storage cache. Is there another rclone running on the same remote? failed to open a cache connection to "/root/.cache/rclone/cache-backend/cache_gdrive.db": timeout

2018/01/19 16:45:07 DEBUG : cache_gdrive: wrapped gdrive:n5oen4pbldg1kijms6vte0m5rs/j86h4etl6go1uj1ee103ssqdfk/utaiuk0m0d3mptb97geqsnp8ts at root /n5oen4pbldg1kijms6vte0m5rs/j86h4etl6go1uj1ee103ssqdfk/utaiuk0m0d3mptb97geqsnp8ts

The only solution is to unmount the remote and mount it again afterwards.

@ncw, what am I doing wrong? Do I need an additional crypt remote pointed directly to gdrive instead of the cache remote?

Is there another rclone running at that moment? Or maybe the old one didn’t stop quick enough before you started the new one?

You can only use the cache from one rclone at a time unfortunately.
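Given that limit, one way to avoid hitting the timeout by accident is to check for a running rclone before starting another one against the cache remote. Below is a rough sketch, assuming pgrep is available. rclone_running and guarded_rclone are hypothetical helper names, and the check is deliberately coarse: it refuses to run if any rclone process exists, even one using a different remote.

```shell
#!/bin/sh
# Sketch only: refuse to start a second rclone while another rclone
# process (for example the mount) is still running.
# rclone_running and guarded_rclone are hypothetical helper names.

# Succeeds if any process named exactly "rclone" exists (needs pgrep).
rclone_running() {
    pgrep -x rclone >/dev/null 2>&1
}

# Run "rclone <args>" only when no other rclone process is running.
guarded_rclone() {
    if rclone_running; then
        echo "another rclone is running; the cache DB may be locked" >&2
        return 1
    fi
    rclone "$@"
}

# Example (not executed here):
# guarded_rclone copy /home29/poiu0/files/1_To_Copy gdrivecache:/1
```

This does not get around the limit. It only fails early with a clearer message, so you would still need to unmount before copying through the cache remote.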