Thanks that’s what I thought. I didn’t change any paths. But I have reanalyzed my movies and refreshed Metadata.

Hopefully it calms down!

Thanks that’s what I thought. I didn’t change any paths. But I have reanalyzed my movies and refreshed Metadata.

Hopefully it calms down!

Hmm something is definitely up with data usage.

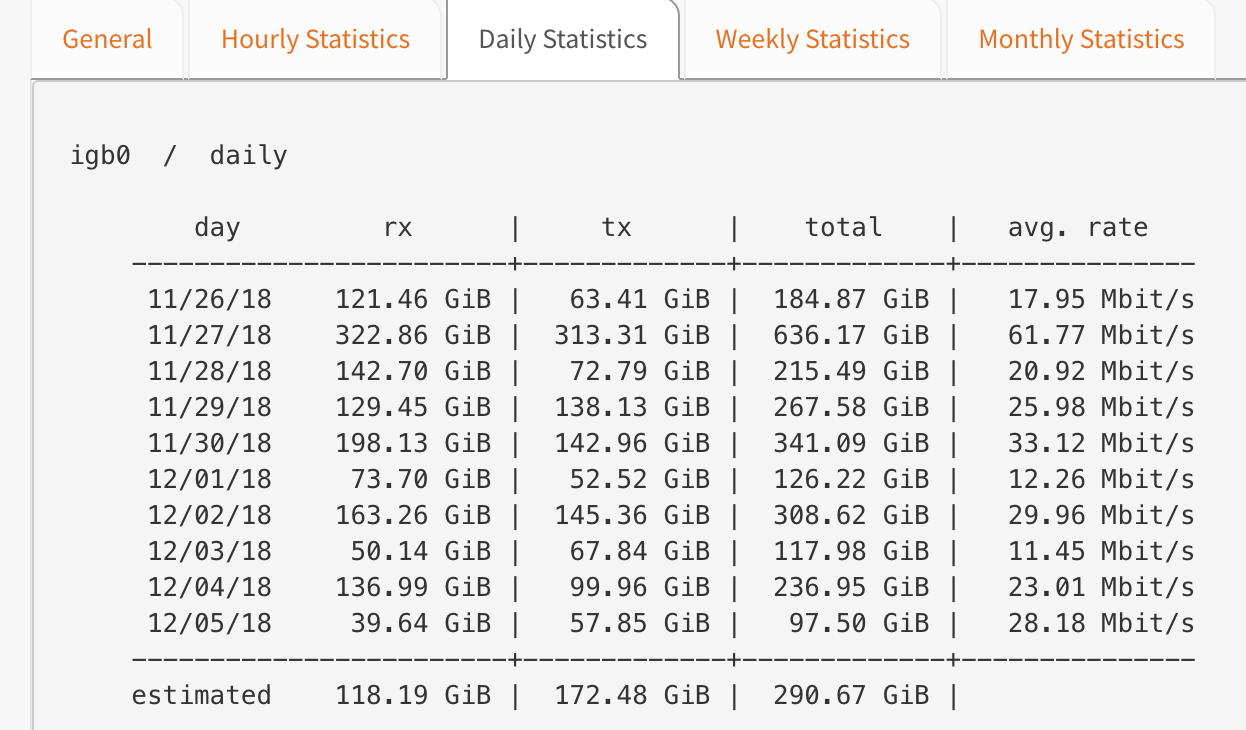

I’ve used 1392GB of incoming data in 2 days. Libraries fully scanned and analysed beforehand. All that would have been analysed would have been new content added in the past two days. No content is downloaded or postprocessed on this server. It’s used for Plex streaming only.

In this same time frame. 97GB of outgoing data has been streamed out - understand due to Plex transcoding, outgoing data will be smaller, but less than 10% of incoming seems strange.

There’s been 85 streams during this time = equating to 16GB per stream. Problem is I don’t have a single file thats 16GB in size. Most files are TV files that are 2-4GB. Some Movies are 10GB or so.

This is my config- is there anything here that would cause Rclone to download multiple chunks of the same data (ie. buffer limitations etc)?

ExecStart=/usr/bin/rclone mount plexcrypt: /home/media/plex --config /root/.config/rclone/rclone.conf --allow-other --allow-non-empty --dir-cache-time 72h --drive-chunk-size 32M --buffer-size 256M --log-level INFO --log-file /home/media/logs/rclone.log --vfs-read-chunk-size 64M --vfs-read-chunk-size-limit off

Quick question - when new content is added to the RClone mount from another server, does RClone on this end download the whole file initially?

I’m just wondering if adding files from my postprocessing server is somehow triggering data usage on this RClone mount?

You don’t need such a large buffer size. Try reducing it to something smaller like 64M. Otherwise any time a file is opened by Plex, that amount of data will be downloaded.

Deep analysis or something on? That feels like a lot of data in 2 days. I run a VPN and torrent fro my same plex box and I only usually see something like this:

That’s usually when I download a new series or something that bumps me up. Even the first analysis really doesn’t use that much bandwidth overall.

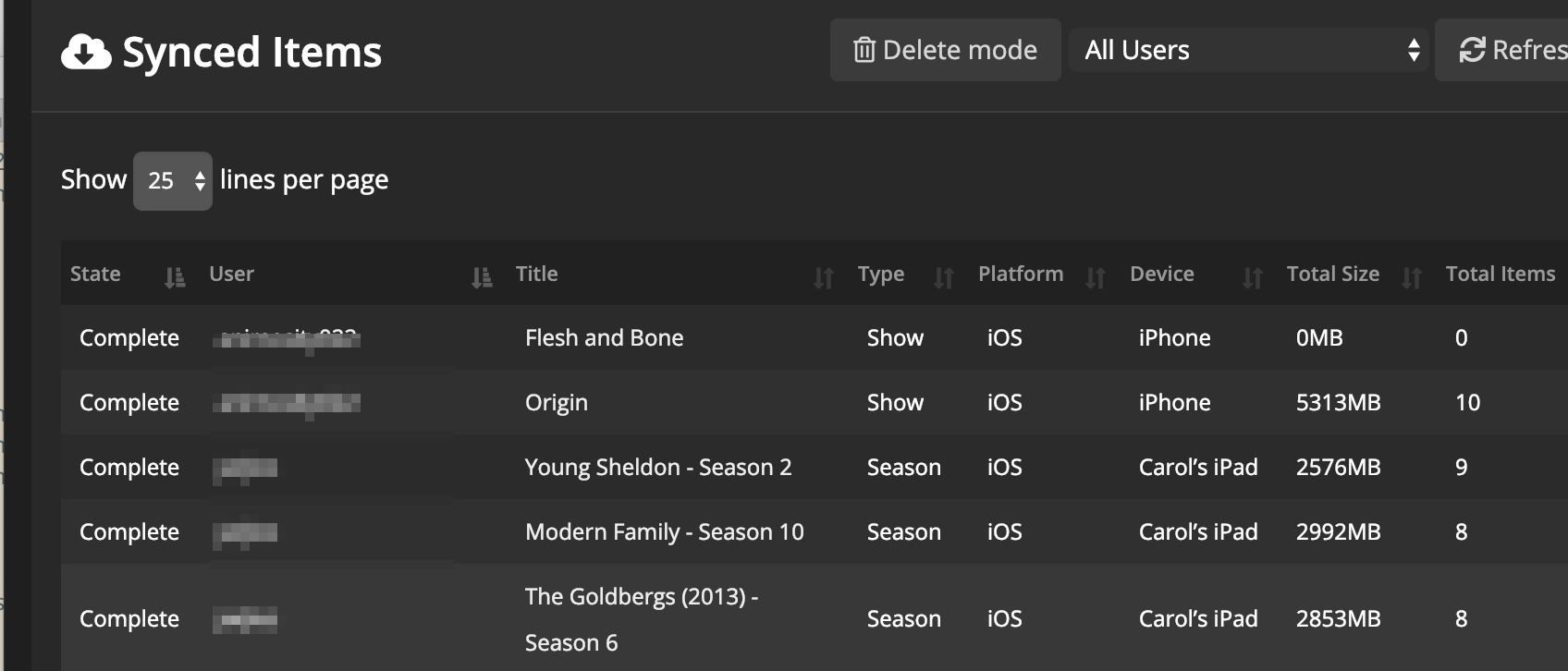

Did someone run a huge sync on a lot of stuff? It is usually something in Plex that drives it, so it’s a bit hard to figure it out without digging in the plex logs a bit and see where something might be up.

I’d check the sync logs from something like this:

and the plex logs to see if some analysis or something else is going on.

It depends on what the operation is and how much time it has to open/close the file.

You can test by running a media info or something against a file as that open and closes it a number of times.

Make the number like 2GB and run the mediainfo and analyze your download. In 4 opens, it will not have downloaded 8GB. The trick being rclone doesn’t have enough time to fully populate the buffer as it closes it out and flushes it fast.

I’ve been running the default buffer size and notice no difference in playback.

This again depends on your internet speeds too. I think its very probable that the 256M buffer will get filled pretty quickly on a 1 Gbps connection.

Yeah, same here, hence the recommendation to reduce it. The default is a paltry 16M compared to the currently configured 256M.

@Animosity022 are you still having the same start times of your playback as before? I’m noticing slow startup times again with playback. So I’m wondering if it’s on my side or on Gdrive’s side.

I think majority of my starts are a few seconds (2-3) to maybe 5-7 depending on if some transcoding happens or not.

I haven’t noticed any changes in that behavior lately.

Hmmm I used to manage that last time. Now I’m at 16 seconds (and more now). Would lowering vfs read chunks help with startup times you think?

Maybe I’ll create a new API, see if that changes things although that shouldnt be since the API is already my own…

Yeah, that seems off. Did you change anything else recently? I found very little difference in testing for start times with adjusting the chunk size.

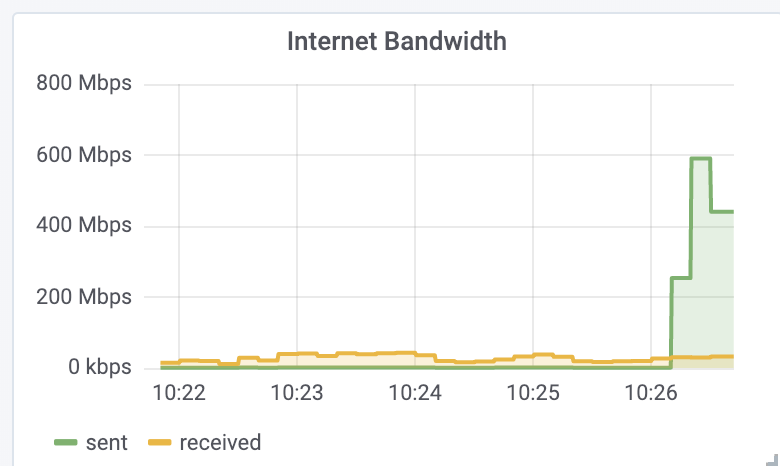

No didn’t change anything. I also see my router instantly downloading at max speed (500mbit) when starting the playback. Now that I think of it I did add -rc though which might be not working well (even though it gives an OK).

Ill try a mount without -rc, see if that changes anything.

That’s pretty normal for me and a sign things are working well. I want it to consume as much bandwidth as possible to start playback as quickly as possibly.

That’s me clicking to start playback on a movie now.

Removed the rc command and the startup times are back to around 2-5 seconds!

I had this: rclone mount --rc --allow-other --buffer-size 1G --dir-cache-time 72h --drive-chunk-size 32M --fast-list --log-level INFO --vfs-read-chunk-size 128M --vfs-read-chunk-size-limit off gdrive_crypt: /mnt/user/mount_rclone/Gdrive --stats 1m &

And changed it to rclone mount --allow-other --buffer-size 1G --dir-cache-time 72h --drive-chunk-size 32M --fast-list --log-level INFO --vfs-read-chunk-size 128M --vfs-read-chunk-size-limit off gdrive_crypt: /mnt/user/mount_rclone/Gdrive --stats 1m &

So I don’t know why it doesn’t cause problems for you?

That seems super strange as the -rc flag shouldn’t cause that. I don’t use stats or fast-list (doesn’t do anything on the mount from my understanding).

You could try to turn the log level up to DEBUG, grab the 2 logs from playback starting with and without the rc flag and post them up and we can take a look and see if anything jumps out.

I’ve compared the two logs and I see with --rc on it generates an 403 error during playback.

With rc:

2018/12/05 17:40:51 DEBUG : Films/47 Ronin (2013)/47 Ronin (2013).mkv: Open: flags=OpenReadOnly 2018/12/05 17:40:51 DEBUG : Films/47 Ronin (2013)/47 Ronin (2013).mkv: Open: flags=O_RDONLY 2018/12/05 17:40:51 DEBUG : Films/47 Ronin (2013)/47 Ronin (2013).mkv: >Open: fd=Films/47 Ronin (2013)/47 Ronin (2013).mkv (r), err=<nil> 2018/12/05 17:40:51 DEBUG : Films/47 Ronin (2013)/47 Ronin (2013).mkv: >Open: fh=&{Films/47 Ronin (2013)/47 Ronin (2013).mkv (r)}, err=<nil> 2018/12/05 17:40:51 DEBUG : &{Films/47 Ronin (2013)/47 Ronin (2013).mkv (r)}: Read: len=65536, offset=0 2018/12/05 17:40:51 DEBUG : Films/47 Ronin (2013)/47 Ronin (2013).mkv: ChunkedReader.openRange at 0 length 134217728 2018/12/05 17:40:51 DEBUG : pacer: Rate limited, sleeping for 1.211086347s (1 consecutive low level retries) 2018/12/05 17:40:51 DEBUG : pacer: low level retry 1/10 (error googleapi: Error 403: User Rate Limit Exceeded. Rate of requests for user exceed configured project quota. You may consider re-evaluating expected per-user traffic to the API and adjust project quota limits accordingly. You may monitor aggregate quota usage and adjust limits in the API Console: https://console.developers.google.com/apis/api/drive.googleapis.com/quotas?project=471649219200, userRateLimitExceeded) 2018/12/05 17:40:51 DEBUG : pacer: Rate limited, sleeping for 2.259786363s (2 consecutive low level retries) 2018/12/05 17:40:51 DEBUG : pacer: low level retry 1/10 (error googleapi: Error 403: User Rate Limit Exceeded. Rate of requests for user exceed configured project quota. You may consider re-evaluating expected per-user traffic to the API and adjust project quota limits accordingly. You may monitor aggregate quota usage and adjust limits in the API Console: https://console.developers.google.com/apis/api/drive.googleapis.com/quotas?project=471649219200, userRateLimitExceeded) 2018/12/05 17:40:51 DEBUG : pacer: Rate limited, sleeping for 4.120257644s (3 consecutive low level retries) 2018/12/05 17:40:51 DEBUG : pacer: low level retry 2/10 (error googleapi: Error 403: User Rate Limit Exceeded. Rate of requests for user exceed configured project quota. You may consider re-evaluating expected per-user traffic to the API and adjust project quota limits accordingly. You may monitor aggregate quota usage and adjust limits in the API Console: https://console.developers.google.com/apis/api/drive.googleapis.com/quotas?project=471649219200, userRateLimitExceeded) 2018/12/05 17:40:52 DEBUG : pacer: Resetting sleep to minimum 10ms on success

Without RC:> >

2018/12/05 17:45:51 DEBUG : Films/47 Ronin (2013)/: Attr: 2018/12/05 17:45:51 DEBUG : Films/47 Ronin (2013)/: >Attr: attr=valid=1s ino=0 size=0 mode=drwxrwxrwx, err=<nil> 2018/12/05 17:45:51 DEBUG : Films/47 Ronin (2013)/: Lookup: name="47 Ronin (2013).mkv" 2018/12/05 17:45:51 DEBUG : Films/47 Ronin (2013)/: >Lookup: node=Films/47 Ronin (2013)/47 Ronin (2013).mkv, err=<nil> 2018/12/05 17:45:51 DEBUG : Films/47 Ronin (2013)/47 Ronin (2013).mkv: Attr: 2018/12/05 17:45:51 DEBUG : Films/47 Ronin (2013)/47 Ronin (2013).mkv: >Attr: a=valid=1s ino=0 size=14253776033 mode=-rw-rw-rw-, err=<nil> 2018/12/05 17:45:51 DEBUG : Films/47 Ronin (2013)/47 Ronin (2013).mkv: Open: flags=OpenReadOnly 2018/12/05 17:45:51 DEBUG : Films/47 Ronin (2013)/47 Ronin (2013).mkv: Open: flags=O_RDONLY 2018/12/05 17:45:51 DEBUG : Films/47 Ronin (2013)/47 Ronin (2013).mkv: >Open: fd=Films/47 Ronin (2013)/47 Ronin (2013).mkv (r), err=<nil> 2018/12/05 17:45:51 DEBUG : Films/47 Ronin (2013)/47 Ronin (2013).mkv: >Open: fh=&{Films/47 Ronin (2013)/47 Ronin (2013).mkv (r)}, err=<nil> 2018/12/05 17:45:51 DEBUG : &{Films/47 Ronin (2013)/47 Ronin (2013).mkv (r)}: Read: len=65536, offset=0 2018/12/05 17:45:51 DEBUG : Films/47 Ronin (2013)/47 Ronin (2013).mkv: ChunkedReader.openRange at 0 length 134217728

Using 8 tends to get the API rate limiting for Google so I prefer to keep it lower.

That’s freaky as with the rc, you getting API limits but without, you aren’t.

And forgive me if I asked, but you are using your API key right? That would make it even more strange.

Yeah using my own API. Stranger is that I’ve only made 6000 API hits today, so it shouldn’t be giving 403 errors.

Those errors are more related back to # in a current span of time rather than overall hits per day.

What’s your rclone version? 1.4.5?