I know I should let the scan complete, but here is the thing: it doesn’t. Plex either goes unresponsive or never gets past 10% of the scan. The library I’m adding is only 11 TB, and I gave it more than a day to complete, but it didn’t. This only happens when I scan from the mergerFS mount, by the way. None of this happens when I scan directly from the cloud mount (the scans complete faster and don’t use much CPU). I’m only testing right now, so problems are okay. I could always go back to plexdrive + unionfs, but I want this setup to work too.

I’m on Proxmox, which is a Debian-based hypervisor that uses a modified Ubuntu kernel. I’ll try setting it up in a pure Debian VM to see if anything changes, and if it still doesn’t work, on a completely separate box running bare Debian.

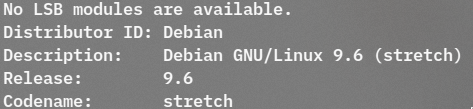

Are you on the most recent Stretch release? I want to eliminate as many variables as possible:

- Latest Debian Stretch

- Rclone GDrive remote, then a GCrypt remote wrapping the GDrive remote

- rclone is not custom-built with cmount; you’re using the regular installed version? Some sections of your GitHub seem to contain contradictory information (for example, your gmedia-rclone.service still references auto_cache, sync_read, and cmount).

- MergerFS writes to a local drive, overlaid with your rclone mount into another mount that Plex is pointed at (so your downloaded data is instantly available but not instantly uploaded)

- Do you run your gmedia-find.service out of necessity or preference? For example, my gmedia-find runs “ExecStart=/usr/bin/rclone rc vfs/refresh recursive=true” (I’ve tried both with and without it).
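For reference, `rclone rc vfs/refresh recursive=true` is a client for the mount's remote-control server: it sends an HTTP POST whose path is the command name. The Python sketch below builds the equivalent request with only the standard library. It assumes the mount was started with `--rc`, that the rc server listens on its default address `localhost:5572`, and that no rc authentication is configured; the function names are hypothetical.

```python
import json
import urllib.request

# Assumption: the rclone mount runs with --rc on the default rc address.
RC_URL = "http://localhost:5572"


def build_vfs_refresh_request(base_url: str = RC_URL,
                              recursive: bool = True) -> urllib.request.Request:
    """Build the POST that `rclone rc vfs/refresh recursive=true` sends."""
    # The rc API takes the command as the URL path and parameters as a JSON body.
    # Values are sent as strings, mirroring the key=value form on the command line.
    body = json.dumps({"recursive": "true" if recursive else "false"}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/vfs/refresh",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def vfs_refresh(base_url: str = RC_URL, timeout: float = 600.0) -> dict:
    """Send the request and return rclone's JSON reply (needs a running mount)."""
    req = build_vfs_refresh_request(base_url)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

A recursive refresh walks the whole remote, so the long timeout is deliberate; on a large library it can take minutes.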

Obviously you’re doing all this for the enjoyment of it, so I’m not nitpicking the accuracy of your generously provided documentation; I just want to make sure I’m on the same page. I plan to leave the MergerFS mount out of my initial test to limit the variables.

It could be something with mergerfs, but that seems odd too, since it’s the Plex process where you are seeing the CPU usage.

I know Plex can appear stuck at a certain point, as that’s usually when it’s analyzing files. The one thing that still seems odd to me is that even while I was scanning, I never saw CPU usage as high as you are seeing now.

What OS are you running?


Yeah, my setup is the latest updated stretch:

```
felix@gemini:~$ lsb_release -a
No LSB modules are available.
Distributor ID: Debian
Description: Debian GNU/Linux 9.6 (stretch)
Release: 9.6
Codename: stretch
```

My rclone and Go versions:

```
felix@gemini:~$ rclone version
rclone v1.45
- os/arch: linux/amd64
- go version: go1.11.2
```

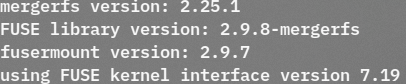

My mergerfs version:

```
felix@gemini:~$ mergerfs -V
mergerfs version: 2.18.0
FUSE library version: 2.9.7
fusermount version: 2.9.7
using FUSE kernel interface version 7.19
```

I use a standard rclone mount and use mergerfs to combine a local disk and GD, with the local branch set up to always be written to first, and I run rclone move overnight every day.

Let me review it and make sure everything is consistent. If you see something wrong, let me know and I’ll fix it, so please nitpick away.

Same as you.

I tried adding direct_io for testing, and Plex went unreachable before scanning even 5 entries, lol. I know Plex can appear stuck while scanning, and I’m okay with that, but I have never seen it go offline for hours at a time and then come back sporadically, barely responsive. Again, this only happens with mergerfs. I’ve been at this for 4-5 days now.

Are you using the packaged mergerfs or a version you compiled yourself?

```
root@gemini:~ apt search mergerfs
Sorting... Done
Full Text Search... Done
mergerfs/stable,now 2.18.0-1 amd64 [installed]
  another FUSE union filesystem
```

I got the binary from the author’s GitHub releases page.

I updated to the same version from GitHub and rescanned my library, and I don’t see any high CPU usage. My library is already fully analyzed, though, so it’s not really a like-for-like comparison with yours.

If you grab this:

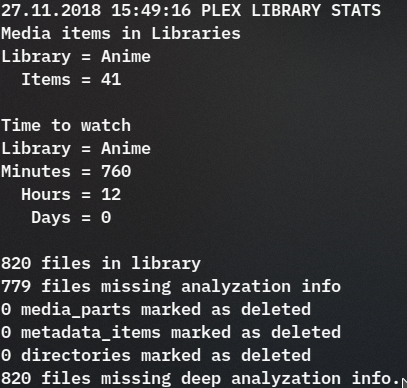

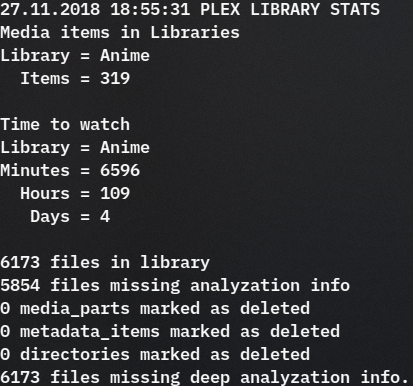

And run it as the Plex user: what does the bottom of the output, with the library/analyzed information, show?

I removed sync_read and started the scan again. These are the stats with 17 items scanned (as seen in the Plex UI).

sync_read is required because rclone is compiled with it by default, so without that option you’ll get out-of-sync reads.

So those 779 files need to get analyzed before Plex goes back to a quick scan.

Oh? I didn’t know that. I compiled rclone with cmount as per your instructions in this thread, by the way. I removed sync_read because I saw that unionfs-fuse has async_read on by default, so I thought it might be worth a try.

Yep, if you use unionfs, you need -o sync_read for that as well, or you get the same issues as with mergerfs.

You are basically on the same OS as me and running almost the same versions. I’d just use the stock rclone binary and the mergerfs release from GitHub with the same options, and let the first Plex scan finish so those last files get analyzed.

The logs you shared earlier, from while the analysis was running, didn’t show any 403s or other issues in rclone. The timing errors are more likely related to the sync_read issue, if reads were out of sync between rclone and mergerfs.

Alright, I’ll try it again and report back once the scanning is finished.

EDIT 1: I’ll let the scan finish without sync_read first, and then I’ll try with sync_read. I say this because it has scanned about 31 items so far and I haven’t seen any of the earlier irregularities or CPU usage above 30%.

EDIT 2: The rclone forums won’t let me reply since this is my first day as a user, so I’m posting my reply to your comment here: Well, if I didn’t include sync_read, then async_read is on since that is the default, no? I know you have done a lot of testing and I trust your tests. It’s just that with 35 items scanned now, there hasn’t been a single unresponsive page or any erroneous behaviour yet, so I’m curious about the results. As I said, I’ll report back with both variations.

EDIT 3: 106 entries scanned according to the UI. No lag, no issues whatsoever, and CPU usage at a normal 20-30%. I’m starting to think sync_read was the culprit.

EDIT 4: All 135 entries scanned, and we’re back to maximum CPU usage. RAM is also maxed out and the system is swapping (probably because I set --buffer-size to 4G). I should really let it do its thing now and check back in a few hours.
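rclone's documentation describes --buffer-size as memory held per open file, so the worst case grows with the number of files Plex has open at once while analyzing. A rough back-of-the-envelope sketch in Python, assuming rclone's binary size suffixes (G = 1024³ bytes); the helper names and the example concurrency are hypothetical, and real usage is usually lower because a buffer only fills as far as reads run ahead.

```python
# rclone-style size suffixes use binary multiples (K = 1024 bytes, etc.).
UNITS = {"B": 1, "K": 1024, "M": 1024 ** 2, "G": 1024 ** 3, "T": 1024 ** 4}


def parse_size(value: str) -> int:
    """Convert a size such as '4G' or '128M' to bytes.

    This sketch requires an explicit unit suffix.
    """
    value = value.strip().upper()
    if not value or value[-1] not in UNITS:
        raise ValueError(f"expected a size with a unit suffix, got {value!r}")
    return int(float(value[:-1]) * UNITS[value[-1]])


def worst_case_buffer_bytes(buffer_size: str, open_files: int) -> int:
    """Upper bound on read-ahead memory if every open file fills its buffer."""
    return parse_size(buffer_size) * open_files


def files_that_fit(ram_budget: str, buffer_size: str) -> int:
    """How many fully buffered open files fit into a RAM budget."""
    return parse_size(ram_budget) // parse_size(buffer_size)
```

For example, on a hypothetical 16 GiB machine, only four files with a full 4G buffer fit, so a handful of concurrent analysis reads can push it into swap, while 1G buffers leave room for sixteen.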

You just need the read modes to match. If rclone runs without sync_read (that is, with async_read), unionfs/mergerfs need to use async_read as well.

I did a fair amount of performance testing and sync_read does much better overall from my experience.
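The rule above boils down to comparing the effective read mode of the two FUSE layers. A small Python sketch of that check, under stated assumptions: options are comma-separated -o strings, a later sync_read/async_read overrides an earlier one, and async_read is the libfuse default when neither is given. Following this thread's statement that rclone is built with sync reads by default, rclone's default is a parameter set to "sync"; the function names are hypothetical.

```python
def read_mode(fuse_opts: str, default: str = "async") -> str:
    """Return 'sync' or 'async' for a comma-separated FUSE -o option string.

    Assumes the last sync_read/async_read option wins and falls back to
    `default` (libfuse's default is async_read) when neither is present.
    """
    mode = default
    for opt in fuse_opts.split(","):
        opt = opt.strip()
        if opt == "sync_read":
            mode = "sync"
        elif opt == "async_read":
            mode = "async"
    return mode


def modes_match(rclone_opts: str, union_opts: str,
                rclone_default: str = "sync") -> bool:
    """True when the rclone mount and the union layer read the same way.

    rclone_default reflects this thread's claim that rclone uses sync reads
    by default; change it if your build behaves differently.
    """
    return read_mode(rclone_opts, rclone_default) == read_mode(union_opts)
```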

I have things up and running in a Debian 9 VM, and so far my MediaInfo times are about 3-4 times faster. It’s still not down to single-digit seconds, but it’s progress. Plex is currently scanning my library, so I’ll know shortly whether there’s a difference in actual playback as well.

Looks like it’s working now. For whatever reason it didn’t want to run smoothly on that system, but inside the Debian VM it’s working great. My mediainfo times aren’t great, but I can now stream with no issues.

I’m going to change my find to this as well. Forgot to mention that and thanks for the tip!

Thanks for all the info, very much appreciated.

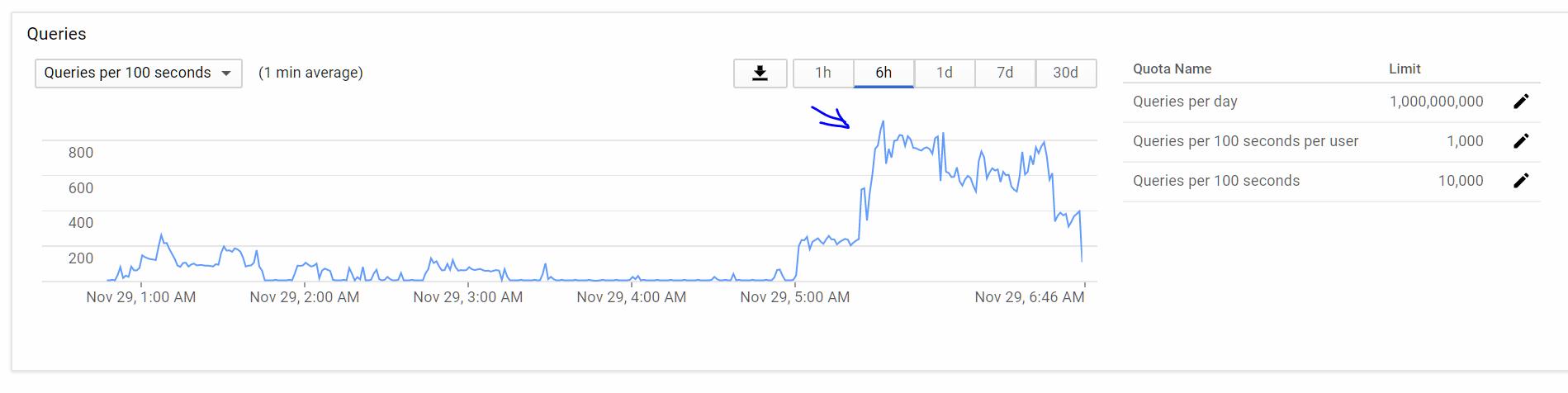

I’ve recently been having issues with hitting API bans at around 5am every morning with Plexdrive 5.

I switched over to rclone VFS using your config yesterday; however, when I ran a Plex library scan this morning, I got banned again for too many API calls within 100 seconds.

I was running a scan on approx a week’s worth of TV and Movies

I don’t have anything else running and/or calling the API - no uploads from Radarr/Sonarr etc. I’ve turned all that off.

What’s strange is that I ran the exact same library scan at 9pm the night before, and while API calls got high (maybe around 700 per 100 seconds), I didn’t get banned.
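Because the limit is expressed per 100 seconds, what matters is the busiest 100-second stretch rather than the daily total. A Python sketch that finds that peak, assuming you have one timestamp in seconds per API request from whatever monitoring you use; it says nothing about where Google actually sets the limit, and the function name is hypothetical.

```python
from collections import deque
from typing import Iterable


def peak_requests_in_window(timestamps: Iterable[float],
                            window_s: float = 100.0) -> int:
    """Largest number of requests inside any window of `window_s` seconds.

    timestamps: request times in seconds, in any order.
    """
    window = deque()
    peak = 0
    for t in sorted(timestamps):
        window.append(t)
        # Drop requests that are window_s seconds or more older than this one,
        # so the deque holds exactly the requests in (t - window_s, t].
        while t - window[0] >= window_s:
            window.popleft()
        peak = max(peak, len(window))
    return peak
```

Two scans with the same total number of calls can produce very different peaks, depending on how tightly the calls are bunched.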

This is the config I’m using:

```
[Unit]
Description=Google Drive (rclone)
After=network-online.target

[Service]
Type=simple
User=root
ExecStart=/usr/bin/rclone mount plexcrypt: /home/media/plex --config /root/.config/rclone/rclone.conf --allow-other --allow-non-empty --dir-cache-time 72h --drive-chunk-size 32M --log-level INFO --buffer-size 1G --log-file /home/media/logs/rclone.log --vfs-read-chunk-size 128M --vfs-read-chunk-size-limit off
ExecStop=/bin/fusermount -u /home/media/plex
Restart=on-failure

[Install]
WantedBy=default.target
```

The rclone config uses default settings.

System is a 6 core VPS with 16GB of RAM.

Is it possible that the VPS is too powerful/efficient at 5am, causing the ban?

Are there any tweaks I can make to the config to limit the amount of API calls made?

You don’t get banned for the number of API calls per 100 seconds.

Going over the rate limit returns a 403 rate-limit error, which tells rclone to back off.
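Backing off usually means retrying with exponentially growing delays. A generic Python sketch of such a schedule, offered only to illustrate the idea: it is not rclone's pacer, whose actual timings differ, and the base, cap, and jitter choices here are assumptions.

```python
import random
from typing import List, Optional


def backoff_delays(retries: int, base_s: float = 1.0, cap_s: float = 16.0,
                   rng: Optional[random.Random] = None) -> List[float]:
    """Delay before each retry: base_s * 2**attempt, capped at cap_s.

    If rng is given, apply "full jitter" (a uniform pick in [0, delay]) so
    that many clients backing off at once do not retry in lockstep.
    """
    delays = []
    for attempt in range(retries):
        delay = min(cap_s, base_s * (2 ** attempt))
        if rng is not None:
            delay = rng.uniform(0, delay)
        delays.append(delay)
    return delays
```

Without jitter, six retries wait 1, 2, 4, 8, 16, and 16 seconds; the cap keeps a long outage from producing unbounded waits.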

What does your rclone version show?

Did you see errors in the logs related to 403 download quota exceeded?

Geez talk about quick reply!

This is what the log shows:

```
2018/11/29 05:32:03 ERROR : Movies/Police Story 2013 (2013)/Police Story 2013 (2013).mkv: ReadFileHandle.Read error: low level retry 1/10: couldn't reopen file with offset and limit: open file failed: googleapi: Error 403: The download quota for this file has been exceeded., downloadQuotaExceeded
2018/11/29 05:32:04 ERROR : Movies/Police Story 2013 (2013)/Police Story 2013 (2013).mkv: ReadFileHandle.Read error: low level retry 2/10: couldn't reopen file with offset and limit: open file failed: googleapi: Error 403: The download quota for this file has been exceeded., downloadQuotaExceeded
2018/11/29 05:32:05 ERROR : Movies/Police Story 2013 (2013)/Police Story 2013 (2013).mkv: ReadFileHandle.Read error: low level retry 3/10: couldn't reopen file with offset and limit: open file failed: googleapi: Error 403: The download quota for this file has been exceeded., downloadQuotaExceeded
2018/11/29 05:32:06 ERROR : Movies/Police Story 2013 (2013)/Police Story 2013 (2013).mkv: ReadFileHandle.Read error: low level retry 4/10: couldn't reopen file with offset and limit: open file failed: googleapi: Error 403: The download quota for this file has been exceeded., downloadQuotaExceeded
2018/11/29 05:32:07 ERROR : Movies/Police Story 2013 (2013)/Police Story 2013 (2013).mkv: ReadFileHandle.Read error: low level retry 5/10: couldn't reopen file with offset and limit: open file failed: googleapi: Error 403: The download quota for this file has been exceeded., downloadQuotaExceeded
2018/11/29 05:32:09 ERROR : Movies/Police Story 2013 (2013)/Police Story 2013 (2013).mkv: ReadFileHandle.Read error: low level retry 6/10: couldn't reopen file with offset and limit: open file failed: googleapi: Error 403: The download quota for this file has been exceeded., downloadQuotaExceeded
2018/11/29 05:32:13 ERROR : Movies/Police Story 2013 (2013)/Police Story 2013 (2013).mkv: ReadFileHandle.Read error: low level retry 7/10: couldn't reopen file with offset and limit: open file failed: googleapi: Error 403: The download quota for this file has been exceeded., downloadQuotaExceeded
2018/11/29 05:32:14 ERROR : Movies/Police Story 2013 (2013)/Police Story 2013 (2013).mkv: ReadFileHandle.Read error: low level retry 8/10: couldn't reopen file with offset and limit: open file failed: googleapi: Error 403: The download quota for this file has been exceeded., downloadQuotaExceeded
2018/11/29 05:32:15 ERROR : Movies/Police Story 2013 (2013)/Police Story 2013 (2013).mkv: ReadFileHandle.Read error: low level retry 9/10: couldn't reopen file with offset and limit: open file failed: googleapi: Error 403: The download quota for this file has been exceeded., downloadQuotaExceeded
2018/11/29 05:32:16 ERROR : Movies/Police Story 2013 (2013)/Police Story 2013 (2013).mkv: ReadFileHandle.Read error: low level retry 10/10: couldn't reopen file with offset and limit: open file failed: googleapi: Error 403: The download quota for this file has been exceeded., downloadQuotaExceeded
```
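These errors are downloadQuotaExceeded on a single file, retried until the low-level retries ran out, rather than a per-100-second rate-limit error. A Python sketch that summarizes such lines per file; the regex is written against the sample above only, so other rclone versions or log levels may need a different pattern, and the function name is hypothetical.

```python
import re

# Written against the sample ERROR lines above; adjust for other formats.
QUOTA_RE = re.compile(
    r"^(?P<ts>\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}) ERROR : (?P<path>.+?): "
    r"ReadFileHandle\.Read error: low level retry (?P<n>\d+)/(?P<max>\d+): "
    r".*downloadQuotaExceeded"
)


def summarize_quota_errors(lines):
    """Return {path: {"errors", "first", "last", "exhausted"}} per file.

    Assumes lines are in chronological order, as in an rclone log file.
    "exhausted" is True once a line shows the final low-level retry (n == max).
    """
    summary = {}
    for line in lines:
        m = QUOTA_RE.match(line.strip())
        if not m:
            continue  # not a downloadQuotaExceeded read error
        entry = summary.setdefault(m["path"], {
            "errors": 0, "first": m["ts"], "last": m["ts"], "exhausted": False,
        })
        entry["errors"] += 1
        entry["last"] = m["ts"]
        if m["n"] == m["max"]:
            entry["exhausted"] = True
    return summary
```

To use it, pass the open log file (the unit above writes to /home/media/logs/rclone.log) to summarize_quota_errors and look for files whose retries were exhausted.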

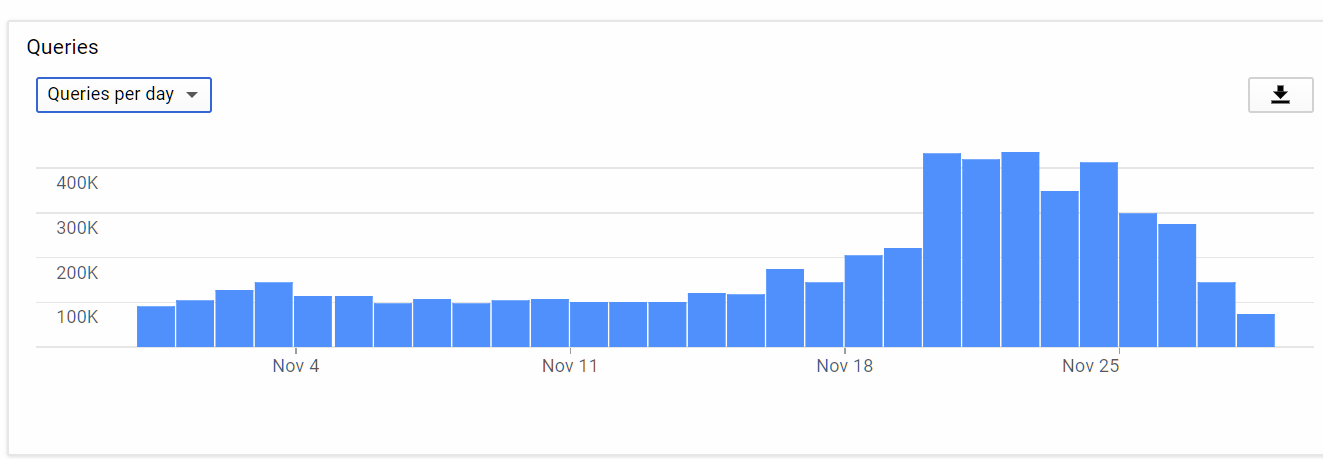

This is my daily usage; the last day is very low because I’ve stopped all other activity on the mount: