Nah, folks have gotten the upload limits removed. I believe @calisro has. I haven't bothered to ask as I don't see it as an issue for me.

My response from them was only a month ago. @calisro do you mind elaborating on how you managed this?

Shoot. I think I misspoke. I think he was talking about the API daily limits when I look back at his post. I swear I read about folks getting the upload limits removed but not sure where I saw it.

That happens. I kind of begged and pleaded because 35TB at 750GB/day took me around 50 days, including hiccups and outages. API limits CAN be increased, but the support staff I spoke with swear by the 750GB intake limit.
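For anyone planning a similar move, the arithmetic behind that figure is easy to check. This is only a rough sketch: it assumes decimal units (1 TB = 1000 GB), and `days_to_upload` is a made-up helper name, not anything from rclone.

```python
import math

def days_to_upload(total_gb: float, daily_cap_gb: float = 750.0) -> int:
    """Whole days needed to push total_gb through a fixed daily cap."""
    return math.ceil(total_gb / daily_cap_gb)

# 35 TB expressed in GB (decimal units assumed)
print(days_to_upload(35_000))
```

That gives the best case with no interruptions, so landing at around 50 days once outages are included looks about right.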

I think it was actually on https://www.reddit.com/r/DataHoarder but I can't seem to dig it up.

I just had the 1000 per second API limit raised. It helped a bit for intensive API commands. We were increased to 10,000. I'm not aware of anyone getting the 750GB daily limit increased.

No judgement here. Reddit has way too much content to efficiently find stuff.

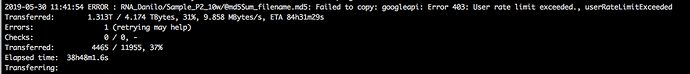

I understand what you mean. However, experience has shown me that there is something that I need to do on my side (I'll try to show here what's going on).

What I'm trying to avoid is this userRateLimitExceeded error.

I think you mean you are trying to stay within the 750GB per day quota? If so, add in --bwlimit 8.5M and let it run.

@Animosity022 is correct - you can avoid the daily upload limit with --bwlimit 8.5M
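If you're wondering where a number like 8.5M comes from, here is a rough sketch of the math. Two assumptions that aren't confirmed in this thread: that rclone reads the `M` suffix as MiB/s, and that the 750GB quota could be counted either in decimal GB or in binary GiB. The function names are hypothetical.

```python
SECONDS_PER_DAY = 24 * 60 * 60
MIB = 1024 ** 2

def bwlimit_mib_per_s(daily_cap_bytes: int) -> float:
    """Steady rate in MiB/s that would use exactly daily_cap_bytes in 24 hours."""
    return daily_cap_bytes / SECONDS_PER_DAY / MIB

def bytes_per_day(rate_mib_per_s: float) -> float:
    """Bytes moved in 24 hours at a constant rate given in MiB/s."""
    return rate_mib_per_s * MIB * SECONDS_PER_DAY

cap_decimal = 750 * 1000 ** 3  # 750 GB (assumption: decimal units)
cap_binary = 750 * 1024 ** 3   # 750 GiB (assumption: binary units)

print(f"decimal cap: {bwlimit_mib_per_s(cap_decimal):.2f} MiB/s")
print(f"binary cap:  {bwlimit_mib_per_s(cap_binary):.2f} MiB/s")
print(f"8.5 MiB/s for a full day: {bytes_per_day(8.5) / 1000 ** 3:.0f} GB")
```

Under those assumptions 8.5M sits between the two readings of the quota, so if you want a guaranteed margin a slightly lower value is the safer pick. In practice a transfer rarely holds the full limit for 24 hours straight anyway.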

You won't avoid the rate limit, though, without either requesting an increase from Google (which requires a lengthy form to be filled out) or ignoring rclone's exponential backoff and building in breaks that pause the process every so often.
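In case "exponential backoff" is unfamiliar, the general idea looks something like the sketch below. This is not rclone's actual code; `RateLimitError` and `call_with_backoff` are hypothetical names, and the exception just stands in for a rate-limit response from the API.

```python
import random
import time

class RateLimitError(Exception):
    """Hypothetical stand-in for a rate-limit response from the API."""

def call_with_backoff(op, max_tries=5, base=1.0, sleep=time.sleep):
    """Retry op(), doubling the wait (plus random jitter) after each rate-limit error."""
    for attempt in range(max_tries):
        try:
            return op()
        except RateLimitError:
            if attempt == max_tries - 1:
                raise  # out of retries, let the caller see the error
            # wait base * 1, 2, 4, ... seconds, plus up to 1 second of jitter
            sleep(base * 2 ** attempt + random.uniform(0, 1))
```

The point is that each failure makes the client wait longer before the next try, which is why the transfer slows down on its own instead of needing manual pauses.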

Personally, I just recommend you let rclone do its thing and hammer away. Google isn't going to ban you in any way for exceeding the rate limit.

You could always just use Google File Stream to copy it all. For a simple download this should be sufficient, because unless your internet is balls-to-the-wall amazing, you won't hit the theoretical 10TB/day limit I've heard rumors of.

The rate limit is just Google's way of protecting themselves from abuse. You really shouldn't worry about it unless it's causing you problems in another area.

You could try looking into this: https://support.google.com/accounts/answer/6386856. It's not rclone related, but it might work as well.

My understanding is that Google File Stream isn't available for all platforms, right? For example, I seem to be unable to find a client for Linux...

Start a new thread rather than hijacking this one if you have specific questions.