Hey guys,

I opened this thread in the past: "Google Drive for Business - slow". Basically, I was "complaining" that Gdrive-to-Gdrive transfers were super slow.

Since then I let it go for a while, as I kept everything really important on two NAS units I owned.

I've decided it's time to get rid of one of the NAS units, so I'm ready to tackle this issue once again. I've noticed that the script eventually worked until a month or so ago, when I again saw it identify a ton of "duplicated" files (which is apparently normal) and then transfer nothing.

This is the command I use for that (PowerShell, so the variables are the locations):

./rclone.exe sync --checkers=25 --transfers=25 $sourcefolder_offsitedata $destinationfolder_offsitedata --backup-dir $destinationHistoricFolder_offsitedata --suffix $suffix_offsitedata
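For anyone who wants to reproduce this, here is a minimal sketch of the same call split across lines, with `--dry-run` and `-v` added so rclone only reports what it would do. The variable values are hypothetical placeholders (my real paths are not shown here), and `gdrive` is just an assumed remote name.

```powershell
# Hypothetical placeholder values; substitute the real remote name and paths.
$sourcefolder_offsitedata              = "gdrive:offsite-data"
$destinationfolder_offsitedata         = "gdrive:backup-current"
$destinationHistoricFolder_offsitedata = "gdrive:backup-history"
$suffix_offsitedata                    = "_" + (Get-Date -Format "yyyyMMdd")

# Same sync as above; --dry-run makes no changes, -v prints what would happen.
./rclone.exe sync `
    --checkers=25 --transfers=25 `
    $sourcefolder_offsitedata $destinationfolder_offsitedata `
    --backup-dir $destinationHistoricFolder_offsitedata `
    --suffix $suffix_offsitedata `
    --dry-run -v
```

Dropping `--dry-run` turns it back into the real sync.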

Source and destination are on the same gdrive remote, which uses my own API credentials that I got from Google.

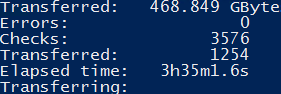

Another thing I tried was adding a new remote, configured the same way (so same API) but with a different name. It worked for about 2 hours (during which I could see it transfer ~400GB). Whenever I ran that command afterwards, it behaved exactly like the original script above.

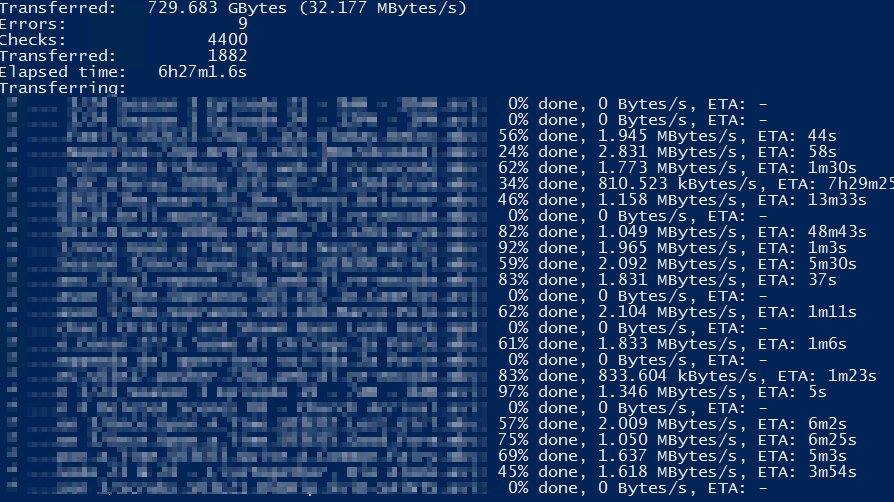

I'm now trying something else: a new remote, this time with no API of my own (so the default "next, next" configuration), and it seems to be going fine for now. I'm just afraid to stop it, so I applied QoS to rclone.exe to make sure I had some bandwidth left to work with.
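As an alternative to QoS, rclone has its own `--bwlimit` flag for capping bandwidth. Below is a minimal sketch of how that could look with my command. The `40M` value (about 40 MiB/s, which should leave some headroom on a 500 Mbps line) is an assumed number that I have not tested.

```powershell
# Assumed cap of ~40 MiB/s; rclone's --bwlimit counts bytes per second, not bits.
./rclone.exe sync `
    --checkers=25 --transfers=25 `
    --bwlimit 40M `
    $sourcefolder_offsitedata $destinationfolder_offsitedata `
    --backup-dir $destinationHistoricFolder_offsitedata `
    --suffix $suffix_offsitedata
```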

Now that you have all of the info, my question is: what do you suggest doing here? Hopefully this setup with two remotes and two different APIs works better, and I'd be happy with that, but I'd like to hear some suggestions.

NOTE

In the other thread we discussed Google File Stream, which I eventually got during the testing period. Unfortunately, great as it is, it doesn't work for the sort of backup I'm running, because it basically downloads ALL of the files and then re-uploads them. The uploads are way slower than the downloads (it looks like Drive File Stream isn't as fast as rclone when uploading; in fact, rclone/gdrive saturates my line, which is 500/500 Mbps), and that killed the OS drive.

Thanks

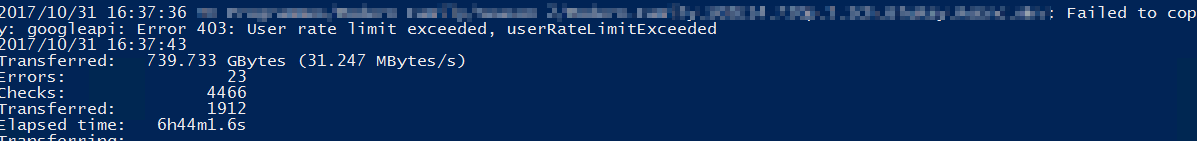

Is there a time frame or something I should wait for? I just stopped it and started it again, and indeed it's still complaining that the limit was exceeded.
