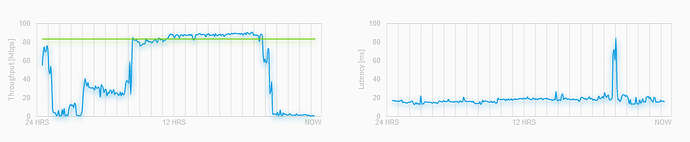

Rclone running in a Docker container with a bandwidth schedule (a --bwlimit timetable) progressively exceeds the scheduled limit until it uses all of the available bandwidth.

I also tried this script, with the same result: for a while everything runs smoothly, then for no apparent reason rclone uses more and more bandwidth until it ends up using 100% of it.

rclone v1.53.1

- os/arch: linux/amd64

- go version: go1.15

The Docker containers run on an Unraid server (version 6.8.2).

rclone mount Google drives

I am using the official rclone/rclone container.

I am using 3 different containers:

- Personal Google Drive

- Shared Google Drive

- Sync process that copies data from Google Drive folders to local storage

1.

Container parameter: --cap-add SYS_ADMIN --security-opt apparmor:unconfined

Container post argument:

mount gdrive: /mnt/gdrive --config /rclone/config/rclone.conf --allow-other --size-only --uid=1000 --gid=1000 --umask 002 --dir-cache-time 2m --buffer-size 64M --log-level INFO --log-file /rclone/logs/rclone-mount-gdrive.log --timeout 1h --vfs-cache-max-age 72h --vfs-read-chunk-size 64M --vfs-read-chunk-size-limit 5G --drive-chunk-size 128M --buffer-size 64M --user-agent='Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.77 Safari/537.36' --rc --rc-addr 127.0.0.1:5572

Volumes:

/dev/fuse:/dev/fuse

/etc/passwd:/etc/passwd

/etc/group:/etc/group

2.

Container parameter: --cap-add SYS_ADMIN --security-opt apparmor:unconfined

Container post argument:

mount tdrive: /mnt/tdrive --config /rclone/config/rclone.conf --allow-other --size-only --uid=1000 --gid=1000 --umask 002 --dir-cache-time 2m --buffer-size 64M --log-level INFO --log-file /rclone/logs/rclone-mount-gdrive.log --timeout 1h --vfs-cache-max-age 72h --vfs-read-chunk-size 64M --vfs-read-chunk-size-limit 5G --drive-chunk-size 128M --buffer-size 64M --user-agent='Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.77 Safari/537.36' --rc --rc-addr 127.0.0.1:5572

Volumes:

/dev/fuse:/dev/fuse

/etc/passwd:/etc/passwd

/etc/group:/etc/group

3.

Container parameter: --user $(id -u):$(id -g) --device /dev/fuse --cap-add SYS_ADMIN --security-opt apparmor:unconfined

Container post argument:

copy tdrive:/remote_dir /local_dir --bwlimit "00:30,10M 08:00,256K 17:00,100K" --tpslimit 8 --tpslimit-burst 5 --drive-chunk-size 256M --fast-list --drive-acknowledge-abuse --log-file /rclone/logs/rclone-sync-remote_dir.log
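For reference, here is how I read the --bwlimit timetable above, as a small Python sketch. It is not rclone's own code. It assumes, based on my understanding of the rclone documentation, that each "HH:MM,LIMIT" entry stays in effect until the next entry starts and that the last entry of the day carries over past midnight. The helper names (parse_schedule, expected_limit) are made up for this illustration.

```python
from datetime import time

# Timetable string copied from the copy container's --bwlimit argument.
SCHEDULE = "00:30,10M 08:00,256K 17:00,100K"


def parse_schedule(spec):
    """Return a list of (start_time, limit) pairs sorted by start time."""
    slots = []
    for entry in spec.split():
        clock, limit = entry.split(",", 1)
        hour, minute = clock.split(":")
        slots.append((time(int(hour), int(minute)), limit))
    return sorted(slots)


def expected_limit(spec, now):
    """Limit expected at `now`: the latest slot that has already started.

    Assumption: before the first slot of the day, the last slot of the
    previous day is still in effect.
    """
    slots = parse_schedule(spec)
    current = slots[-1][1]
    for start, limit in slots:
        if start <= now:
            current = limit
    return current


# Print the limit I expect rclone to apply at a few times of day.
for probe in (time(0, 0), time(1, 0), time(12, 0), time(18, 0)):
    print(probe.strftime("%H:%M"), expected_limit(SCHEDULE, probe))
```

Comparing these expected values with the transfer rate actually observed at the same times of day is how I judge that the limit is being exceeded.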

Rclone config file

[gdrive]

type = drive

client_id = XXXXXXXXXXXXXXXXXXXXXXXXX.apps.googleusercontent.com

client_secret = YYYYYYYYYYYYYYYYYYYYYYYY

scope = drive

token = {"access_token":"ZZZZZZZZZZZZZZZZZZZZZZ","expiry":"2020-09-14T12:48:18.575356238+02:00"}

root_folder_id = ROOT_ID

[tdrive]

type = drive

client_id = XXXXXXXXXXXXXXXXXXXXXXXXX.apps.googleusercontent.com

client_secret = YYYYYYYYYYYYYYYYYYYYYYYY

scope = drive

token = {"access_token":"ZZZZZZZZZZZZZZZZZZZZZZ","expiry":"2020-09-14T18:05:01.369531163+02:00"}

team_drive = ROOT_ID