plotterx

February 7, 2022, 6:18pm

21

The reason to use the beta version is to test whether the problem has been fixed in the beta or not.

Okay I've installed the latest version.

rclone v1.57.0

- os/version: ubuntu 20.04 (64 bit)

- os/kernel: 5.4.0-97-generic (x86_64)

- os/type: linux

- os/arch: amd64

- go/version: go1.17.2

- go/linking: static

- go/tags: none

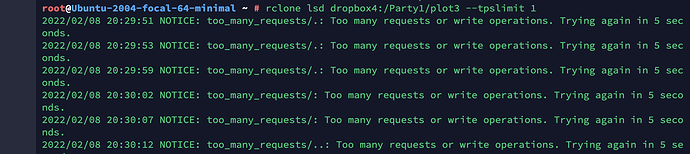

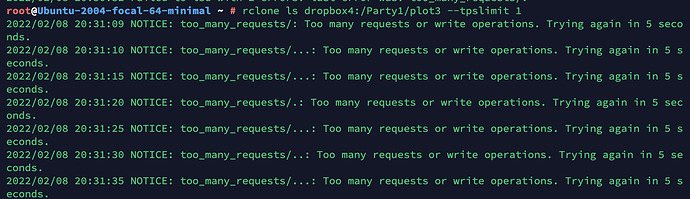

When I connect the app and just run the ls command, I can see these logs:

fusermount -uz /dropbox4

I've created a new app to test your suggestions, but I can't see the new app's statistics for 1 day.

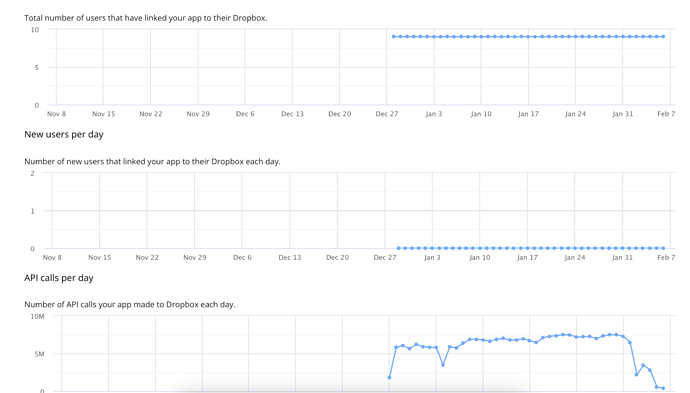

This is the old app's statistics.


The old app was getting hammered though.

10 users. Your per-day count is quite high, so it makes sense that the app was overloaded.

If you do 12 TPS continually for 24 hours, you get about 1.04m requests in a day (12 × 86,400 seconds).

To the best of my knowledge, you can make as many apps as you want so I use 4 total.

I have 2 mounts that each use 1.

Each is limited to 12 tps and since I've done that, I've never had a TPS issue.
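
As a rough check on the daily volume a sustained rate implies, here is a minimal sketch. It assumes a perfectly constant request rate for a full 24 hours, which a real mount is unlikely to sustain; the function name `requests_per_day` is made up for illustration.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def requests_per_day(tps: float) -> int:
    """Requests issued in 24 hours at a constant rate of `tps` per second."""
    return int(tps * SECONDS_PER_DAY)


if __name__ == "__main__":
    # Compare the rate used in the test mount (1) with the per-app cap (12).
    for tps in (1, 12):
        print(f"{tps} TPS -> {requests_per_day(tps):,} requests/day")
```

At 12 TPS that works out to 12 × 86,400 = 1,036,800 requests per app per day.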

plotterx

February 7, 2022, 6:29pm

23

I'll wait until tomorrow and post the newest results. The machine is powered off now. We will see.

plotterx

February 8, 2022, 6:02pm

24

hello,

rclone mount dropbox4:/Party1 /dropbox4 --log-level DEBUG --allow-non-empty --tpslimit 1

Also, I'm trying to get help from Dropbox, but they told me and all of my friends that this is not a Dropbox issue and that there has been no API update. So could you please contact Dropbox to solve this? They ask that developers contact them.

As I said before, I can provide my rclone config for debugging, etc.

Anyway, unfortunately the same error continues...

2022/02/08 18:57:06 INFO : Starting transaction limiter: max 1 transactions/s with burst 1

2022/02/08 18:57:06 DEBUG : rclone: Version "v1.57.0" starting with parameters ["rclone" "mount" "dropbox4:/Party1" "/dropbox4" "--log-level" "DEBUG" "--allow-non-empty" "--tpslimit" "1"]

2022/02/08 18:57:06 DEBUG : Creating backend with remote "dropbox4:/Party1"

2022/02/08 18:57:06 DEBUG : Using config file from "/root/.config/rclone/rclone.conf"

2022/02/08 18:57:07 DEBUG : Dropbox root '': Using root namespace "2201984529"

2022/02/08 18:57:08 DEBUG : fs cache: renaming cache item "dropbox4:/Party1" to be canonical "dropbox4:Party1"

2022/02/08 18:57:09 DEBUG : Dropbox root 'Party1': Mounting on "/dropbox4"

2022/02/08 18:57:09 DEBUG : : Root:

2022/02/08 18:57:09 DEBUG : : >Root: node=/, err=<nil>

2022/02/08 18:57:25 DEBUG : /: Attr:

2022/02/08 18:57:25 DEBUG : /: >Attr: attr=valid=1s ino=0 size=0 mode=drwxr-xr-x, err=<nil>

2022/02/08 18:57:25 DEBUG : /: ReadDirAll:

2022/02/08 18:57:25 DEBUG : /: >ReadDirAll: item=5, err=<nil>

2022/02/08 18:57:25 DEBUG : /: Lookup: name="plot1"

2022/02/08 18:57:25 DEBUG : /: >Lookup: node=plot1/, err=<nil>

2022/02/08 18:57:25 DEBUG : plot1/: Attr:

2022/02/08 18:57:25 DEBUG : plot1/: >Attr: attr=valid=1s ino=0 size=0 mode=drwxr-xr-x, err=<nil>

2022/02/08 18:57:25 DEBUG : /: Lookup: name="plot2"

2022/02/08 18:57:25 DEBUG : /: >Lookup: node=plot2/, err=<nil>

2022/02/08 18:57:25 DEBUG : plot2/: Attr:

2022/02/08 18:57:25 DEBUG : plot2/: >Attr: attr=valid=1s ino=0 size=0 mode=drwxr-xr-x, err=<nil>

2022/02/08 18:57:25 DEBUG : /: Lookup: name="plot3"

2022/02/08 18:57:25 DEBUG : /: >Lookup: node=plot3/, err=<nil>

2022/02/08 18:57:25 DEBUG : plot3/: Attr:

2022/02/08 18:57:25 DEBUG : plot3/: >Attr: attr=valid=1s ino=0 size=0 mode=drwxr-xr-x, err=<nil>

2022/02/08 18:57:34 DEBUG : /: Attr:

2022/02/08 18:57:34 DEBUG : /: >Attr: attr=valid=1s ino=0 size=0 mode=drwxr-xr-x, err=<nil>

2022/02/08 18:57:42 DEBUG : /: Lookup: name="plot1"

2022/02/08 18:57:42 DEBUG : /: >Lookup: node=plot1/, err=<nil>

2022/02/08 18:57:42 DEBUG : plot1/: Attr:

2022/02/08 18:57:42 DEBUG : plot1/: >Attr: attr=valid=1s ino=0 size=0 mode=drwxr-xr-x, err=<nil>

2022/02/08 18:57:42 DEBUG : plot1/: ReadDirAll:

2022/02/08 18:57:59 NOTICE: too_many_requests/..: Too many requests or write operations. Trying again in 5 seconds.

2022/02/08 18:57:59 DEBUG : pacer: low level retry 1/10 (error too_many_requests/..)

2022/02/08 18:57:59 DEBUG : pacer: Rate limited, increasing sleep to 5s
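
The last three log lines show a rate-limit response being handled by sleeping for the interval the server asked for and then retrying. A minimal sketch of that general pattern follows. It is not rclone's pacer code, and `RateLimited`, `call_with_retries`, and the injectable `sleep` parameter are hypothetical names used only for illustration.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Hypothetical error carrying the server-suggested wait in seconds."""

    def __init__(self, retry_after: float) -> None:
        super().__init__(f"rate limited, retry after {retry_after}s")
        self.retry_after = retry_after


def call_with_retries(
    op: Callable[[], T],
    max_retries: int = 10,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run op(); on RateLimited, wait the suggested time and try again."""
    for attempt in range(1, max_retries + 1):
        try:
            return op()
        except RateLimited as err:
            if attempt == max_retries:
                raise  # out of retries: surface the error to the caller
            sleep(err.retry_after)
    raise ValueError("max_retries must be at least 1")
```

Passing `sleep` as a parameter keeps the sketch testable without real waiting; in normal use the default `time.sleep` applies.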

plotterx

February 8, 2022, 6:06pm

25

By the way, I have a guess: I can see a folder which has several items. Maybe there is a limit on total folder size or item count. If the API allows it, you could read the directory page by page instead of reading it all at once.

You are only getting 5 items in a directory and it lists them all out.

plotterx:

2022/02/08 18:57:42 DEBUG : plot1/: ReadDirAll:

2022/02/08 18:57:59 NOTICE: too_many_requests/..: Too many requests or write operations. Trying again in 5 seconds.

2022/02/08 18:57:59 DEBUG : pacer: low level retry 1/10 (error too_many_requests/..)

2022/02/08 18:57:59 DEBUG : pacer: Rate limited, increasing sleep to 5s

and then you try to relist that same directory again and it errors out.

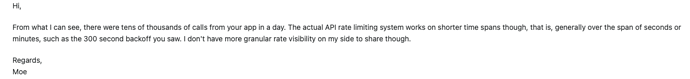

Dropbox won't tell you, me or @ncw which rate limit you have crossed. They don't document or share that information, and they'll just refer you back to respecting the rate limits.

You'll get a generic response like:

So they won't offer any more information.

The part that is odd for me is the 5 second back off, as I've never seen that and I've only seen 300 seconds in any of my logs.

I'm not aware of any hard limit but you have 5 items so that doesn't seem like you are crossing it. I have one directory with 5k folders in it.

What does the API graph show for the one you just created? Are you seeing any odd spikes there? tpslimit 1 should reduce you to 1 transaction per second and if that's tripping their rate limits on your App, something is very wrong with that.

plotterx

February 8, 2022, 6:22pm

27

Yeah, I guess I'm right.

Sorry, I'm not following what you are doing. Can you be more specific with steps?

plotterx

February 8, 2022, 6:30pm

29

In the last scenario I shared, I got the same errors, as you know. But I suspect this problem is related to the number of files or the total size of the directory. So I moved around 20 files to a different folder and tried to list that folder. It listed successfully. So I'm thinking about a solution, but I have no experience with it.

Without knowing what you are doing and not being able to peer into your computer this is very tough to help you out.

Based on what? What is the number of files? What's the size of the directory?

What was the folder count before? What is the size?

You haven't even explained what the issue is and you are asking to code a solution?

plotterx

February 8, 2022, 6:41pm

31

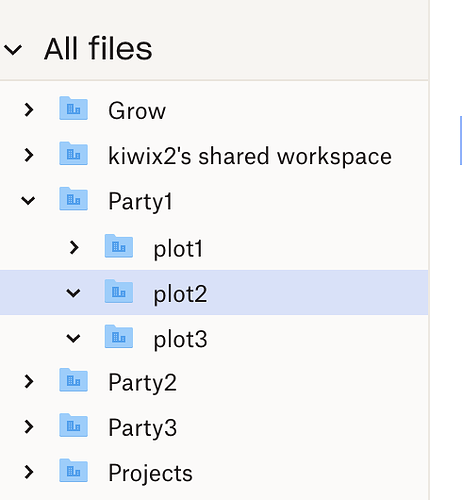

I had 900 files before this operation. (in Party1/plot2)

Each file is 100 GB, total folder size was 90 TB. (Party1/plot2)

In the end, the source folder has 872 files (87.2 TB) (Party1/plot2) and the target folder has 28 files (2.8 TB) (Party1/plot3).

Each folder is at the same level of the hierarchy.

Totally, I've 3 main folder names are Party,
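
The figures above are consistent with each other if every file is exactly 100 GB and 1 TB is taken as 1000 GB. Both are assumptions for this quick check, and `folder_size_tb` is an illustrative name.

```python
FILE_SIZE_GB = 100  # per the post: each file is 100 GB
GB_PER_TB = 1000    # assumption: decimal terabytes


def folder_size_tb(file_count: int) -> float:
    """Total folder size in TB for `file_count` equally sized files."""
    return file_count * FILE_SIZE_GB / GB_PER_TB


if __name__ == "__main__":
    folders = (("plot2 before", 900), ("plot2 after", 872), ("plot3 after", 28))
    for name, count in folders:
        print(f"{name}: {count} files, {folder_size_tb(count)} TB")
```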

What is the use case of what you are doing though? Are you running a ls command to test this and just list out files?

That's not a huge number of files nor that much data.

Ole

February 8, 2022, 7:13pm

33

This will give you a brief introduction to Chia farming and its challenges: https://www.backblaze.com/blog/chia-analysis-to-farm-or-not-to-farm/

Sorry I was aware of the Chia bit on the test case and that was worded poorly in my question.

I'm trying to narrow down a small test case that's reproducible if there is a bug in the API and/or rclone without becoming a chia farmer.

plotterx

February 8, 2022, 7:20pm

35

I only use the "ls" command to test.

ls /dropbox4/plot2

I've moved 10 TB of data to the plot3 folder and I get the same error on the new folder now. I guess 100 files or 10 TB is not allowed anymore...

Moving 10TB takes some time even on the backside of Dropbox as that's not instant.

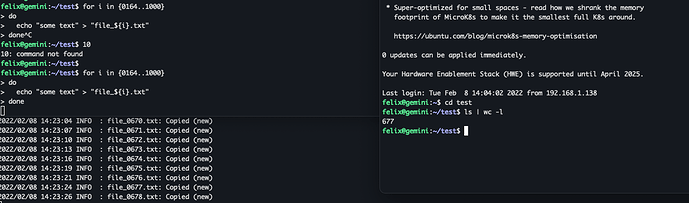

It's not a file number limit. I was in the process of dropping 1000 files into a single directory:

I have a directory with 5000 directories in it so it's not a number of items.

Dropbox (in theory) doesn't have a size limit in a folder, but I can't put 10TB in a folder easily to see.

The ls is just a read dir all so it's not even hitting the size of the files.

If a basic ls command with a TPS of 1 gives you an error, does a rclone ls on that same location produce an error going directly to the remote? No mount.

rclone ls remotename:plot1 or whatnot, wherever it's 'broken'
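
To run the same check without the mount, the listing can go straight to the remote with the flags already used earlier in this thread. The sketch below only assembles and runs that command from Python; `remotename:plot1` is the placeholder from the post above, and the helper names `build_ls_command` and `run_ls` are hypothetical.

```python
import subprocess
from typing import List


def build_ls_command(remote_path: str, tpslimit: int = 1) -> List[str]:
    """Build an `rclone ls` invocation that bypasses the mount."""
    return [
        "rclone", "ls", remote_path,
        "--tpslimit", str(tpslimit),  # same rate cap as the mount test
        "--log-level", "DEBUG",       # same log level as the mount test
    ]


def run_ls(remote_path: str) -> int:
    """Run the listing and return rclone's exit code (needs rclone on PATH)."""
    return subprocess.run(build_ls_command(remote_path)).returncode


if __name__ == "__main__":
    # Print the command rather than run it, so the sketch works without a remote.
    print(" ".join(build_ls_command("remotename:plot1")))
```

If the direct listing fails with the same `too_many_requests` error, the mount layer can be ruled out as the cause.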


plotterx

February 8, 2022, 7:33pm

37

I can provide my realtime access keys for testing

I had time to do that

Yes I'm getting the same error when I try ls or lsd commands too.

Has all this data been there for a bit or did you move stuff around?

When I moved a large amount of data (like 30-50 TB) to another folder, it took hours to catch up.

Since we have a pretty decent test case now and an easier way to reproduce it, perhaps @ncw has something he wants to collect or look at to see if it is an API type issue.

plotterx

February 8, 2022, 7:44pm

39

I haven't modified it for the last 2 months.

It can be about the file count. You can test it on your local machine too.

I'm ready to support you with any kind of information. Thank you for your patience and help.

I dropped 1000 items in a single directory and no issues:

felix@gemini:~$ rclone ls DB:test | wc -l

1000

I have 53K items on my one mount:

find . -type f | wc -l

53114

If there is an issue with number of files / directories, I can't find it / reproduce it and 1000 in a single spot is more than what you were testing.

If you have a test case or something else to reproduce the issue, that's what we are looking for.