I just tried out the Sync command to a new folder in the bucket using --transfers 10 and it did the whole 33GB in 7 min! The last Move command I ran took 55 min, so that is a huge improvement! Are there any guidelines on how many transfers are recommended/safe to use with rclone? This task that seemed huge before is beginning to look much more manageable thanks to rclone!

With b2 you can set a larger number than the default. It works well with more. You should just experiment to find what meets your needs. I use 12.

Thanks Rob,

I did a couple of tries with 10 and then jumped up to 50 (wishful thinking). It did much better with 10 than with 50. I just kicked off a Sync of the 34TB using --transfers 12 and it seems to be going well.

It is difficult to give guidelines other than "increase --transfers until the speed goes down", as there are so many things in play:

- your network speed

- your disk speed

- your disk seek time

- your CPU power

And all of those things apply at the service provider too! In addition, the service provider may have:

- network throttling per user/IP/machine

- transactions per second throttling per user/IP/machine

- uploads per second throttling per user

However, Backblaze does recommend (can't find the link right now) using lots of transfers. 12 sounds like a good number.
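The "increase --transfers until the speed goes down" advice can be tried out with a small timing helper. This is only a rough sketch: the function name `time_transfers`, the `RCLONE_BIN` variable, and the paths in the usage comment are made up for illustration, and it assumes you test on a small sample folder rather than the full data set.

```shell
# Binary to run; override RCLONE_BIN to test the helper without rclone.
RCLONE_BIN="${RCLONE_BIN:-rclone}"

# time_transfers N SRC DST
# Copies SRC into a fresh subfolder of DST with --transfers N and prints
# "N seconds". A fresh destination is used per run so that files copied
# by an earlier run are not simply skipped.
time_transfers() {
  n="$1"; src="$2"; dst="$3"
  start=$(date +%s)
  "$RCLONE_BIN" copy "$src" "$dst/transfers-$n" --transfers "$n"
  end=$(date +%s)
  echo "$n $((end - start))"
}

# Usage (hypothetical paths):
#   for n in 4 8 12 16 24; do
#     time_transfers "$n" b2:my-bucket/sample b2:my-bucket/bench
#   done
```

Pick the value where the elapsed time stops improving, and remember to delete the benchmark folders afterwards.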

Thanks Nick,

It's chugging away and all looks well. I really appreciate the time you took to answer my questions and help me get on my way. Rclone is now a standard tool in my toolbox. I can't thank you enough!

All best...

Jason

Nick,

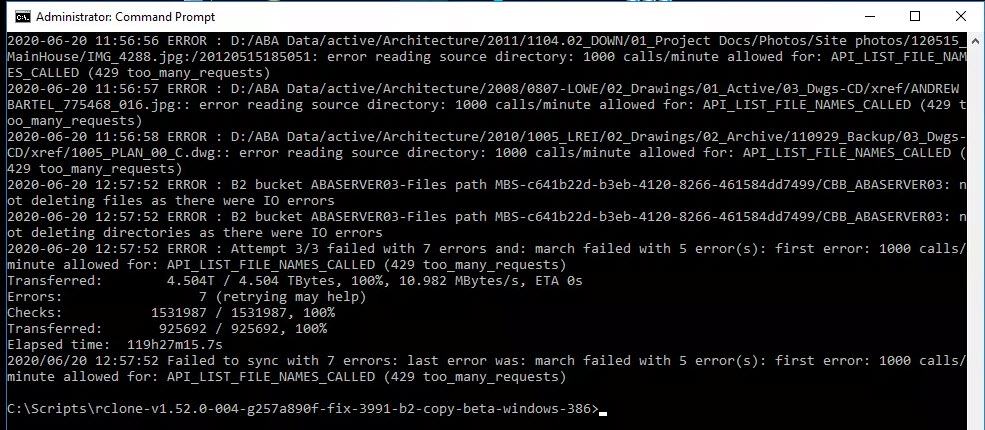

The smallest transfer (~3TB) has finished and resulted in some errors.

It said retrying may help so I ran it once more and I got these results:

2020-06-22 13:34:10 ERROR : Attempt 3/3 failed with 25 errors and: march failed with 24 error(s): first error: 1000 calls/minute allowed for: API_LIST_FILE_NAMES_CALLED (429 too_many_requests)

Transferred: 3.350T / 3.350 TBytes, 100%, 27.296 MBytes/s, ETA 0s

Errors: 25 (retrying may help)

Checks: 2662961 / 2662961, 100%

Transferred: 66010 / 66010, 100%

Elapsed time: 35h44m50.4s

2020/06/22 13:34:10 Failed to sync with 25 errors: last error was: march failed with 24 error(s): first error: 1000 calls/minute allowed for: API_LIST_FILE_NAMES_CALLED (429 too_many_requests)

I was a bit confused, as it got 7 errors the 1st time and 25 errors the 2nd time. It seems to keep transferring files on subsequent runs. Are these files that it missed the first time?

Thanks again!

Try adding --fast-list to your flags to work around the API limit.

Thanks Nick,

I did try that and I got an error about memory. Should I try it on a computer with more memory?

Ah, you could do that, or you could slow down the listing a bit with --tpslimit 10 which should keep you under the 1000 calls/minute limit.

Perfect, Thanks Nick. I will give it a go now.

Nick,

Should I continue to use the Beta version that you had sent over or should I be using the more recent release? When I ran the Sync with --fast-list --tpslimit 10 --progress

I got a window full of text like this after some time:

```
goroutine 21389 [select]:
github.com/rclone/rclone/fs/march.(*March).Run.func1.1(0x7fa69840, 0x1, 0x1, 0x135f03c0, 0x135b73a0, 0x13440bc0)
    github.com/rclone/rclone/fs/march/march.go:173 +0xfa
created by github.com/rclone/rclone/fs/march.(*March).Run.func1
    github.com/rclone/rclone/fs/march/march.go:169 +0x1f6

goroutine 21246 [select]:
github.com/rclone/rclone/fs/march.(*March).Run.func1.1(0x7507fdc0, 0x1, 0x1, 0x135f03c0, 0x135b73a0, 0x13440bc0)
    github.com/rclone/rclone/fs/march/march.go:173 +0xfa
created by github.com/rclone/rclone/fs/march.(*March).Run.func1
    github.com/rclone/rclone/fs/march/march.go:169 +0x1f6
```

You'll need to use a beta, but you can use the latest one there if you want.

Leave --fast-list off your command line (you don't have enough memory for that) and just add --tpslimit 10. That should work.

Thanks Nick. Giving it a go now. Much appreciated!

Hey Nick,

I wanted to give you an update on where I am. I was able to finish the small bucket I had to copy (~3TB) by using the --tpslimit 10 switch. Thank you so much for your help!

Now I am just waiting for the 10TB and 34TB buckets to finish their initial sync command, which has some errors. I am planning to run the same --tpslimit 10 sync command on them as well, but I can foresee that taking some time, as the 3TB bucket took about 36 hours to check. Is it safe to inch the --tpslimit value up a bit to try to get through it quicker?

These folders that I am synchronizing are backup folders. I stopped backing up to them, as I can see that causing issues or making it take longer when the data in the source is changing on a nightly basis. I was wondering if there is a way to do a type of "Augmented Sync" that would only sync files that don't exist in the destination and ignore any new files that exist in the destination but not in the source? This way I could resume my backups to the new folder while all the older files still pending sync catch up on the back end.

All best...

Jason

Great!

In theory 1000 calls per minute is about 16.6 per second, so if you stick under that you should be OK.
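For reference, the arithmetic behind that number, as a small sketch. The 10% safety margin is an arbitrary assumption of this example, not a Backblaze or rclone recommendation; the 1000 calls/minute figure comes from the error message earlier in the thread.

```shell
# Limit reported by B2 in the 429 error for API_LIST_FILE_NAMES_CALLED.
limit_per_minute=1000

# Theoretical ceiling in transactions per second (1000 / 60).
max_tps=$(LC_ALL=C awk -v l="$limit_per_minute" 'BEGIN { printf "%.2f", l / 60 }')

# Integer value 10% below the ceiling (assumed margin): l * 0.9 / 60.
safe_tps=$(( limit_per_minute * 9 / 600 ))

echo "ceiling: $max_tps/s, candidate --tpslimit value: $safe_tps"
```

So moving from --tpslimit 10 to something around 15 should still stay under the limit, provided nothing else is making list calls against the same account at the same time.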

So you want to do a sync where files don't get deleted in the destination? Is that right?

If so you want rclone copy instead of rclone sync if I've understood you correctly.

Possibly, these two folders have already copied 31 of 34TB to the new folder. Would the Copy command overwrite files that are already there or does it do a comparison and only copy files over from the source that do not exist in the destination?

No and Yes - copy does this by default. That requires scanning all the files again though which can take some time if you have lots of files...
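A sketch of what the catch-up run could look like with copy instead of sync. The function name `catch_up`, the `RCLONE_BIN` variable, and the remote paths in the usage comment are placeholders for illustration; the flag values are the ones discussed earlier in this thread.

```shell
# Binary to run; override RCLONE_BIN to inspect the command without rclone.
RCLONE_BIN="${RCLONE_BIN:-rclone}"

# catch_up SRC DST [extra rclone flags...]
# Uses "copy", which transfers missing or changed files from SRC to DST
# and never deletes anything in DST (unlike "sync").
catch_up() {
  src="$1"; dst="$2"; shift 2
  "$RCLONE_BIN" copy "$src" "$dst" --transfers 12 --tpslimit 10 --progress "$@"
}

# Usage (hypothetical paths): preview first, then run for real.
#   catch_up b2:my-bucket/old-folder b2:my-bucket/new-folder --dry-run
#   catch_up b2:my-bucket/old-folder b2:my-bucket/new-folder
```

Running with --dry-run first shows what would be copied without transferring anything, which is a cheap way to confirm the source and destination are the right way round.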

Thanks Craig, I think that is something that I could live with.

So if we started adding new files to the destination folder via the backup agent (Cloudberry), it would not affect the Sync/Copy job finishing up the transfer?

It may or may not copy these files, depending on whether that directory has been scanned or not, but it shouldn't upset the current transfer.

Thanks Nick,

I am going to give it a go. I will be sure to let you know how it goes.

Thanks again.