Could you please specify the OS and the output from rclone version?

rclone v1.51.0-054-gda5cbc19-beta

- os/arch: windows/amd64

- go version: go1.13.8

Adding the flag outputs the following:

PS C:\Users\Fernando\Desktop> ./rclone mount --async-read

Error: unknown flag: --async-read

(+ a list of all arguments as a help guide, not shown here as it's irrelevant)

Ok, Windows doesn't have that flag and it's not enabled there by default anyway. This was only for debugging on Linux, where it's enabled by default.

I hadn't merged those flags to master and thence the beta yet.

I have done now - expect them to turn up in the latest beta in 15-30 mins.

I'd like to come up with a bigger default for the read timeout, then I'll put that in a 1.51.1 release - any further thoughts on that, anyone?

Isn't this linux/unix only, or am I reading the code wrong? @ferferga was trying it on windows, which still won't work, I think?

Yes, you are right: --async-read is only implemented for linux/freebsd/macOS right now - the windows mount will accept it but won't do anything with it. The other flags (--vfs-read-wait etc.) will work on windows.

Okay, thanks both! @ncw @darthShadow

Has anyone lifted the default to 20ms and can report their results?

Or what would be the solution to this?

Edit:

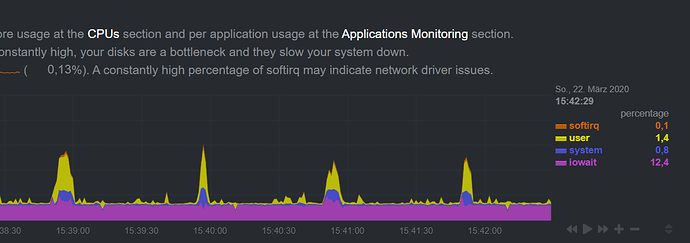

What I am wondering is: under higher load I already have 20-30% iowait. If I lift this even higher, won't it affect performance?

It will stop rclone closing the stream and seeking, which really kills the performance. I'm not sure exactly what the effect on the IOWAIT will be. Probably good!
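To make the effect of the wait a bit more concrete, here is a toy model in Python. It is only a sketch under stated assumptions, not how rclone is actually implemented: it assumes the kernel's reads for consecutive chunks can reach the reader out of order, and that a read whose predecessor turns up more than the wait time later forces a seek. The function name `count_forced_seeks` and the arrival times are made up for illustration.

```python
def count_forced_seeks(arrivals_ms, read_wait_ms):
    """Toy model of out-of-order reads (not rclone's real logic).

    arrivals_ms[i] is the (hypothetical) time at which the read for
    chunk i reaches the reader. Chunk i counts as "in sequence" once
    chunk i-1 has arrived. If chunk i-1 arrives more than read_wait_ms
    after chunk i, the reader gives up waiting and a seek is counted.
    """
    seeks = 0
    for i in range(1, len(arrivals_ms)):
        # Positive value: the predecessor arrived after this read.
        predecessor_lateness = arrivals_ms[i - 1] - arrivals_ms[i]
        if predecessor_lateness > read_wait_ms:
            seeks += 1
    return seeks


if __name__ == "__main__":
    # Hypothetical arrival times (ms) for chunks 0..5; chunks 2 and 4 are late.
    arrivals = [0, 1, 16, 3, 40, 5]
    for wait in (10, 20, 40):
        print(f"wait={wait}ms forced seeks={count_forced_seeks(arrivals, wait)}")
```

In this made-up trace a longer wait absorbs more of the late reads, so fewer seeks are counted. It ignores the time spent serving each read, so treat it as intuition only.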

I went with the latest beta and will keep an eye on it; only after that will I check your suggestion.

Thank you for your awesome work!

Curious if the iowait issue has been resolved in the latest betas?

If not, do you still recommend the below settings for people running 1.51 who are experiencing iowait issues? [I am not, but several in our work group are.]

I did some tests, going from 10ms to 40ms in 10ms steps.

Only with 40ms did I get a clean netdata CPU log without constant IOWAIT.

Edit: my systemd unit, with v1.51.0-116-g36717c7d-beta:

--allow-other \

--umask 0007 \

--uid 120 \

--gid 120 \

--use-mmap \

--async-read=true \

--vfs-read-wait 40ms \

--buffer-size 2G \

--fast-list \

--timeout 40m \

--dir-cache-time 96h \

--drive-chunk-size 128M \

--vfs-cache-max-age 72h \

--vfs-cache-mode writes \

--vfs-cache-max-size 100G \

--vfs-read-chunk-size 128M \

--vfs-read-chunk-size-limit off \

--log-file=/var/log/rclone/mount.log \

--log-level=INFO \

--user-agent "GoogleDriveFS/36.0.18.0 (Windows;OSVer=10.0.19041;)"

Thanks, very useful.

I didn't put this in the beta yet - I'll move it up the TODO list!

Can you see if rclone is doing seeks with the 40ms delay?

I'd expect some IOWAIT with async fetching - it is faster because the kernel issues multiple reads at once, but rclone can only respond to one at a time.

How can I do that?

The easiest way is to check the debug log for messages which look like "failed to wait for in-sequence read".
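If the debug log is large, counting those messages with a small script may be easier than scrolling through it. The Python sketch below is one way to do that. The message text is the one quoted above; the function names and the `YYYY/MM/DD HH:MM:SS` timestamp prefix used for the per-hour breakdown are assumptions, and lines without that prefix are counted under "unknown".

```python
import re
from collections import Counter

# Message text quoted in this thread; the rest of the log line layout is assumed.
MARKER = "failed to wait for in-sequence read"

# Assumed timestamp prefix, e.g. "2020/03/01 10:00:01 ...": capture date and hour.
HOUR_RE = re.compile(r"(\d{4}/\d{2}/\d{2} \d{2}):")


def count_marker(lines, marker=MARKER):
    """Return (total, per_hour) for lines that contain the marker text."""
    total = 0
    per_hour = Counter()
    for line in lines:
        if marker not in line:
            continue
        total += 1
        match = HOUR_RE.match(line)
        per_hour[match.group(1) if match else "unknown"] += 1
    return total, dict(per_hour)


def count_in_file(path):
    """Stream the file line by line so a very large log is never fully loaded."""
    with open(path, "r", errors="replace") as handle:
        return count_marker(handle)
```

For example, `total, per_hour = count_in_file("/var/log/rclone/mount.log")` would scan the log file named in the mount options posted earlier; the log needs to have been written at DEBUG level for these messages to show up.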

I'm on linux kernel 5.4.14 and don't have issues??

With rclone v1.51.0-126-g45b63e2d-beta I got the same error after 1 day 12h.

I will activate the debug log.

I have an 8h DEBUG log (1GB).

I searched for "failed to wait for in-sequence read" and got 1459 results...

I'm on Debian 10... do you think it would go better with a backports kernel?