Rclone version (output of `rclone version`):

rclone v1.56.0

Operating system: Ubuntu

Cloud storage system: Google Drive

Mount command:

```shell
/usr/bin/rclone mount gcrypt: /JellyMedia/GMedia \
  --allow-other \
  --dir-cache-time 5000h \
  --poll-interval 10s \
  --bwlimit 83M \
  --bwlimit-file 40M \
  --tpslimit 5 \
  --tpslimit-burst 1 \
  --cache-dir=/JellyMedia/RcloneCache \
  --drive-pacer-min-sleep 10ms \
  --drive-pacer-burst 1000 \
  --vfs-cache-mode full \
  --vfs-cache-max-size 200G \
  --vfs-cache-max-age 5000h \
  --vfs-cache-poll-interval 5m \
  --vfs-read-ahead 2G
```

Only you would know that based on what you have configured.

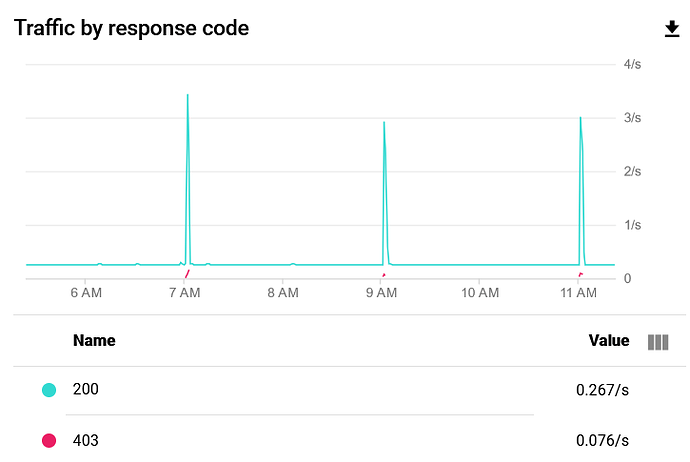

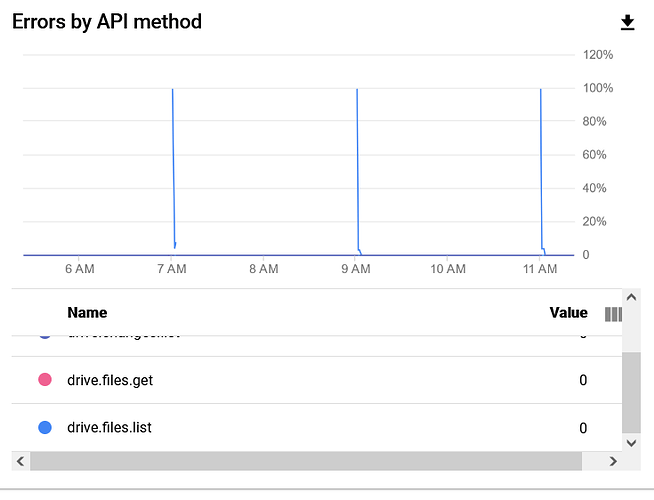

Looks like a scheduled job, I'd imagine.

I found the problem. I have a script that runs every 2 hours to upload to Google Drive. I noticed that using the `--fast-list` flag causes the problem. Is there any way to spread the requests out slowly instead of sending them all at once?

```shell
rclone move xxx gcrypt: --delete-empty-src-dirs --fast-list --min-age 2d --drive-stop-on-upload-limit --progress --transfers 15 --exclude '*partial~' --exclude 'downloads/**'
```

`--fast-list` makes fewer API calls, not more:

`--fast-list`: Use recursive list if available. Uses more memory but fewer transactions.

It's more likely that your checkers/transfers are set too high, since you have 15 transfers. A log would be juicy to look at.
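To produce the log asked for above, a minimal sketch, assuming the same two-hourly job: rclone's `-vv` (debug verbosity) and `--log-file` flags write the run's debug output, including low-level retries from the Drive pacer, to a file. The log path `/var/log/rclone-move.log` is a hypothetical choice, `xxx` stays the elided source path from the original post, and `--progress` is dropped because the job runs unattended.

```shell
#!/usr/bin/env bash
# Sketch: run the scheduled upload with debug logging so the API activity can be inspected.
# LOG_FILE is a hypothetical path; SRC keeps the elided "xxx" from the original post.
set -euo pipefail

SRC="xxx"
DEST="gcrypt:"
LOG_FILE="/var/log/rclone-move.log"

args=(
  move "$SRC" "$DEST"
  --delete-empty-src-dirs
  --fast-list
  --min-age 2d
  --drive-stop-on-upload-limit
  --transfers 15              # unchanged from the original job
  --exclude '*partial~'       # quoted so the shell does not expand the globs
  --exclude 'downloads/**'
  -vv                         # debug output, including low-level retries from the pacer
  --log-file "$LOG_FILE"      # write the log to a file instead of stderr
)

# Print the command for review; set RUN=1 to actually execute it.
printf '%q ' rclone "${args[@]}"
echo
if [[ "${RUN:-0}" == 1 ]]; then
  rclone "${args[@]}"
fi
```

Searching the resulting file for rate-limit errors shows whether the 15 transfers are what trips Google's quota.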

ncw

July 22, 2021, 11:51am


This is how you slow down the transactions per second:

`--tpslimit float`: Limit HTTP transactions per second to this.
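A minimal sketch of the upload job throttled this way, assuming the goal is to spread requests out rather than send them in bursts: `--tpslimit` caps HTTP transactions per second for the whole run, `--tpslimit-burst 1` keeps the burst at its default of 1, and `--transfers` goes back to rclone's default of 4. The specific numbers (5 transactions per second, 4 checkers) are assumptions to tune, not values confirmed in the thread; `xxx` stays the elided source path.

```shell
#!/usr/bin/env bash
# Sketch: the two-hourly upload job with its request rate capped.
# The numeric limits are assumptions to tune; SRC keeps the elided "xxx" from the post.
set -euo pipefail

SRC="xxx"
DEST="gcrypt:"

args=(
  move "$SRC" "$DEST"
  --delete-empty-src-dirs
  --fast-list                 # kept: fewer listing calls, at the cost of memory
  --min-age 2d
  --drive-stop-on-upload-limit
  --transfers 4               # rclone's default; the original job used 15
  --checkers 4                # assumption: below the default of 8
  --tpslimit 5                # at most 5 HTTP transactions per second overall
  --tpslimit-burst 1          # no bursts above that rate (this is the default)
  --exclude '*partial~'       # quoted so the shell does not expand the globs
  --exclude 'downloads/**'
)

# Print the command for review; set RUN=1 to actually execute it.
printf '%q ' rclone "${args[@]}"
echo
if [[ "${RUN:-0}" == 1 ]]; then
  rclone "${args[@]}"
fi
```

Capping transactions rather than only cutting transfers means the job slows down evenly instead of failing in bursts when Google's per-user rate limit is reached.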

For anyone else who stumbles upon this problem: `--transfers 15` was the cause. I was in the process of moving servers, and on the new one I removed that flag; it seems to be working fine now.

system

September 27, 2021, 12:40pm


This topic was automatically closed 60 days after the last reply. New replies are no longer allowed.