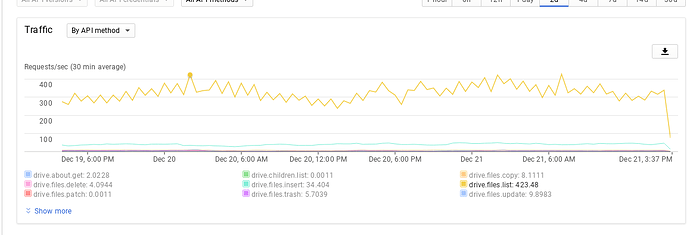

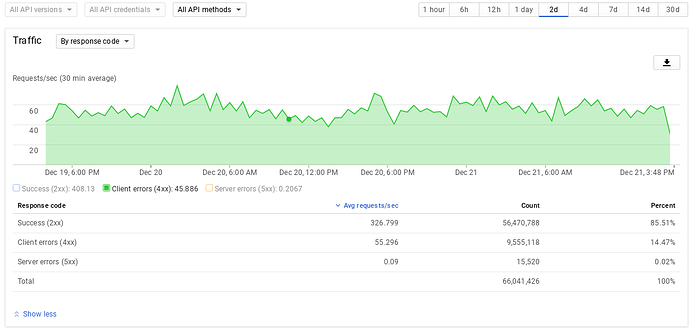

I’m getting this across my rclone instance, and I’m not seeing any throttling. I’m using my own client ID and client auth. Any thoughts on what is happening, or on what I can say to Google Support when they eventually contact me?

I’ve also checked that the OAuth token is valid and has not expired.

Thanks

Steve

2016/12/19 14:04:06 <<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<

2016/12/19 14:04:06 HTTP RESPONSE (req 0xc4206d61e0)

2016/12/19 14:04:06 HTTP/1.1 403 Forbidden

Access-Control-Allow-Credentials: false

Access-Control-Allow-Headers: Accept, Accept-Language, Authorization, Cache-Control, Content-Disposition, Content-Encoding, Content-Language, Content-Length, Content-MD5, Content-Range, Content-Type, Date, GData-Version, Host, If-Match, If-Modified-Since, If-None-Match, If-Unmodified-Since, Origin, OriginToken, Pragma, Range, Slug, Transfer-Encoding, Want-Digest, X-ClientDetails, X-GData-Client, X-GData-Key, X-Goog-AuthUser, X-Goog-PageId, X-Goog-Encode-Response-If-Executable, X-Goog-Correlation-Id, X-Goog-Request-Info, X-Goog-Experiments, x-goog-iam-authority-selector, x-goog-iam-authorization-token, X-Goog-Spatula, X-Goog-Upload-Command, X-Goog-Upload-Content-Disposition, X-Goog-Upload-Content-Length, X-Goog-Upload-Content-Type, X-Goog-Upload-File-Name, X-Goog-Upload-Offset, X-Goog-Upload-Protocol, X-Goog-Visitor-Id, X-HTTP-Method-Override, X-JavaScript-User-Agent, X-Pan-Versionid, X-Origin, X-Referer, X-Upload-Content-Length, X-Upload-Content-Type, X-Use-HTTP-Status-Code-Override, X-Ios-Bundle-Identifier, X-Android-Package, X-YouTube-VVT, X-YouTube-Page-CL, X-YouTube-Page-Timestamp

Access-Control-Allow-Methods: GET,OPTIONS

Access-Control-Allow-Origin: *

Alt-Svc: quic=":443"; ma=2592000; v="35,34"

Cache-Control: private, max-age=0

Content-Type: text/html; charset=UTF-8

Date: Mon, 19 Dec 2016 13:04:06 GMT

Expires: Mon, 19 Dec 2016 13:04:06 GMT

Server: UploadServer

X-Guploader-Uploadid: AEnB2UqEjGy12rLAYfFArnoCLId9g07z96rb0xUpx3Aaf_dGWdZ7nX2RW6jwtHU8Q9uf0NvvmDxhK31ItumFkKmRnzjLdQmYXw1BElQccwcNMLu6yhPqNaQ

Content-Length: 0

2016/12/19 14:04:06 <<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<

2016/12/19 14:04:08 : Dir.Attr
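
In case it helps anyone putting together a support ticket from a dump like the one above, here is a minimal Python sketch that pulls the status line and a few headers out of the pasted log text. The function name `summarize_response` and the choice of headers in `FIELDS` are my own assumptions about what support might ask for, not anything rclone or Google specifies.

```python
import re

# Headers assumed to be worth quoting in a support ticket (my guess, not an
# official list). Matching is case-insensitive.
FIELDS = ("Date", "Server", "X-Guploader-Uploadid")


def summarize_response(dump: str) -> dict:
    """Extract the HTTP status and selected headers from a dumped response."""
    wanted = {name.lower(): name for name in FIELDS}
    summary = {}
    for raw in dump.splitlines():
        line = raw.strip()
        if not line:
            continue
        # Status line, possibly prefixed by the log timestamp,
        # e.g. "2016/12/19 14:04:06 HTTP/1.1 403 Forbidden".
        match = re.search(r"HTTP/\d(?:\.\d)?\s+(\d{3})\s*(.*)$", line)
        if match and "status" not in summary:
            summary["status"] = int(match.group(1))
            summary["reason"] = match.group(2)
            continue
        # Header lines look like "Name: value"; split on the first colon only.
        name, sep, value = line.partition(":")
        if sep and name.lower() in wanted:
            summary[wanted[name.lower()]] = value.strip()
    return summary
```

Calling `summarize_response(text)` on the pasted dump returns a small dictionary with the status code, the reason phrase, and whichever of the listed headers were present, which is easier to quote than the full header list.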